Enterprise LLM Fine-Tuning Strategy: From Foundation Models to Competitive Advantage

Introduction

The enterprise AI landscape has reached an inflection point. Foundation models from Anthropic, OpenAI, Google, and Meta now power thousands of production applications, yet most enterprises struggle with a fundamental question: when does prompt engineering hit its limits, and when does fine-tuning become the strategic imperative?

This isn’t merely a technical decision. Fine-tuning represents a significant investment in data curation, compute resources, and organisational capability. Get it right, and you create a moat around domain-specific AI capabilities. Get it wrong, and you’ve burned capital on a model that underperforms careful prompting.

After guiding multiple enterprise clients through this decision framework, clear patterns have emerged that separate successful fine-tuning initiatives from expensive failures.

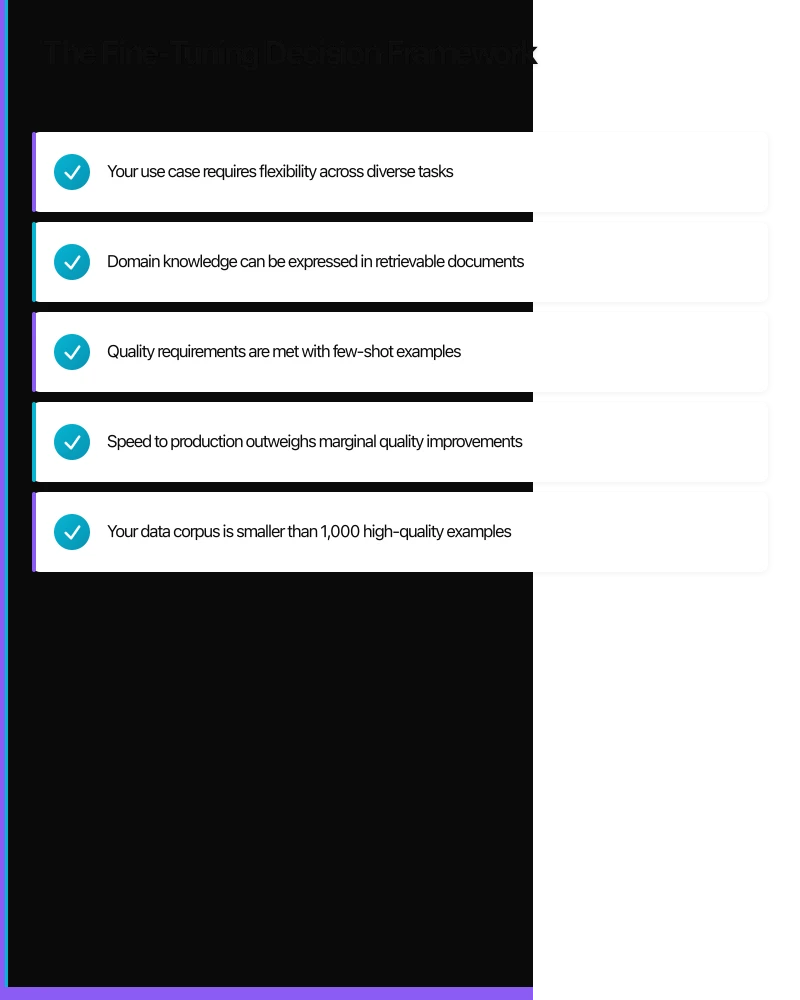

The Fine-Tuning Decision Framework

When Prompting Suffices

Before committing to fine-tuning, honestly assess whether sophisticated prompting can achieve your objectives. Modern foundation models with context windows exceeding 100,000 tokens can absorb substantial domain knowledge through well-crafted prompts.

Prompting works well when:

- Your use case requires flexibility across diverse tasks

- Domain knowledge can be expressed in retrievable documents

- Quality requirements are met with few-shot examples

- Speed to production outweighs marginal quality improvements

- Your data corpus is smaller than 1,000 high-quality examples

Many enterprises rush to fine-tuning before exhausting prompting potential. Retrieval-Augmented Generation (RAG) combined with structured prompting handles 80% of enterprise use cases without model modification.

When Fine-Tuning Becomes Essential

Fine-tuning delivers value in specific scenarios where prompting fundamentally cannot compete:

Consistent Style and Voice: Financial services firms requiring precise regulatory language, healthcare organisations needing specific clinical terminology, or brands demanding unwavering voice consistency benefit from fine-tuned models that internalise these patterns.

Latency-Critical Applications: Fine-tuned models can produce desired outputs with shorter prompts, reducing inference latency. For real-time customer interactions where every 100 milliseconds matters, this advantage compounds.

Cost Optimisation at Scale: When processing millions of requests monthly, the token savings from shorter prompts compound dramatically. A fine-tuned model requiring 500 fewer tokens per request saves substantial costs at enterprise scale.

Proprietary Knowledge Integration: When your competitive advantage stems from proprietary methodologies, processes, or domain expertise that cannot be adequately expressed in retrieval documents, fine-tuning embeds this knowledge directly.

Compliance and Consistency Requirements: Regulated industries often require demonstrable consistency in AI outputs. Fine-tuned models provide more predictable behaviour than prompt-based approaches.

The Enterprise Fine-Tuning Landscape in 2025

Platform Options

The fine-tuning ecosystem has matured significantly this year, offering enterprises multiple pathways:

AWS Bedrock Custom Models: Bedrock now supports fine-tuning for Claude, Llama, and Titan models with native integration into AWS security and governance frameworks. The managed approach simplifies operations while maintaining enterprise controls.

Azure OpenAI Fine-Tuning: Microsoft’s offering provides GPT-4 fine-tuning with enterprise security features, though token limits and training data requirements remain more restrictive than alternatives.

Google Vertex AI: Gemini model fine-tuning through Vertex AI offers strong integration with BigQuery for training data preparation and Cloud IAM for access control.

Open Source Pathways: Llama 3.1 and Mistral models enable on-premises fine-tuning for organisations with strict data sovereignty requirements. The operational overhead is substantial but governance benefits can outweigh costs.

Technique Evolution

Fine-tuning techniques have advanced beyond full model retraining:

LoRA and QLoRA: Low-Rank Adaptation enables efficient fine-tuning by training small adapter layers rather than modifying base model weights. This reduces compute requirements by 80-90% while achieving comparable results for many use cases.

Continued Pre-Training: For domain-specific applications, continued pre-training on industry corpora before task-specific fine-tuning produces stronger results than fine-tuning alone.

Reinforcement Learning from Human Feedback (RLHF): Enterprise RLHF pipelines now enable fine-tuning models to align with organisational preferences, compliance requirements, and quality standards through structured human feedback loops.

Strategic Planning for Enterprise Fine-Tuning

Data Requirements and Preparation

The quality of fine-tuning outcomes correlates directly with training data quality. Enterprises often underestimate the investment required.

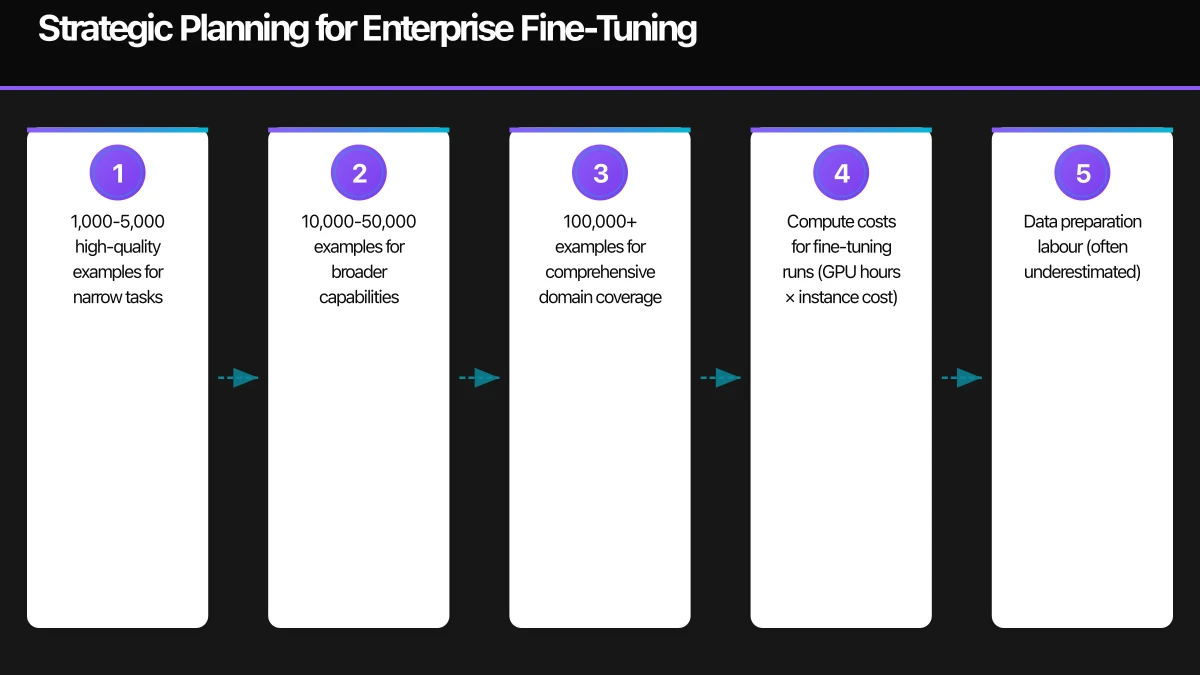

Minimum Viable Dataset

For instruction fine-tuning, plan for:

- 1,000-5,000 high-quality examples for narrow tasks

- 10,000-50,000 examples for broader capabilities

- 100,000+ examples for comprehensive domain coverage

Quality matters more than quantity. One hundred expertly curated examples outperform ten thousand noisy samples.

Data Curation Process

Establish a rigorous curation pipeline:

-

Source Identification: Map existing data assets that demonstrate desired model behaviour. Customer service transcripts, expert-written documents, and validated outputs from existing systems provide starting points.

-

Quality Filtering: Remove noise, errors, and edge cases that don’t represent desired behaviour. Automated filtering catches obvious issues; human review ensures nuance.

-

Format Standardisation: Convert source data into consistent instruction-response pairs. Inconsistent formatting degrades fine-tuning effectiveness.

-

Diversity Validation: Ensure training data covers the distribution of inputs your model will encounter. Gaps in training data become gaps in model capability.

-

Expert Review: Domain experts should validate a substantial sample of training data. Experts catch subtle issues that automated checks miss.

Governance Framework

Fine-tuned models require enhanced governance beyond base model usage:

Model Versioning and Lineage: Track which training data produced which model versions. When issues arise—and they will—you need clear lineage for investigation.

Evaluation Benchmarks: Establish task-specific benchmarks before fine-tuning begins. Without baseline measurements, you cannot quantify improvement or detect regression.

Drift Monitoring: Fine-tuned models can drift as base models update or as input distributions shift. Implement continuous evaluation against held-out test sets.

Rollback Procedures: Maintain the ability to revert to previous model versions or fall back to base models with prompting. Production incidents require rapid response.

Access Controls: Fine-tuned models may embed proprietary knowledge. Apply appropriate access controls and audit logging.

Cost Modelling

Fine-tuning economics require careful analysis:

Training Costs

- Compute costs for fine-tuning runs (GPU hours × instance cost)

- Data preparation labour (often underestimated)

- Iteration cycles (plan for 3-5 training runs minimum)

- Evaluation and validation effort

Inference Costs

- Per-token inference pricing for fine-tuned models

- Hosting costs for self-managed deployments

- Comparison against base model with longer prompts

Total Cost of Ownership

- Ongoing model maintenance and retraining

- Governance and compliance overhead

- Team capability development

Build a financial model comparing fine-tuning TCO against enhanced prompting before committing. Many organisations find prompting more cost-effective until they reach significant scale.

Implementation Roadmap

Phase 1: Assessment and Planning (Weeks 1-4)

Week 1-2: Use Case Validation

- Document specific use cases where prompting falls short

- Quantify the gap between current and required performance

- Identify success metrics for fine-tuning initiative

Week 3-4: Data Audit

- Inventory available training data sources

- Assess data quality and coverage

- Estimate curation effort required

- Identify data gaps requiring collection

Phase 2: Infrastructure and Data Preparation (Weeks 5-10)

Week 5-6: Platform Selection

- Evaluate fine-tuning platforms against requirements

- Establish security and compliance configurations

- Configure training pipelines and tooling

Week 7-10: Data Curation

- Execute data extraction and transformation

- Implement quality filtering

- Conduct expert review cycles

- Prepare evaluation datasets

Phase 3: Fine-Tuning Iterations (Weeks 11-16)

Week 11-12: Baseline Establishment

- Measure base model performance on evaluation benchmarks

- Document prompting performance ceiling

- Establish success thresholds

Week 13-16: Training Cycles

- Execute initial fine-tuning run

- Evaluate against benchmarks

- Iterate on hyperparameters and data

- Document learnings from each cycle

Phase 4: Production Deployment (Weeks 17-20)

Week 17-18: Integration Development

- Integrate fine-tuned model into application architecture

- Implement guardrails and monitoring

- Load test at production scale

Week 19-20: Controlled Rollout

- Deploy to limited user population

- Monitor performance and gather feedback

- Iterate based on production behaviour

- Graduate to full production

Measuring Fine-Tuning Success

Technical Metrics

Task Performance

- Accuracy on held-out evaluation sets

- Performance on domain-specific benchmarks

- Comparison against base model baseline

Operational Metrics

- Inference latency (p50, p95, p99)

- Token efficiency (tokens per request)

- Error rates and failure modes

Business Metrics

Efficiency Gains

- Time saved per task

- Throughput improvements

- Human review reduction

Quality Improvements

- Output quality scores (human evaluation)

- Consistency measurements

- Error and revision rates

Financial Impact

- Cost per transaction versus baseline

- ROI on fine-tuning investment

- Scale economics realisation

Common Pitfalls and Mitigation Strategies

Pitfall: Insufficient Data Quality

Many organisations rush to fine-tuning with whatever data is available. Poor data quality produces models that confidently generate incorrect outputs.

Mitigation: Invest 60% of project time in data curation. Implement multiple quality gates. Accept that high-quality training data is the primary bottleneck.

Pitfall: Overfitting to Training Distribution

Fine-tuned models can become brittle, performing well on inputs similar to training data while failing on edge cases.

Mitigation: Ensure training data diversity. Maintain held-out evaluation sets that differ from training distribution. Test adversarially before production.

Pitfall: Neglecting Base Model Updates

Foundation models improve continuously. Fine-tuned models based on older base versions miss these improvements.

Mitigation: Plan for periodic retraining on updated base models. Budget for this in ongoing operations. Monitor base model release cycles.

Pitfall: Underestimating Operational Complexity

Fine-tuned model deployment adds operational complexity: model versioning, A/B testing, rollback procedures, and drift monitoring.

Mitigation: Build operational infrastructure before fine-tuning begins. Treat this as a capability, not a one-time project.

Pitfall: Security Lapses in Training Data

Training data often contains sensitive information. Fine-tuned models can memorise and regurgitate this content.

Mitigation: Audit training data for PII and sensitive content. Implement differential privacy techniques where appropriate. Test for memorisation before deployment.

The Competitive Advantage Question

Fine-tuning creates competitive advantage when it embeds genuinely proprietary knowledge or capabilities. Consider these questions:

-

Is the knowledge proprietary? If your training data consists of public information, competitors can replicate your fine-tuned model. True advantage requires proprietary data or methodologies.

-

Is the advantage durable? Foundation models improve rapidly. Fine-tuning advantages erode as base models advance. Continuous investment maintains the edge.

-

Can competitors catch up? If your fine-tuned model requires years of accumulated data to replicate, the moat is substantial. If it requires months, the advantage is tactical, not strategic.

-

Does scale compound the advantage? Fine-tuned models that improve with usage data create flywheel effects. Static models provide temporary advantages.

The most durable competitive advantages combine proprietary training data, continuous improvement processes, and deep integration into business workflows that competitors cannot easily replicate.

Conclusion

Enterprise LLM fine-tuning has matured from experimental technique to strategic capability. The decision to fine-tune requires rigorous analysis of use case requirements, data availability, cost implications, and competitive dynamics.

For most enterprises, the path forward involves:

- Exhaust prompting and RAG potential before fine-tuning

- Identify specific use cases where fine-tuning demonstrably outperforms

- Invest heavily in training data quality

- Build operational infrastructure for model lifecycle management

- Plan for continuous improvement, not one-time projects

The enterprises creating durable AI advantages are those treating fine-tuning as a strategic capability requiring sustained investment—not a silver bullet for AI challenges.

The foundation models will continue improving. The platforms will become more accessible. The techniques will evolve. What remains constant is the need for strategic thinking about when and how to customise these capabilities for competitive advantage.

Start with the business problem. Let the technical approach follow.

Sources

- Anthropic. (2025). Claude Model Fine-Tuning Guide. Anthropic Documentation. https://docs.anthropic.com/claude/docs/fine-tuning

- AWS. (2025). Amazon Bedrock Custom Models. Amazon Web Services. https://docs.aws.amazon.com/bedrock/latest/userguide/custom-models.html

- Hu, E. J., et al. (2021). LoRA: Low-Rank Adaptation of Large Language Models. arXiv. https://arxiv.org/abs/2106.09685

- Google Cloud. (2025). Vertex AI Model Tuning. Google Cloud Documentation. https://cloud.google.com/vertex-ai/docs/generative-ai/models/tune-models

- McKinsey & Company. (2025). The State of AI: Enterprise Adoption Trends. McKinsey Global Institute. https://www.mckinsey.com/capabilities/quantumblack/our-insights/the-state-of-ai

- OpenAI. (2025). Fine-Tuning Best Practices. OpenAI Documentation. https://platform.openai.com/docs/guides/fine-tuning

Strategic guidance for enterprise technology leaders navigating AI transformation.