Building Enterprise AI/ML Feature Stores

Introduction

As enterprises move beyond individual machine learning experiments to production ML systems at scale, a common bottleneck emerges: feature engineering. Data scientists across the organisation independently build and rebuild the same features from raw data, creating duplication of effort, inconsistency between training and serving environments, and a growing maintenance burden as the number of models in production increases.

Feature stores have emerged as the architectural answer to this challenge. A feature store is a centralised platform for defining, computing, storing, and serving ML features, ensuring consistency between training and inference while enabling feature reuse across teams and models. Companies like Uber (Michelangelo), Airbnb (Zipline), and Spotify have pioneered feature store architectures, and the pattern is now being adopted broadly across the enterprise landscape.

For CTOs overseeing enterprise ML programmes, the feature store represents a critical infrastructure investment. It sits at the intersection of data engineering, ML engineering, and platform engineering, and its quality directly affects the velocity, reliability, and governance of the organisation’s ML systems.

The Feature Engineering Challenge at Enterprise Scale

Feature engineering, the process of transforming raw data into the inputs that ML models consume, is frequently estimated to account for sixty to eighty percent of the effort in building ML systems. This disproportionate investment reflects the reality that ML model performance depends as much on the quality and relevance of input features as on the sophistication of the model architecture.

At enterprise scale, several problems compound this challenge. Feature duplication occurs when multiple data science teams independently compute similar features from the same raw data. A customer churn model, a cross-sell recommendation model, and a fraud detection model may all need features derived from customer transaction history, yet each team builds its own feature pipeline because there is no systematic mechanism for discovering and reusing existing features.

Training-serving skew is a subtle but pernicious problem. Features used during model training are often computed with batch processing tools (such as Spark jobs or SQL queries against a data warehouse), while the same features in production are often computed in real time from streaming data. If the training and serving computation logic diverges, even slightly, model performance in production degrades relative to training benchmarks. For example, a batch job that buckets transactions by UTC day and a streaming job that buckets them by local time will produce different values for the same customer. Such divergence rarely raises an error, which makes it difficult to detect, and it is widely cited as one of the most common causes of ML system failures.
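One common way to surface skew is to log the feature values actually served in production and compare them with values recomputed by the offline pipeline for the same entity and timestamp. The sketch below shows that comparison under simple assumptions: the function name `skew_report`, the key layout `(feature_name, entity_id, timestamp)`, and both thresholds are hypothetical choices for illustration, not part of any particular platform.

```python
import math


def skew_report(served, recomputed, rel_tol=1e-6, max_mismatch_rate=0.01):
    """Compare feature values logged at serving time with offline recomputations.

    served, recomputed: dicts mapping (feature_name, entity_id, ts) -> float
    Returns {feature_name: mismatch_rate} for features above max_mismatch_rate.
    (Hypothetical helper; thresholds are illustrative defaults.)
    """
    totals, mismatches = {}, {}
    for key, online_value in served.items():
        if key not in recomputed:
            continue  # not yet recomputed offline; skip rather than guess
        name = key[0]
        totals[name] = totals.get(name, 0) + 1
        if not math.isclose(online_value, recomputed[key], rel_tol=rel_tol):
            mismatches[name] = mismatches.get(name, 0) + 1
    rates = {name: mismatches.get(name, 0) / totals[name] for name in totals}
    return {name: rate for name, rate in rates.items() if rate > max_mismatch_rate}
```

Run on a sample of logged requests each day, a non-empty report is an early signal that the batch and streaming logic for a feature have drifted apart.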

Feature freshness requirements vary across use cases. Some features, like a customer’s lifetime purchase total, change slowly and can be computed in daily or hourly batch processes. Others, like the number of transactions in the past five minutes, must be computed in real time from streaming data. Supporting both batch and real-time features within a consistent framework is an architectural challenge that ad hoc feature pipelines typically fail to address.
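Differing freshness requirements can be made explicit by giving each feature a staleness budget and checking it continuously. This is a minimal sketch, assuming update times are tracked in epoch seconds; the feature names and budgets are hypothetical stand-ins for the two examples in the paragraph.

```python
# Hypothetical freshness budgets in seconds for the two example features.
FRESHNESS_BUDGET = {
    "customer_lifetime_spend": 24 * 3600,  # slow-moving: a daily batch is acceptable
    "txn_count_5m": 60,                    # fast-moving: must be near real time
}


def stale_features(last_updated, now):
    """Return, sorted by name, the features whose latest update exceeds their budget.

    last_updated: dict of feature_name -> epoch seconds of the most recent update.
    A feature that has never been updated is treated as stale.
    """
    return sorted(
        name
        for name, budget in FRESHNESS_BUDGET.items()
        if now - last_updated.get(name, float("-inf")) > budget
    )
```

The same check works for both batch and streaming features; only the budget differs, which keeps freshness monitoring within one consistent framework.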

Governance and lineage become critical as the number of features and models grows. Which models depend on which features? What raw data sources contribute to each feature? How has a feature’s computation logic changed over time? Without systematic tracking, answering these questions requires manual investigation, making compliance, debugging, and impact analysis prohibitively expensive.

Feature Store Architecture

A comprehensive feature store architecture encompasses four core components: feature definition and transformation, offline storage, online storage, and feature serving.

Feature definition and transformation provide the interface through which data engineers and data scientists define features. A feature definition specifies the raw data sources, the transformation logic, and the output schema. Well-designed feature stores support feature definitions as code, enabling version control, review, testing, and CI/CD integration. The transformation logic may be expressed in SQL, Python, or a domain-specific language, depending on the platform.
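To make "feature definitions as code" concrete, the sketch below captures the three elements named above (raw data source, transformation logic, output schema) in a plain Python dataclass. It is a hypothetical, platform-agnostic structure, not the API of Feast, Tecton, or any other product; the class name, field names, and the example feature are illustrative assumptions.

```python
from dataclasses import dataclass
from typing import Any, Callable


# Hypothetical, platform-agnostic structure: not the API of any real feature store.
@dataclass(frozen=True)
class FeatureDefinition:
    name: str
    entity: str                             # join key, e.g. "customer_id"
    source: str                             # logical name of the raw data source
    transform: Callable[[list[dict]], Any]  # raw rows for one entity -> feature value
    dtype: type                             # output schema of the feature value
    version: int = 1
    description: str = ""

    def compute(self, rows: list[dict]) -> Any:
        """Apply the transformation and check the result against the output schema."""
        value = self.transform(rows)
        if not isinstance(value, self.dtype):
            raise TypeError(
                f"{self.name} v{self.version}: expected {self.dtype.__name__}, "
                f"got {type(value).__name__}"
            )
        return value


# Example definition, reviewed and versioned like any other code.
customer_lifetime_spend = FeatureDefinition(
    name="customer_lifetime_spend",
    entity="customer_id",
    source="transactions",
    transform=lambda rows: float(sum(r["amount"] for r in rows)),
    dtype=float,
    description="Total transaction amount since account creation.",
)
```

Because the definition is ordinary code, a change to `transform` shows up in code review and can be guarded by unit tests in CI before it reaches production.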

The transformation engine executes feature definitions to produce feature values. For batch features, this typically involves Spark, Flink, or SQL-based processing against the data lake or data warehouse. For real-time features, stream processing frameworks like Apache Flink or Kafka Streams compute feature values from event streams with low latency. A unified transformation framework that supports both batch and streaming computation from the same feature definition is the gold standard, because it eliminates, by construction, the skew that arises from maintaining two separate implementations of the same logic.
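The idea behind a unified framework can be shown on a small scale: one window rule drives both a batch computation over full history and an incremental streaming computation, so the two cannot disagree. This is a minimal sketch, assuming events arrive in timestamp order; the function and class names are hypothetical, and in a real platform the shared piece would be a declarative definition executed by engines such as Spark and Flink rather than a Python function.

```python
from collections import deque

WINDOW_SECONDS = 300  # "number of transactions in the past five minutes"


def window_start(as_of: float) -> float:
    """Shared window boundary: both paths derive the window from this one rule."""
    return as_of - WINDOW_SECONDS


def batch_txn_count(event_timestamps: list[float], as_of: float) -> int:
    """Batch path: recompute the feature from full history, e.g. for training data."""
    return sum(1 for ts in event_timestamps if window_start(as_of) < ts <= as_of)


class StreamingTxnCount:
    """Streaming path: maintain the same feature incrementally as events arrive.

    Assumes events arrive in timestamp order and that value() is queried at or
    after the latest event seen.
    """

    def __init__(self) -> None:
        self._events: deque = deque()

    def update(self, event_ts: float) -> None:
        self._events.append(event_ts)

    def value(self, as_of: float) -> int:
        # Evict events that have fallen out of the window, using the shared boundary.
        while self._events and self._events[0] <= window_start(as_of):
            self._events.popleft()
        return len(self._events)
```

Feeding the same events through both paths and asserting equal results, as a test suite would, is a cheap guard against the two implementations drifting apart.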

Offline storage houses the historical feature values used for model training. This is typically a data lake or data warehouse optimised for large-scale analytical queries. Features are stored as time-series data, with each feature value associated with an entity (like a customer or transaction) and a timestamp. This temporal structure enables point-in-time correct feature retrieval for training: when building a training dataset, features are joined as of the timestamp of each training example, preventing data leakage from future feature values.
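Point-in-time correctness reduces to an "as-of" join: for each training example, take the most recent feature value whose timestamp is at or before the example's timestamp. The sketch below implements that with the standard library under simplified assumptions (tuples instead of tables, one feature); the function name and row layout are hypothetical.

```python
import bisect
from collections import defaultdict


def point_in_time_join(labels, feature_rows):
    """Attach to each training example the latest feature value whose timestamp
    is <= the example's timestamp, so no value from the future leaks in.

    labels:       list of (entity_id, event_ts, label)
    feature_rows: list of (entity_id, feature_ts, value)
    Returns a list of (entity_id, event_ts, feature_value or None, label).
    """
    timestamps = defaultdict(list)
    values = defaultdict(list)
    for entity, ts, value in sorted(feature_rows, key=lambda r: (r[0], r[1])):
        timestamps[entity].append(ts)
        values[entity].append(value)

    joined = []
    for entity, event_ts, label in labels:
        # Position of the last feature timestamp <= event_ts (-1 if none exists).
        i = bisect.bisect_right(timestamps.get(entity, []), event_ts) - 1
        value = values[entity][i] if i >= 0 else None
        joined.append((entity, event_ts, value, label))
    return joined
```

On DataFrames, `pandas.merge_asof` with `by=` set to the entity column and `direction="backward"` performs the same kind of join; a naive join on entity alone would instead pick up feature values computed after the label event.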

Online storage provides low-latency access to the most recent feature values for production inference. This is typically a key-value store like Redis, DynamoDB, or Cassandra that can serve feature lookups in single-digit milliseconds. The online store is populated from the offline store through materialisation pipelines that update feature values as new data arrives; streaming features are often written to the online store directly by the stream processor.
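A materialisation step can be sketched as "keep only the latest value per entity and write it to the key-value store". In this minimal sketch a plain Python dict stands in for Redis, DynamoDB, or Cassandra, and the function name and `(feature_name, entity_id)` key layout are hypothetical.

```python
def materialise(offline_rows, online_store, feature_name):
    """Copy the most recent value per entity from offline rows into the online store.

    offline_rows: iterable of (entity_id, ts, value)
    online_store: dict-like KV store; key = (feature_name, entity_id),
                  value = (ts, value)
    Only strictly newer values overwrite, so reruns and out-of-order batches
    cannot roll a feature back. Returns the number of writes performed.
    """
    written = 0
    for entity, ts, value in offline_rows:
        key = (feature_name, entity)
        current = online_store.get(key)
        if current is None or ts > current[0]:
            online_store[key] = (ts, value)
            written += 1
    return written
```

Storing the timestamp alongside the value also lets the serving layer report how fresh each value is.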

Feature serving provides the API through which models request features at inference time. The serving layer looks up the requested features for the specified entity from the online store and returns them in the format expected by the model. This layer should also support feature transformations that must be computed at request time, such as features that depend on the specific inference request context.
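The serving path described above (look up precomputed values, fill gaps, add request-time features, return them in the model's expected order) can be sketched as a single function. Everything here is hypothetical for illustration: the feature names, default values, the `(feature_name, entity_id)` -> `(timestamp, value)` store layout, and the request-time ratio feature.

```python
# Hypothetical feature list, in the order the model expects, and fallback defaults.
MODEL_FEATURES = ["avg_txn_amount_30d", "txn_count_5m"]
DEFAULTS = {"avg_txn_amount_30d": 0.0, "txn_count_5m": 0}


def serve_features(online_store, entity_id, request):
    """Return the ordered feature vector for one inference request.

    online_store maps (feature_name, entity_id) -> (timestamp, value).
    Missing values fall back to DEFAULTS.
    """
    values = {}
    for name in MODEL_FEATURES:
        entry = online_store.get((name, entity_id))
        values[name] = entry[1] if entry is not None else DEFAULTS[name]

    # Request-time feature: depends on the incoming request, so it cannot be
    # precomputed. Here, this transaction's amount relative to the stored average.
    avg = values["avg_txn_amount_30d"]
    amount_ratio = request["amount"] / avg if avg else 0.0

    return [values[name] for name in MODEL_FEATURES] + [amount_ratio]
```

Keeping the ordering and defaults in one place, rather than in each model's client code, is what lets the serving layer return features "in the format expected by the model".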

Technology Landscape and Build-Versus-Buy

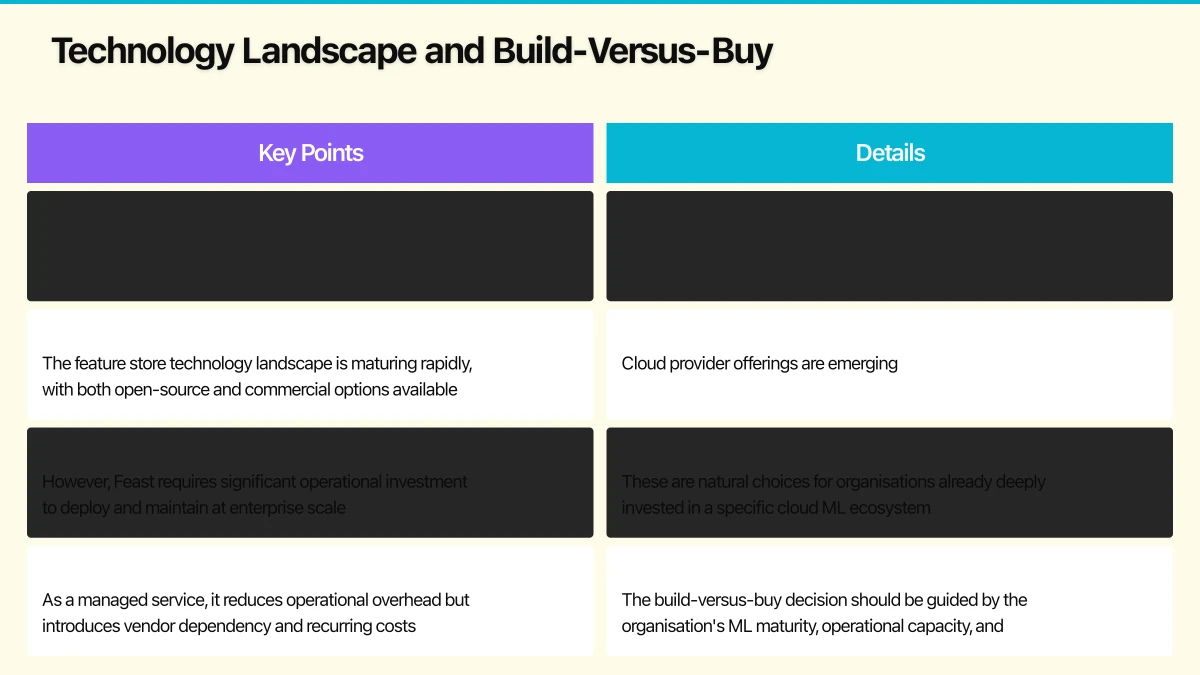

The feature store technology landscape is maturing rapidly, with both open-source and commercial options available.

Feast (Feature Store) is a widely used open-source feature store, providing feature definition, offline and online storage integration, and feature serving capabilities. Feast is designed to integrate with existing data infrastructure rather than replace it, making it a pragmatic choice for enterprises that want to add feature store capabilities to their existing data stack. However, Feast requires significant operational investment to deploy and maintain at enterprise scale.

Tecton, founded by engineers who built Uber’s Michelangelo platform, offers a managed feature platform with strong support for real-time features and enterprise governance. As a managed service, it reduces operational overhead but introduces vendor dependency and recurring costs.

Cloud and data platform vendors also offer feature stores. AWS SageMaker Feature Store, Google Vertex AI Feature Store, and Databricks Feature Store provide feature store capabilities integrated with their respective ML platforms. These are natural choices for organisations already deeply invested in a specific cloud ML ecosystem.

The build-versus-buy decision should be guided by the organisation’s ML maturity, operational capacity, and specific requirements. Organisations with strong data engineering teams and specific requirements that existing platforms do not meet may benefit from building on open-source foundations. Organisations prioritising time-to-value and operational simplicity should evaluate managed services. In all cases, the feature store should integrate with the existing data infrastructure rather than requiring a wholesale replacement of the data stack.

Organisational Adoption Strategy

Successfully implementing a feature store requires more than deploying technology. It requires organisational change in how data scientists, data engineers, and ML engineers collaborate.

The feature store should be positioned as a shared asset maintained by a platform team (typically within data engineering or ML platform engineering) and consumed by data science teams. Data engineers are responsible for defining and maintaining high-quality, well-documented features. Data scientists discover and consume features through the feature store’s catalogue and API, focusing their effort on model development rather than feature pipeline construction.

Adoption typically begins with a pilot project that demonstrates the value of the feature store approach. Select a use case with well-understood features that multiple models could benefit from. Build the initial feature set, demonstrate feature reuse across models, and quantify the time savings and consistency improvements.

Feature discovery and documentation are critical adoption enablers. A feature catalogue that allows data scientists to search for, understand, and evaluate existing features before building new ones is what transforms the feature store from a storage system into a productivity multiplier. Each feature should be documented with its business definition, computation logic, data sources, quality metrics, and usage history.
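A catalogue entry can carry exactly the documentation fields listed above, and even a simple keyword search over them supports "search before you build". This is a minimal sketch; the dataclass, the use of null rate as the example quality metric, and the ranking by number of consuming models are assumptions for illustration.

```python
from dataclasses import dataclass, field


@dataclass
class CatalogueEntry:
    name: str
    business_definition: str
    computation: str          # summary of, or link to, the feature definition
    sources: list[str]        # raw data sources
    null_rate: float          # example quality metric
    used_by: list[str] = field(default_factory=list)  # models consuming the feature


def search(catalogue, term):
    """Case-insensitive keyword search over names and business definitions,
    with the most widely reused features first."""
    term = term.lower()
    hits = [
        entry for entry in catalogue
        if term in entry.name.lower() or term in entry.business_definition.lower()
    ]
    return sorted(hits, key=lambda entry: len(entry.used_by), reverse=True)
```

Ranking by usage history nudges data scientists towards features that other teams already trust, which is where most of the reuse benefit comes from.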

Governance processes should be integrated from the start. Feature definitions should undergo review before deployment, similar to code review. Feature quality metrics should be monitored in production. Feature lineage should be tracked to enable impact analysis when data sources or computation logic change. These governance practices are essential for maintaining trust in shared features and for regulatory compliance in industries where model inputs must be auditable.
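Impact analysis over tracked lineage is a graph traversal: starting from a changed source or feature, everything reachable downstream is potentially affected. The sketch below assumes lineage is stored as a simple adjacency map; the node names and the `downstream` helper are hypothetical.

```python
from collections import deque

# Hypothetical lineage edges: upstream node -> directly dependent downstream nodes.
LINEAGE = {
    "source:transactions": ["feature:customer_lifetime_spend", "feature:txn_count_5m"],
    "source:crm": ["feature:customer_tenure"],
    "feature:customer_lifetime_spend": ["model:churn", "model:cross_sell"],
    "feature:txn_count_5m": ["model:fraud"],
    "feature:customer_tenure": ["model:churn"],
}


def downstream(node, graph):
    """Return every node reachable from `node` (breadth-first search),
    i.e. everything potentially affected if `node` changes."""
    seen, queue = set(), deque([node])
    while queue:
        for child in graph.get(queue.popleft(), []):
            if child not in seen:
                seen.add(child)
                queue.append(child)
    return seen
```

Filtering the result to `model:` nodes answers the question "which models must be revalidated if this source changes?" without manual investigation.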

The Strategic Value Proposition

The feature store’s strategic value extends beyond operational efficiency. By creating a shared, governed feature asset, the enterprise accelerates the time from ML concept to production deployment. By eliminating training-serving skew, it improves model reliability and reduces the debugging burden on ML engineers. By providing feature lineage and governance, it supports the regulatory and ethical oversight that enterprise AI systems require.

For CTOs managing growing ML programmes, the feature store is infrastructure that enables every other ML initiative on the roadmap. The investment typically becomes justified once the organisation operates more than a handful of production models, and the returns increase as the ML programme scales.