Enterprise Container Strategy: Kubernetes, Docker, and the Path to Production Scale

The Container Imperative for Enterprise

Container adoption has crossed from early-adopter territory into enterprise mainstream. According to the Cloud Native Computing Foundation’s 2024 Annual Survey, 96% of organizations are now using or evaluating Kubernetes, up from 83% in 2020. For technology leaders, the question is no longer whether to containerize, but how to do so in ways that deliver genuine business value without introducing operational complexity that negates the benefits.

The promise is compelling: consistent deployment environments, improved resource utilization, faster scaling, and portability across cloud providers. The reality is more nuanced. Container orchestration at enterprise scale requires deliberate architectural decisions, organizational changes, and operational maturity that many organizations underestimate.

This analysis provides strategic guidance for CTOs navigating container strategy decisions in 2025, drawing on implementation patterns from enterprises that have successfully made this transition—and lessons from those that struggled.

Understanding the Container Landscape in 2025

The Docker Foundation

Docker remains the de facto standard for container packaging. Its contribution to the industry extends beyond the runtime to establishing container image formats now standardized as OCI (Open Container Initiative) specifications. For development teams, Docker provides the familiar docker build and docker run workflow that makes containers accessible.

However, the runtime landscape has evolved. Kubernetes removed its built-in Docker Engine integration (dockershim) in version 1.24, and Docker Engine is no longer the primary container runtime in production Kubernetes environments. containerd, originally developed as part of Docker and now a graduated CNCF project, has become the preferred runtime for managed Kubernetes services. AWS EKS, Google GKE, and Azure AKS all default to containerd. Because both use the OCI image format, images built with Docker run unchanged on containerd.

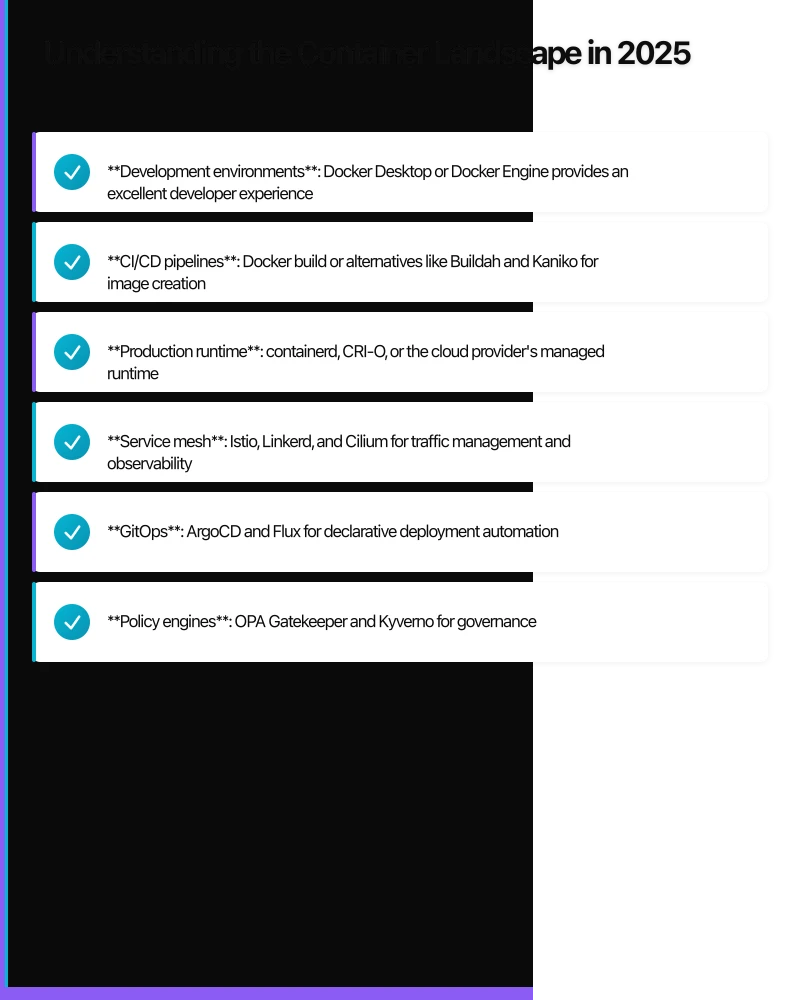

For enterprise architecture decisions, this distinction matters:

- Development environments: Docker Desktop or Docker Engine provides an excellent developer experience

- CI/CD pipelines: Docker build or alternatives like Buildah and Kaniko for image creation

- Production runtime: containerd, CRI-O, or the cloud provider’s managed runtime

Kubernetes: The Operating System for Cloud

Kubernetes has emerged as the orchestration layer that abstracts away infrastructure differences. The project ships roughly three minor releases per year (version 1.30, for example, arrived in April 2024), and recent releases have kept their focus on stability and security.

The Kubernetes ecosystem now includes:

- Service mesh: Istio, Linkerd, and Cilium for traffic management and observability

- GitOps: ArgoCD and Flux for declarative deployment automation

- Policy engines: OPA Gatekeeper and Kyverno for governance

- Secrets management: External Secrets Operator, HashiCorp Vault integration

- Observability: Prometheus, Grafana, Jaeger for metrics, dashboards, and tracing

For CTOs, Kubernetes represents both an opportunity and a commitment. The platform’s flexibility enables sophisticated deployment patterns, but that flexibility comes with complexity that requires dedicated platform engineering capabilities.

Strategic Platform Decisions

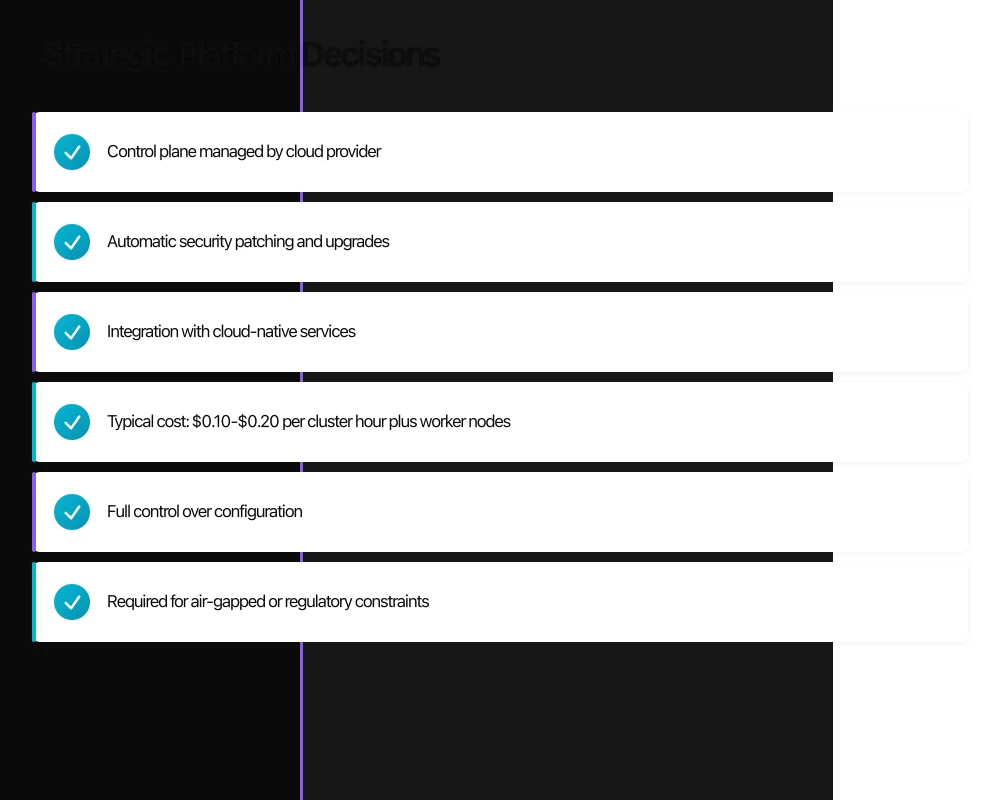

Build vs. Buy: The Managed Kubernetes Question

The most consequential container strategy decision is whether to operate self-managed Kubernetes or adopt a managed service. The calculus has shifted decisively toward managed services for most enterprises.

Managed Kubernetes Services (EKS, GKE, AKS):

- Control plane managed by cloud provider

- Automatic security patching and upgrades

- Integration with cloud-native services

- Typical cost: about $0.10 per cluster hour for the control plane (pricing varies by provider and support tier), plus worker nodes

Self-Managed Kubernetes (kubeadm, Rancher, OpenShift):

- Full control over configuration

- Required for air-gapped or regulatory constraints

- Significant operational overhead

- Requires dedicated platform team (typically 3-5 engineers minimum)
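To put the managed-service fee in proportion, the sketch below separates control-plane fees from worker-node costs. It assumes a flat hourly fee per cluster (the $0.10 default mirrors the standard-tier figure above; check current provider pricing) and an average of 730 hours per month. The node price is a caller-supplied placeholder, not a real instance price.

```python
HOURS_PER_MONTH = 730  # 8,760 hours per year / 12


def control_plane_monthly(clusters: int, hourly_fee: float = 0.10) -> float:
    """Managed control-plane fee per month in USD, assuming a flat hourly fee per cluster."""
    if clusters < 0 or hourly_fee < 0:
        raise ValueError("inputs must be non-negative")
    return round(clusters * hourly_fee * HOURS_PER_MONTH, 2)


def cluster_monthly(clusters: int, nodes: int, node_hourly_price: float,
                    hourly_fee: float = 0.10) -> dict:
    """Split monthly cost into control plane and worker nodes (node price supplied by caller)."""
    control = control_plane_monthly(clusters, hourly_fee)
    workers = round(nodes * node_hourly_price * HOURS_PER_MONTH, 2)
    return {"control_plane": control, "worker_nodes": workers, "total": round(control + workers, 2)}
```

Under these assumptions, even 20 clusters come to about $1,460 per month in control-plane fees, which is small next to the engineering time a self-managed control plane consumes.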

Gartner’s 2024 analysis indicates that 85% of enterprises now use managed Kubernetes services, up from 70% in 2022. The operational burden of self-managed Kubernetes—security patches, etcd backup, control plane high availability—consumes engineering capacity better spent on application development.

Recommendation: Unless regulatory requirements mandate self-managed infrastructure, start with managed Kubernetes. The three major cloud providers have achieved rough feature parity, making cloud selection primarily a function of existing relationships and complementary services.

Multi-Cloud Kubernetes: Strategy vs. Reality

Multi-cloud Kubernetes is frequently discussed in vendor presentations but rarely implemented successfully. The theoretical benefits—avoiding lock-in, optimizing costs, improving resilience—face practical challenges:

Technical complexity: Each cloud provider’s managed Kubernetes has unique networking, storage, and IAM integrations. Abstracting these differences requires significant engineering investment in platform tooling.

Operational overhead: Running production clusters across multiple clouds requires expertise in each platform’s operational model, doubling or tripling the knowledge requirements.

Actual portability: Applications depend on cloud-native services (databases, queues, storage) that differ across providers. Container portability doesn’t translate to application portability without significant abstraction layers.

A more pragmatic approach: standardize on one primary cloud provider while using Kubernetes as the abstraction layer that enables future portability if business requirements change. As a rule of thumb, this captures most of the flexibility benefit (the familiar 80/20 trade-off) at a fraction of the complexity.

Architecture Patterns for Production

The Platform Engineering Model

Successful enterprise container adoption requires treating Kubernetes as an internal platform with dedicated engineering support. This platform team:

- Provisions and maintains cluster infrastructure

- Establishes security baselines and policies

- Provides self-service capabilities for development teams

- Manages shared services (ingress, observability, secrets)

- Creates golden paths for common deployment patterns

The alternative—expecting each development team to become Kubernetes experts—leads to inconsistent implementations, security gaps, and frustrated developers.

According to Puppet’s 2024 State of DevOps Report, organizations with dedicated platform teams deploy 3x more frequently and experience 4x lower change failure rates than those without.

Namespace Strategy and Multi-Tenancy

Enterprise Kubernetes clusters typically serve multiple teams and applications. Namespace strategy determines isolation boundaries:

Single-tenant clusters: One cluster per team or application

- Simplest isolation model

- Higher infrastructure costs

- Best for strict compliance requirements

Multi-tenant namespaces: Multiple teams sharing clusters

- Resource efficiency through bin-packing

- Requires robust RBAC and network policies

- Appropriate for most enterprise scenarios

Hierarchical namespaces: Organizational hierarchy reflected in namespace structure

- Supports delegation of resource management

- Not built into Kubernetes itself; typically implemented with the Hierarchical Namespace Controller (HNC), a kubernetes-sigs add-on

For most enterprises, multi-tenant clusters with namespace isolation provide the right balance. Implement:

- NetworkPolicies to control pod-to-pod communication

- ResourceQuotas to prevent runaway consumption

- RBAC limiting team access to their namespaces

- Pod Security Admission or OPA Gatekeeper/Kyverno policies for runtime constraints
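The four controls above can be bundled into a per-tenant baseline. The sketch below emits a Kubernetes `v1` `List` (which `kubectl apply -f` accepts as JSON) containing a namespace labeled for the "restricted" Pod Security Standard, a default-deny NetworkPolicy, a ResourceQuota, and a RoleBinding to the built-in `edit` ClusterRole. The namespace name, group name, and quota values are hypothetical placeholders.

```python
import json


def tenant_baseline(namespace: str, team_group: str,
                    cpu: str = "10", memory: str = "20Gi") -> dict:
    """Baseline isolation objects for one tenant namespace, as a kubectl-appliable v1 List."""
    ns = {
        "apiVersion": "v1",
        "kind": "Namespace",
        "metadata": {
            "name": namespace,
            # Pod Security Admission rejects pods that violate the "restricted" standard.
            "labels": {"pod-security.kubernetes.io/enforce": "restricted"},
        },
    }
    deny_all = {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": "default-deny-all", "namespace": namespace},
        # Empty podSelector matches every pod; both policy types with no rules deny all traffic.
        "spec": {"podSelector": {}, "policyTypes": ["Ingress", "Egress"]},
    }
    quota = {
        "apiVersion": "v1",
        "kind": "ResourceQuota",
        "metadata": {"name": "team-quota", "namespace": namespace},
        "spec": {"hard": {"requests.cpu": cpu, "requests.memory": memory, "pods": "50"}},
    }
    binding = {
        "apiVersion": "rbac.authorization.k8s.io/v1",
        "kind": "RoleBinding",
        "metadata": {"name": "team-edit", "namespace": namespace},
        "subjects": [{"kind": "Group", "name": team_group,
                      "apiGroup": "rbac.authorization.k8s.io"}],
        # Binding a ClusterRole through a RoleBinding scopes its permissions to this namespace.
        "roleRef": {"kind": "ClusterRole", "name": "edit",
                    "apiGroup": "rbac.authorization.k8s.io"},
    }
    return {"apiVersion": "v1", "kind": "List", "items": [ns, deny_all, quota, binding]}


if __name__ == "__main__":
    print(json.dumps(tenant_baseline("team-payments", "payments-devs"), indent=2))
```

Two consequences are worth planning for: a default-deny egress policy also blocks DNS, so teams need explicit allow rules for the traffic they do require, and a quota on `requests.cpu` means every pod in the namespace must declare CPU requests (directly or via a LimitRange default).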

Stateful Workloads: The Final Frontier

Containers excel at stateless workloads. Stateful applications—databases, message queues, file systems—present additional challenges:

Persistent storage options:

- Cloud provider block storage (EBS, Persistent Disk, Azure Disk)

- Container Storage Interface (CSI) drivers for third-party storage

- Cloud provider file storage for shared access (EFS, Filestore, Azure Files)

Database considerations:

- Managed cloud databases often preferable to containerized databases

- If containerizing databases: use StatefulSets, implement proper backup strategies

- Consider operators (CloudNativePG, Zalando Postgres Operator) for PostgreSQL

- Redis, MongoDB, and Kafka all have mature Kubernetes operators
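For teams that do containerize a database, the core StatefulSet mechanics look roughly like the sketch below: a stable pod identity via `serviceName` and one PersistentVolumeClaim per replica via `volumeClaimTemplates`. It assumes the official `postgres` image, a separately created headless Service, and a Secret named `<name>-credentials`; all names and sizes are illustrative.

```python
import json


def postgres_statefulset(name: str = "pg", replicas: int = 1, storage: str = "20Gi",
                         image: str = "postgres:16") -> dict:
    """Minimal StatefulSet for a single PostgreSQL instance with persistent storage."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "StatefulSet",
        "metadata": {"name": name},
        "spec": {
            # Headless Service (created separately) gives each pod a stable DNS name.
            "serviceName": name,
            "replicas": replicas,
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [{
                        "name": "postgres",
                        "image": image,
                        "ports": [{"containerPort": 5432, "name": "postgres"}],
                        "env": [
                            # Credentials come from a Secret, never from the image.
                            {"name": "POSTGRES_PASSWORD", "valueFrom": {"secretKeyRef": {
                                "name": f"{name}-credentials", "key": "password"}}},
                            # Subdirectory avoids clashing with files at the volume root.
                            {"name": "PGDATA", "value": "/var/lib/postgresql/data/pgdata"},
                        ],
                        "volumeMounts": [{"name": "data", "mountPath": "/var/lib/postgresql/data"}],
                    }],
                },
            },
            # One PVC per replica (data-pg-0, data-pg-1, ...) that survives pod rescheduling.
            "volumeClaimTemplates": [{
                "metadata": {"name": "data"},
                "spec": {"accessModes": ["ReadWriteOnce"],
                         "resources": {"requests": {"storage": storage}}},
            }],
        },
    }


if __name__ == "__main__":
    print(json.dumps(postgres_statefulset(), indent=2))
```

Raising `replicas` here only creates independent instances; it does not set up replication, failover, or backups. That gap is exactly what operators such as CloudNativePG fill, which is why they are usually the better starting point.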

Strategic guidance: Start with stateless workloads. Graduate to stateful only after operational maturity is established. Prefer managed database services where available.

Security in Container Environments

The Shared Responsibility Model

Container security spans multiple layers, each with distinct ownership:

Image security (Development teams):

- Base image selection and maintenance

- Vulnerability scanning (Trivy, Snyk, Prisma Cloud)

- Minimizing image contents

- No secrets in images

Runtime security (Platform team):

- Pod security standards

- Network policies

- Secrets management

- Runtime threat detection (Falco, Sysdig)

Infrastructure security (Platform/Cloud team):

- Node hardening

- Control plane security

- Cluster networking

- IAM integration
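On the image-security side, scanning only helps if findings can block a release. The sketch below is a CI gate over a Trivy JSON report; it assumes the report layout produced by `trivy image --format json` (a top-level `Results` list whose entries may carry a `Vulnerabilities` list with `VulnerabilityID`, `PkgName`, and `Severity`). The set of blocking severities is a policy assumption.

```python
import json

# Assumption: the severities this pipeline refuses to ship; tune per policy.
BLOCKING_SEVERITIES = {"CRITICAL", "HIGH"}


def blocking_findings(report: dict, blocking: set = BLOCKING_SEVERITIES) -> list:
    """Return (vulnerability ID, package, severity) tuples at a blocking severity."""
    findings = []
    for result in report.get("Results") or []:
        # Targets without findings may omit "Vulnerabilities" or set it to null.
        for vuln in result.get("Vulnerabilities") or []:
            if vuln.get("Severity") in blocking:
                findings.append((vuln.get("VulnerabilityID"), vuln.get("PkgName"),
                                 vuln.get("Severity")))
    return findings


def gate(report_path: str) -> int:
    """Print blocking findings and return a CI exit code (1 fails the build)."""
    with open(report_path, encoding="utf-8") as fh:
        findings = blocking_findings(json.load(fh))
    for vuln_id, pkg, severity in findings:
        print(f"{severity}: {vuln_id} in {pkg}")
    return 1 if findings else 0
```

A pipeline step would call `sys.exit(gate("report.json"))` after the scan. Trivy's own `--severity` and `--exit-code` flags cover the simple case; a custom gate like this becomes useful once teams need reviewed exceptions or per-service thresholds.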

Supply Chain Security

The 2024 XZ Utils backdoor discovery reinforced the importance of software supply chain security. For containers, this means:

Image provenance: Verify image signatures using Sigstore/Cosign. Only deploy images from trusted registries.

Base image governance: Maintain a curated catalog of approved base images. Automate scanning and rebuilds when vulnerabilities are discovered.

Dependency management: Implement software bill of materials (SBOM) generation. Track CVEs in application dependencies, not just OS packages.

Admission control: Use OPA Gatekeeper or Kyverno to enforce policies at deployment time—image sources, resource limits, security contexts.
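The rules an admission policy typically enforces can be expressed compactly. The sketch below is plain Python that shows the logic only; in a cluster the same rules would be written as Gatekeeper constraints (Rego) or Kyverno policies and evaluated by the API server's admission webhook. The trusted registry prefix is a hypothetical placeholder.

```python
# Hypothetical internal registry; the trailing slash prevents matches such as
# "registry.example.com.attacker.io/...".
TRUSTED_REGISTRIES = ("registry.example.com/",)


def violations(pod: dict, trusted: tuple = TRUSTED_REGISTRIES) -> list:
    """Return policy violations for a Pod manifest; an empty list means admit."""
    problems = []
    spec = pod.get("spec", {})
    pod_ctx = spec.get("securityContext", {})
    for c in spec.get("initContainers", []) + spec.get("containers", []):
        name = c.get("name", "<unnamed>")
        image = c.get("image", "")
        if not image.startswith(trusted):
            problems.append(f"{name}: image {image!r} is not from a trusted registry")
        if "limits" not in c.get("resources", {}):
            problems.append(f"{name}: missing resource limits")
        # Container-level securityContext fields override pod-level ones.
        ctx = {**pod_ctx, **c.get("securityContext", {})}
        if ctx.get("runAsNonRoot") is not True:
            problems.append(f"{name}: runAsNonRoot must be true")
        if ctx.get("privileged") is True:
            problems.append(f"{name}: privileged containers are not allowed")
    return problems
```

Checking init containers alongside regular containers matters: a policy that inspects only `containers` leaves an easy bypass.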

The NIST Secure Software Development Framework (SSDF) and SLSA (Supply-chain Levels for Software Artifacts) provide structured approaches to supply chain security that map well to container workflows.

Migration Strategies

The Strangler Fig Pattern

Most enterprises aren’t greenfield. Containerization typically involves migrating existing applications. The strangler fig pattern—incrementally replacing monolith functionality with containerized services—reduces risk while delivering incremental value.

Phase 1: Lift and shift

- Containerize applications with minimal changes

- Establish operational baselines

- Build platform team capabilities

Phase 2: Re-platform

- Adopt cloud-native patterns (12-factor)

- Implement proper health checks, graceful shutdown

- Externalize configuration
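Proper health checks and graceful shutdown are the Phase 2 changes that most often require application code. The sketch below, using only the Python standard library, separates liveness (`/healthz`) from readiness (`/readyz`) and, on SIGTERM, fails readiness and keeps serving briefly before stopping. The endpoint paths, port, and 5-second drain window are illustrative assumptions; the drain window must fit inside the pod's `terminationGracePeriodSeconds`.

```python
import signal
import threading
from http.server import BaseHTTPRequestHandler, ThreadingHTTPServer


class AppState:
    """Shared flag flipped when the pod is asked to terminate."""

    def __init__(self) -> None:
        self.draining = threading.Event()


def make_handler(state: AppState):
    class Handler(BaseHTTPRequestHandler):
        def do_GET(self) -> None:
            if self.path == "/healthz":
                # Liveness: the process is up; failures here make the kubelet restart the container.
                self._reply(200, b"ok")
            elif self.path == "/readyz":
                # Readiness: report 503 while draining so the pod leaves Service endpoints.
                if state.draining.is_set():
                    self._reply(503, b"draining")
                else:
                    self._reply(200, b"ready")
            else:
                self._reply(404, b"not found")

        def _reply(self, status: int, body: bytes) -> None:
            self.send_response(status)
            self.send_header("Content-Type", "text/plain")
            self.send_header("Content-Length", str(len(body)))
            self.end_headers()
            self.wfile.write(body)

        def log_message(self, fmt, *args) -> None:
            pass  # silence per-request logging in this sketch

    return Handler


def begin_shutdown(server: ThreadingHTTPServer, state: AppState, drain_seconds: float = 5.0) -> None:
    """Start draining, then stop accepting requests after drain_seconds."""
    state.draining.set()
    # server.shutdown() blocks until serve_forever() returns, so it must run on another
    # thread; calling it from a signal handler on the serving thread would deadlock.
    threading.Timer(drain_seconds, server.shutdown).start()


def main(port: int = 8080) -> None:
    state = AppState()
    server = ThreadingHTTPServer(("0.0.0.0", port), make_handler(state))
    # Kubernetes sends SIGTERM first, then SIGKILL after terminationGracePeriodSeconds.
    signal.signal(signal.SIGTERM, lambda signum, frame: begin_shutdown(server, state))
    try:
        server.serve_forever()
    finally:
        server.server_close()
```

The container entrypoint would call `main()`. The brief drain period exists because endpoint removal propagates asynchronously; exiting immediately on SIGTERM can drop requests that are still being routed to the pod.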

Phase 3: Re-architect

- Decompose into microservices where beneficial

- Implement service mesh for traffic management

- Full cloud-native operations

Not every application warrants full re-architecture. The decision framework:

- High change frequency + high business value: Full cloud-native

- Low change frequency + high business value: Containerize, minimal changes

- Low business value: Consider retirement before investing in containerization
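The three-way framework is simple enough to apply consistently across a portfolio inventory. The sketch below encodes it directly; the threshold for "high change frequency" (four or more production changes per month) is a hypothetical cut-off, since the framework itself does not define one.

```python
from enum import Enum


class MigrationPath(Enum):
    RETIRE_REVIEW = "consider retirement before containerizing"
    CONTAINERIZE = "containerize with minimal changes"
    CLOUD_NATIVE = "full cloud-native re-architecture"


# Assumption: roughly weekly releases or more count as "high" change frequency.
HIGH_CHANGE_PER_MONTH = 4


def migration_path(changes_per_month: float, business_value: str) -> MigrationPath:
    """Map an application onto the framework; business_value is "high" or "low"."""
    if business_value not in ("high", "low"):
        raise ValueError("business_value must be 'high' or 'low'")
    if business_value == "low":
        # Low-value applications are reviewed for retirement regardless of change rate.
        return MigrationPath.RETIRE_REVIEW
    if changes_per_month >= HIGH_CHANGE_PER_MONTH:
        return MigrationPath.CLOUD_NATIVE
    return MigrationPath.CONTAINERIZE
```

For example, `migration_path(12, "high")` returns `MigrationPath.CLOUD_NATIVE`, while a stable but critical system such as `migration_path(1, "high")` is containerized with minimal changes.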

Application Assessment Framework

Before containerizing, evaluate each application:

Technical readiness:

- Can the application run on Linux containers? (Windows containers add complexity)

- Does it require local disk state?

- What are the scaling characteristics?

- Are there licensing implications?

Organizational readiness:

- Does the team have container experience?

- Are CI/CD pipelines container-aware?

- Is there operational runbook documentation?

Business case:

- What problem does containerization solve?

- What are the expected benefits (cost, agility, resilience)?

- What is the migration cost?

Prioritize applications where containerization solves a specific problem—scaling challenges, deployment friction, environment inconsistencies—rather than containerizing for its own sake.

Organizational Considerations

Skills and Team Structure

Container adoption is as much an organizational transformation as a technical one. Required capabilities:

Platform engineering:

- Kubernetes administration

- Infrastructure as Code (Terraform, Pulumi)

- GitOps and deployment automation

- Observability stack management

Development teams:

- Container packaging and optimization

- Cloud-native application patterns

- Troubleshooting in distributed systems

Security:

- Container threat models

- Supply chain security

- Runtime security monitoring

Training investments should precede major container initiatives. The CNCF’s Certified Kubernetes Administrator (CKA) and Certified Kubernetes Application Developer (CKAD) provide structured learning paths.

Cost Management

Container environments can reduce infrastructure costs through improved utilization, but this requires active management:

Right-sizing: Use Vertical Pod Autoscaler recommendations to identify over-provisioned workloads.
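Right-sizing can start without any automatic changes to running pods. The sketch below generates a VerticalPodAutoscaler in recommendation-only mode (`updateMode: "Off"`), so VPA publishes suggested requests in the object's status without evicting or resizing anything. It assumes the VPA components from the Kubernetes autoscaler project are installed, since VPA is not part of core Kubernetes; the Deployment and namespace names are placeholders.

```python
import json


def vpa_recommender(deployment: str, namespace: str) -> dict:
    """VerticalPodAutoscaler that only computes recommendations for a Deployment."""
    return {
        "apiVersion": "autoscaling.k8s.io/v1",
        "kind": "VerticalPodAutoscaler",
        "metadata": {"name": f"{deployment}-vpa", "namespace": namespace},
        "spec": {
            "targetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": deployment},
            # "Off": publish recommendations in status, never evict or resize pods.
            "updatePolicy": {"updateMode": "Off"},
        },
    }


if __name__ == "__main__":
    print(json.dumps(vpa_recommender("checkout", "team-payments"), indent=2))
```

After the recommender has observed a representative period of traffic, `kubectl describe vpa checkout-vpa -n team-payments` shows the target values, which can be compared with the requests currently declared in the Deployment.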

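Spot/preemptible instances: Kubernetes reschedules pods when a node is reclaimed, making spot instances viable for fault-tolerant, stateless workloads, especially when nodes are drained as soon as an interruption notice arrives.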

Bin-packing efficiency: Multi-tenant clusters improve utilization by packing workloads onto fewer nodes.

Development environments: Use namespace quotas to limit development cluster costs. Consider virtual clusters (vcluster) for isolation without dedicated infrastructure.

Monitor cluster efficiency metrics:

- Node CPU/memory utilization

- Pod-to-node ratios

- Unused persistent volumes

- Idle namespace resources
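Node utilization has two readings: how much capacity pod requests reserve (what the scheduler sees) and how much is actually used (from metrics). The sketch below computes the first from requests and a node's allocatable capacity. Its quantity parser handles only a common subset of the Kubernetes quantity grammar (millicores, plain numbers, and the `Ki`/`Mi`/`Gi`/`Ti` and `k`/`M`/`G`/`T` suffixes), which is an assumption for illustration.

```python
# Subset of Kubernetes quantity suffixes: binary (Ki..Ti) and decimal (k..T).
_MEMORY_SUFFIXES = {"Ki": 2**10, "Mi": 2**20, "Gi": 2**30, "Ti": 2**40,
                    "k": 10**3, "M": 10**6, "G": 10**9, "T": 10**12}


def cpu_millicores(quantity: str) -> int:
    """Parse a CPU quantity such as "500m", "2", or "0.25" into millicores."""
    if quantity.endswith("m"):
        return int(quantity[:-1])
    return round(float(quantity) * 1000)


def memory_bytes(quantity: str) -> int:
    """Parse a memory quantity such as "512Mi" or "1G" into bytes."""
    for suffix, factor in _MEMORY_SUFFIXES.items():
        if quantity.endswith(suffix):
            return round(float(quantity[: -len(suffix)]) * factor)
    return int(quantity)


def requested_fraction(requests: list, allocatable: str, parse) -> float:
    """Share of a node's allocatable capacity reserved by the given pod requests."""
    capacity = parse(allocatable)
    if capacity <= 0:
        raise ValueError("allocatable capacity must be positive")
    return sum(parse(q) for q in requests) / capacity
```

For instance, CPU requests of `500m`, `250m`, and `1` on a node with `3920m` allocatable reserve about 45% of it. A high requested fraction paired with low measured usage points at over-provisioned requests (a right-sizing target), while a low requested fraction across many nodes points at poor bin-packing.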

Looking Forward: The 2025 Trajectory

Several trends are shaping the container landscape this year:

WebAssembly (Wasm) workloads: containerd shims combined with Kubernetes RuntimeClass let lightweight, fast-starting Wasm workloads run alongside traditional containers. The SpinKube project is maturing rapidly.

AI/ML workloads: GPU scheduling, high-bandwidth networking, and large model serving are driving Kubernetes enhancements. The Kubeflow and KServe projects provide ML-specific orchestration.

Edge Kubernetes: K3s, KubeEdge, and cloud provider edge offerings enable container orchestration beyond the data center.

eBPF-based networking: Cilium's eBPF dataplane is increasingly replacing iptables-based CNI plugins and kube-proxy, offering improved performance and observability.

Strategic Recommendations

For CTOs developing container strategy in 2025:

- Default to managed Kubernetes unless regulatory constraints require otherwise. The operational overhead of self-managed clusters rarely justifies the control benefits.

- Invest in platform engineering. A dedicated team providing self-service capabilities to development teams is essential for successful adoption at scale.

- Start with stateless workloads. Build operational maturity before tackling stateful applications.

- Prioritize security from day one. Implement supply chain security, runtime policies, and network segmentation as foundational capabilities, not afterthoughts.

- Measure business outcomes, not just technical metrics. Container adoption should improve deployment frequency, reduce incident recovery time, or lower infrastructure costs; track these explicitly.

- Avoid premature optimization. Multi-cloud, service mesh, and advanced patterns add value in specific contexts but introduce complexity that isn’t warranted for every workload.

The organizations succeeding with containers treat them as an enabler for business objectives—faster time to market, improved resilience, operational efficiency—rather than as a goal in themselves. Technical excellence in container orchestration matters, but only in service of delivering value to the business.

For strategic guidance on enterprise container adoption and cloud-native transformation, connect with me to discuss your organization’s specific context and objectives.