Service Mesh Architecture: Enterprise Implementation Strategy for Microservices at Scale

Introduction

As enterprise microservices architectures mature beyond dozens into hundreds or thousands of services, a fundamental challenge emerges: how do you manage service-to-service communication when the number of possible call paths grows roughly with the square of the service count? Traffic management, security policies, observability, and reliability patterns that were manageable at small scale become operational nightmares without systematic approaches.

Service mesh architecture addresses this challenge by extracting networking concerns from application code into dedicated infrastructure. The promise is compelling: consistent security, observability, and traffic management across all services without requiring each development team to implement these capabilities independently.

Yet service mesh adoption remains fraught with complexity. Implementation failures are common. Performance overhead concerns persist. The operational burden can exceed the benefits for organisations unprepared for this infrastructure shift.

This guide provides the strategic framework enterprise CTOs need to evaluate, implement, and operate service mesh architecture successfully.

Understanding Service Mesh Architecture

The Core Abstraction

A service mesh consists of two primary components:

Data Plane: Lightweight proxy sidecars deployed alongside each service instance. These proxies intercept all network traffic, enabling policy enforcement, telemetry collection, and traffic manipulation without application code changes. Envoy proxy dominates this layer, powering Istio, AWS App Mesh, and numerous other implementations; Linkerd is a notable exception, using its own purpose-built Rust proxy.

Control Plane: Centralised management layer that configures proxy behaviour across the mesh. The control plane translates high-level policies into proxy configurations, manages service discovery, and aggregates telemetry data.

This architecture inverts the traditional networking model. Rather than applications reaching out through shared infrastructure, the infrastructure wraps around each application instance, creating a uniform networking layer regardless of underlying deployment topology.
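The control plane's translation role can be illustrated with a minimal sketch: one high-level policy is expanded into a concrete configuration for every sidecar. The names `MeshPolicy`, `ProxyConfig`, and `render_proxy_configs` are hypothetical and do not correspond to any real mesh API; the dependency map stands in for service-discovery data.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MeshPolicy:
    """High-level intent an operator declares once (hypothetical shape)."""
    require_mtls: bool
    request_timeout_s: float
    max_retries: int


@dataclass(frozen=True)
class ProxyConfig:
    """Concrete settings pushed to one sidecar (hypothetical shape)."""
    service: str
    upstreams: tuple
    require_mtls: bool
    request_timeout_s: float
    max_retries: int


def render_proxy_configs(policy, dependencies):
    """Expand one policy into a config per service.

    `dependencies` maps each service name to the set of services it calls.
    """
    return {
        service: ProxyConfig(
            service=service,
            upstreams=tuple(sorted(callees)),
            require_mtls=policy.require_mtls,
            request_timeout_s=policy.request_timeout_s,
            max_retries=policy.max_retries,
        )
        for service, callees in dependencies.items()
    }


policy = MeshPolicy(require_mtls=True, request_timeout_s=2.0, max_retries=2)
configs = render_proxy_configs(
    policy, {"checkout": {"payments", "cart"}, "cart": set()}
)
```

The point of the sketch is the fan-out: operators change `policy` in one place, and every proxy receives a consistent derived configuration.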

Capabilities Delivered

Service mesh provides several capability categories:

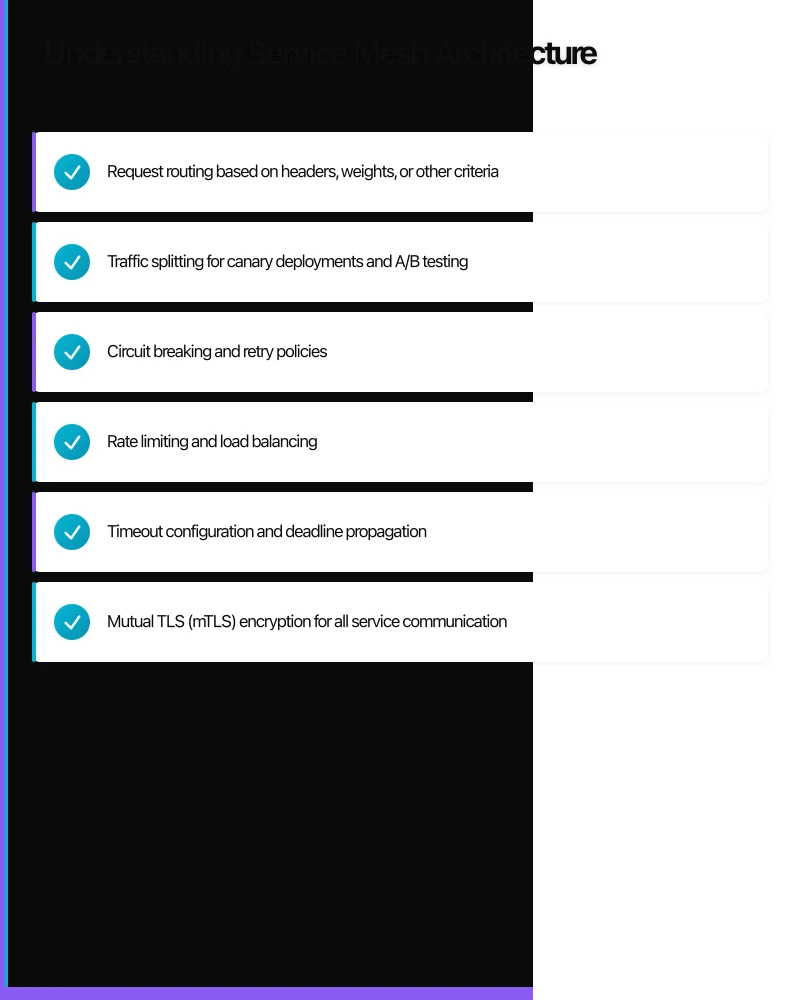

Traffic Management

- Request routing based on headers, weights, or other criteria

- Traffic splitting for canary deployments and A/B testing

- Circuit breaking and retry policies

- Rate limiting and load balancing

- Timeout configuration and deadline propagation
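Traffic splitting for a canary release comes down to a weighted random choice made per request. The sketch below is a simplified illustration, not how any particular proxy implements it; `pick_version` and the version labels are hypothetical.

```python
import random


def pick_version(weights, rng):
    """Pick a backend version with probability proportional to its weight.

    `weights` maps a version label to a non-negative weight, for example
    {"stable": 90, "canary": 10} to send about 10% of requests to the canary.
    """
    total = sum(weights.values())
    if total <= 0:
        raise ValueError("at least one version needs a positive weight")
    point = rng.random() * total  # uniform in [0, total)
    cumulative = 0.0
    chosen = None
    for version, weight in weights.items():
        if weight <= 0:
            continue  # versions with zero weight never receive traffic
        chosen = version
        cumulative += weight
        if point < cumulative:
            break
    return chosen


rng = random.Random(42)  # seeded so the example is repeatable
picks = [pick_version({"stable": 90, "canary": 10}, rng) for _ in range(10_000)]
canary_share = picks.count("canary") / len(picks)
print(f"canary share: {canary_share:.3f}")
```

Shifting the weights gradually (for example 99/1, then 90/10, then 50/50) is what turns this mechanism into a canary deployment.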

Security

- Mutual TLS (mTLS) encryption for all service communication

- Service identity and authentication

- Fine-grained authorisation policies

- Certificate management and rotation

- Network policy enforcement
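Fine-grained authorisation is usually expressed as allow rules evaluated against the caller's identity, with everything else denied. The following is a minimal sketch of that default-deny evaluation; `AllowRule` and `is_allowed` are hypothetical names, and real meshes offer richer matching than shown here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AllowRule:
    source: str         # caller identity, e.g. derived from its mTLS certificate
    destination: str    # service receiving the request
    methods: frozenset  # permitted HTTP methods
    path_prefix: str    # permitted URL path prefix


def is_allowed(rules, source, destination, method, path):
    """Default deny: a request passes only if some rule matches every field."""
    return any(
        rule.source == source
        and rule.destination == destination
        and method in rule.methods
        and path.startswith(rule.path_prefix)
        for rule in rules
    )


rules = [
    AllowRule("checkout", "payments", frozenset({"POST"}), "/charges"),
    AllowRule("reporting", "payments", frozenset({"GET"}), "/charges"),
]
```

Because the identity comes from the mesh rather than from the application, the same rule format can be applied uniformly to every service.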

Observability

- Distributed tracing with minimal application changes (services must still forward trace context headers from inbound to outbound requests)

- Standardised metrics across all services

- Access logging and audit trails

- Service topology visualisation

- Real-time traffic flow analysis

Reliability

- Health checking and outlier detection

- Automatic failover and retry logic

- Fault injection for chaos engineering

- Locality-aware load balancing
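Outlier detection can be pictured as a per-backend failure counter that temporarily removes a misbehaving instance from load balancing. The sketch below is a deliberately simplified model; the class name, the consecutive-failure rule, and the default thresholds are assumptions for illustration rather than any proxy's actual algorithm.

```python
class OutlierDetector:
    """Eject a backend after `threshold` consecutive failures (simplified)."""

    def __init__(self, threshold=5, ejection_s=30.0):
        self.threshold = threshold
        self.ejection_s = ejection_s
        self._failures = {}       # host -> consecutive failure count
        self._ejected_until = {}  # host -> time at which it may return

    def record(self, host, success, now):
        """Record the outcome of one request to `host` at time `now` (seconds)."""
        if success:
            self._failures[host] = 0
            return
        self._failures[host] = self._failures.get(host, 0) + 1
        if self._failures[host] >= self.threshold:
            self._ejected_until[host] = now + self.ejection_s
            self._failures[host] = 0

    def healthy_hosts(self, hosts, now):
        """Return the hosts that are not currently ejected."""
        return [h for h in hosts if self._ejected_until.get(h, 0.0) <= now]
```

Passing `now` explicitly keeps the sketch deterministic; a real proxy would read a monotonic clock and typically lengthen the ejection period for repeat offenders.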

The Enterprise Service Mesh Landscape

Istio: The Feature-Complete Option

Istio remains the most widely adopted service mesh for enterprise deployments. Its comprehensive feature set addresses virtually every service mesh use case, backed by Google, IBM, and a large contributor community.

Strengths:

- Complete feature coverage for traffic, security, and observability

- Strong integration with Kubernetes ecosystem

- Extensive documentation and community knowledge

- Managed offering on Google Cloud via Anthos Service Mesh (since rebranded as Cloud Service Mesh)

- Advanced traffic management capabilities

Considerations:

- Significant resource overhead (memory and CPU for sidecars and control plane)

- Steep learning curve for operators

- Configuration complexity can overwhelm teams

- Upgrade processes require careful planning

Istio suits enterprises with dedicated platform teams, complex traffic management requirements, and tolerance for operational overhead in exchange for capability depth.

Linkerd: The Lightweight Alternative

Linkerd, created and maintained by Buoyant, positions itself as the simple, lightweight alternative to Istio. Its design philosophy prioritises operational simplicity over feature exhaustiveness.

Strengths:

- Minimal resource footprint (on the order of 10-20 MB of memory per sidecar in typical deployments)

- Simpler operational model with fewer configuration options

- Faster adoption curve for teams

- Strong security focus with automatic mTLS

- Graduated CNCF project with proven production deployments

Considerations:

- Fewer advanced traffic management features

- Smaller ecosystem of integrations

- Less flexibility for complex routing scenarios

- Limited support outside Kubernetes

Linkerd suits enterprises prioritising simplicity, resource efficiency, and rapid adoption over advanced traffic manipulation capabilities.

Consul Connect: The Multi-Platform Option

HashiCorp Consul Connect extends Consul’s service discovery with mesh networking capabilities. Its architecture supports both Kubernetes and traditional VM deployments.

Strengths:

- Multi-platform support (Kubernetes, VMs, containers)

- Integration with HashiCorp ecosystem (Vault, Terraform, Nomad)

- Proven service discovery heritage

- Flexible deployment topologies

- Strong secrets management via Vault integration

Considerations:

- HashiCorp's 2023 move from the Mozilla Public License to the Business Source License requires legal and procurement evaluation

- Different operational model than Kubernetes-native meshes

- Observability features less mature than Istio

- Smaller community focused specifically on mesh capabilities

Consul Connect suits enterprises with heterogeneous infrastructure spanning Kubernetes and traditional deployments, particularly those already invested in HashiCorp tooling.

AWS App Mesh and Cloud Provider Options

Major cloud providers offer managed service mesh options:

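AWS App Mesh: Envoy-based mesh integrated with AWS services. Suits AWS-centric deployments seeking reduced operational burden. AWS has announced that App Mesh will reach end of support on 30 September 2026, so confirm the current roadmap and migration guidance before committing to it.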

Google Cloud Anthos Service Mesh (since rebranded as Cloud Service Mesh): Managed Istio deployment for Google Cloud and hybrid environments. Provides enterprise support and simplified operations.

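Azure Open Service Mesh: Lightweight, Envoy-based mesh for Azure Kubernetes Service. The upstream Open Service Mesh project has since been archived, and Microsoft now offers an Istio-based add-on for AKS, so treat Open Service Mesh as a legacy option.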

Managed options trade flexibility for operational simplicity. Evaluate whether your requirements fit within managed service constraints.

Strategic Decision Framework

When Service Mesh Adds Value

Service mesh investment makes sense when:

Scale Demands Consistency: Beyond 50-100 services, manually implementing security, observability, and reliability patterns in each service becomes unsustainable. Service mesh provides consistency without duplicated effort.

Zero-Trust Security Requirements: Regulatory or security requirements mandate encrypted, authenticated, and authorised service communication. mTLS across hundreds of services is impractical without mesh automation.

Advanced Traffic Management Needs: Complex deployment patterns (canary releases, traffic mirroring, header-based routing) across numerous services require traffic management capabilities that application load balancers cannot provide.

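Observability Gaps Exist: Understanding request flows across distributed systems requires distributed tracing and consistent metrics. Service mesh generates metrics and trace spans at the proxy without per-application instrumentation libraries, although applications must still propagate trace context headers for spans to join into a single trace.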

Multi-Cluster or Hybrid Architectures: Service communication spanning multiple Kubernetes clusters or bridging cloud and on-premises deployments benefits from mesh abstraction.

When Service Mesh Adds Complexity Without Value

Avoid service mesh when:

Scale Doesn’t Justify Overhead: Fewer than 20-30 services rarely justify service mesh complexity. Simpler approaches (API gateways, application-level libraries) provide adequate capability.

Teams Lack Platform Engineering Capability: Service mesh requires dedicated operational expertise. Organisations without platform teams to own mesh operations struggle with adoption.

Latency Requirements Are Extreme: Sidecar proxy hops add microseconds to milliseconds of latency. Ultra-low-latency applications may find this overhead unacceptable.

Budget Constraints Exist: Sidecar proxies consume memory and CPU for every service instance. At scale, resource costs add meaningfully to infrastructure spend.

Application Architectures Don’t Align: Monolithic applications or those not deployed on container orchestration platforms gain limited benefit from service mesh.
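To gauge the latency concern above, a rough estimate helps. In a sidecar mesh each service-to-service call crosses two proxies (the caller's outbound sidecar and the callee's inbound sidecar), so the overhead compounds along a call chain. The per-proxy figure in this sketch is purely illustrative; measure your own.

```python
def added_latency_ms(sequential_calls, per_proxy_ms):
    """Estimate mesh latency added to one end-to-end request.

    Each service-to-service call crosses two sidecars: the caller's
    outbound proxy and the callee's inbound proxy.
    """
    proxies_per_call = 2
    return sequential_calls * proxies_per_call * per_proxy_ms


# Illustrative figures only: 6 sequential calls, 0.5 ms per proxy traversal.
estimate_ms = added_latency_ms(sequential_calls=6, per_proxy_ms=0.5)
print(f"{estimate_ms:.1f} ms added")
```

With these assumed numbers the request gains 6 ms end to end, which is negligible for most web workloads but significant for a service with a single-digit-millisecond budget.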

Implementation Strategy

Phase 1: Foundation Building (Months 1-2)

Team Capability Development

Service mesh success requires platform engineering capability. Before implementation:

- Identify or hire team members with service mesh experience

- Invest in training for Kubernetes networking, Envoy proxy, and chosen mesh platform

- Establish relationships with vendor support or consulting partners

- Create sandbox environments for experimentation

Platform Selection

Evaluate mesh options against specific requirements:

- Deploy each candidate mesh in development environments

- Test core use cases (mTLS, traffic splitting, observability)

- Measure resource overhead and latency impact

- Assess operational complexity for your team’s capabilities

- Evaluate integration with existing tooling

Architecture Planning

Design mesh deployment topology:

- Single-cluster vs. multi-cluster mesh configurations

- Ingress gateway placement and configuration

- Observability stack integration (Prometheus, Jaeger, Grafana)

- Certificate authority selection and management

Phase 2: Non-Production Deployment (Months 3-4)

Development Environment Rollout

Deploy mesh infrastructure to development environments first:

- Install control plane components

- Configure default policies (mTLS mode, traffic management defaults)

- Integrate with CI/CD pipelines for sidecar injection

- Establish monitoring and alerting

Application Onboarding Process

Develop standardised onboarding procedures:

- Sidecar injection configuration (automatic vs. manual)

- Service account and identity configuration

- Health check and readiness probe adjustments

- Resource request/limit tuning

Observability Integration

Connect mesh telemetry to observability platforms:

- Configure Prometheus scraping for mesh metrics

- Deploy distributed tracing (Jaeger, Zipkin, or commercial alternatives)

- Build Grafana dashboards for mesh health

- Establish alerting thresholds

Phase 3: Staging and Pre-Production (Months 5-6)

Staging Environment Deployment

Extend mesh to staging environments with production-like configuration:

- Enable stricter security policies (strict mTLS, authorisation policies)

- Configure production-grade observability

- Test failure scenarios and recovery procedures

- Validate performance under load

Operations Playbook Development

Document operational procedures:

- Mesh upgrade procedures and rollback plans

- Troubleshooting guides for common issues

- Incident response procedures for mesh failures

- Performance tuning guidelines

Security Policy Definition

Develop security policies before production:

- Service-to-service authorisation rules

- Ingress and egress policies

- Certificate rotation schedules

- Audit logging requirements
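A certificate rotation schedule is often stated as "renew once a fixed fraction of the lifetime has elapsed", which leaves headroom if a renewal attempt fails. The sketch below shows that rule; the function name and the 50% default are assumptions, not a recommendation from any specific mesh.

```python
from datetime import datetime, timedelta, timezone


def needs_rotation(issued_at, expires_at, now, rotate_at_fraction=0.5):
    """True once `rotate_at_fraction` of the certificate lifetime has elapsed."""
    lifetime = expires_at - issued_at
    return now >= issued_at + lifetime * rotate_at_fraction


# Illustrative certificate with a 24-hour lifetime.
issued = datetime(2025, 1, 1, tzinfo=timezone.utc)
expires = issued + timedelta(hours=24)
print(needs_rotation(issued, expires, issued + timedelta(hours=13)))
```

Shorter lifetimes limit the damage from a leaked key but make the rotation path itself critical, so it deserves its own monitoring and alerting.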

Phase 4: Production Rollout (Months 7-9)

Gradual Service Onboarding

Onboard production services incrementally:

- Start with non-critical services to build operational confidence

- Expand to services with clear mesh benefits (complex traffic management needs)

- Monitor resource utilisation and latency impact

- Address issues before expanding further
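The "monitor, then expand" steps above can be turned into an explicit gate that is checked before each new batch of services is onboarded. This is a sketch under assumed thresholds; `may_expand_rollout` and its default limits are hypothetical and should be replaced with values derived from your own service-level objectives.

```python
def may_expand_rollout(baseline_p99_ms, meshed_p99_ms, meshed_error_rate,
                       max_added_latency_ms=5.0, max_error_rate=0.01):
    """Return (ok, reasons) for onboarding the next batch of services.

    The default thresholds are placeholders, not recommendations.
    """
    reasons = []
    added = meshed_p99_ms - baseline_p99_ms
    if added > max_added_latency_ms:
        reasons.append(f"p99 latency rose by {added:.1f} ms")
    if meshed_error_rate > max_error_rate:
        reasons.append(f"error rate {meshed_error_rate:.2%} exceeds limit")
    return (not reasons, reasons)


ok, reasons = may_expand_rollout(
    baseline_p99_ms=40.0, meshed_p99_ms=42.0, meshed_error_rate=0.001
)
```

Returning the reasons alongside the decision makes the gate useful in a pipeline log or a rollout review, not just as a pass/fail signal.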

Traffic Management Migration

Transition traffic management from existing solutions:

- Migrate ingress traffic through mesh gateways

- Implement traffic policies (retries, timeouts, circuit breaking)

- Configure advanced routing for deployment patterns

- Validate behaviour under failure conditions
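Retries and timeouts interact: a retry policy is only safe if its worst case still fits inside the caller's deadline. The sketch below computes an exponential backoff schedule and checks that worst case. The function names and default values are assumptions for illustration.

```python
def backoff_delays(max_retries, base_s=0.025, cap_s=1.0):
    """Delay before each retry: base, 2*base, 4*base, ... capped at cap_s."""
    return [min(cap_s, base_s * 2 ** attempt) for attempt in range(max_retries)]


def fits_deadline(per_try_timeout_s, max_retries, deadline_s,
                  base_s=0.025, cap_s=1.0):
    """Worst case: every attempt times out and every backoff is waited out."""
    attempts = max_retries + 1  # the original try plus each retry
    worst_case = attempts * per_try_timeout_s + sum(
        backoff_delays(max_retries, base_s, cap_s)
    )
    return worst_case <= deadline_s


print(backoff_delays(4))
print(fits_deadline(per_try_timeout_s=0.5, max_retries=2, deadline_s=2.0))
```

Running this kind of check per route helps catch policies where retries at several layers multiply into a request that outlives its caller.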

Security Hardening

Enable production security features:

- Enforce strict mTLS across all services

- Implement authorisation policies

- Configure certificate rotation

- Enable audit logging

Phase 5: Optimisation and Expansion (Ongoing)

Performance Tuning

Optimise mesh performance based on production data:

- Right-size sidecar resource allocations

- Tune connection pooling and timeout configurations

- Optimise control plane resource utilisation

- Evaluate and implement performance improvements from mesh updates

Feature Expansion

Extend mesh capabilities:

- Implement advanced traffic patterns (traffic mirroring, fault injection)

- Expand multi-cluster mesh connectivity

- Integrate with external services via egress gateways

- Adopt new mesh features as they mature

Operational Considerations

Resource Planning

Service mesh adds infrastructure overhead. Plan for:

Sidecar Resources

- Memory: 50-150 MB per sidecar for Envoy-based meshes; lighter proxies such as Linkerd's use less (varies by mesh, configuration, and mesh size)

- CPU: 0.1-0.5 cores per sidecar under load

- Multiply by total pod count for aggregate impact
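The multiplication is worth doing explicitly, because the aggregate is easy to underestimate. The sketch below applies the per-sidecar ranges listed above to a fleet size; the 2,000-pod figure is an illustrative assumption.

```python
def sidecar_overhead(pod_count, memory_mb=(50, 150), cpu_cores=(0.1, 0.5)):
    """Return (low, high) aggregate sidecar memory in GB and CPU in cores."""
    mem_low, mem_high = memory_mb
    cpu_low, cpu_high = cpu_cores
    return {
        "memory_gb": (pod_count * mem_low / 1000, pod_count * mem_high / 1000),
        "cpu_cores": (pod_count * cpu_low, pod_count * cpu_high),
    }


# Illustrative fleet size: 2,000 pods, each with one sidecar.
estimate = sidecar_overhead(2000)
low_gb, high_gb = estimate["memory_gb"]
low_cpu, high_cpu = estimate["cpu_cores"]
print(f"memory: {low_gb:.0f}-{high_gb:.0f} GB, CPU: {low_cpu:.0f}-{high_cpu:.0f} cores")
```

For 2,000 pods this works out to roughly 100-300 GB of memory and 200-1,000 cores under load, before counting the control plane or observability stack.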

Control Plane Resources

- Istiod or equivalent: plan for roughly 1-4 GB memory and 2-4 CPU cores as a starting allocation

- Scale with service count and policy complexity

- Plan for high availability deployment

Observability Infrastructure

- Prometheus storage for mesh metrics (significant volume)

- Tracing infrastructure storage and processing

- Log aggregation capacity

Upgrade Strategy

Service mesh upgrades require careful planning:

Canary Upgrades: Deploy the new mesh version alongside the existing one, then migrate services to it gradually.

Testing Requirements: Validate upgrades thoroughly in non-production before production deployment.

Rollback Capability: Maintain ability to revert to previous versions. Test rollback procedures.

Version Compatibility: Ensure compatibility between control plane and data plane versions during upgrades.
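The version compatibility check can be automated as a simple skew test between the control plane and each proxy. This sketch assumes `major.minor.patch` version strings and a hypothetical `max_minor_skew` limit; the version numbers are illustrative, and the real supported skew should come from your mesh's documentation.

```python
def parse_minor(version):
    """Return (major, minor) from a version string such as "1.22.3"."""
    major, minor = version.split(".")[:2]
    return int(major), int(minor)


def skew_ok(control_plane, data_plane, max_minor_skew=1):
    """Check that a proxy is not ahead of, or too far behind, the control plane.

    `max_minor_skew` is a placeholder; use the limit your mesh documents.
    """
    cp_major, cp_minor = parse_minor(control_plane)
    dp_major, dp_minor = parse_minor(data_plane)
    if cp_major != dp_major:
        return False
    return 0 <= cp_minor - dp_minor <= max_minor_skew


print(skew_ok("1.22.3", "1.21.0"))
```

Running the check across all proxies before and during an upgrade shows which workloads still need a restart to pick up the new sidecar.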

Troubleshooting Patterns

Common service mesh issues and resolution approaches:

Connection Failures: Check mTLS certificate validity, service account configuration, and authorisation policies. Mesh proxies log connection details.

Latency Increases: Examine sidecar resource utilisation, connection pooling configuration, and traffic routing. Distributed tracing identifies bottlenecks.

Configuration Propagation: Propagation from control plane to data plane can lag. Verify configuration status in the proxies themselves, not just in the control plane.

Resource Exhaustion: Sidecar proxies can exhaust connections or memory under load. Monitor proxy metrics and adjust resource allocations.
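For the configuration propagation case above, the useful question is which proxies have not yet acknowledged the version the control plane believes is current. A minimal sketch, assuming you can collect an acknowledged configuration version per proxy (the data shape and names here are hypothetical):

```python
def stale_proxies(control_plane_version, proxy_versions):
    """Return proxies whose acknowledged config differs from the control plane's.

    `proxy_versions` maps a proxy name to the last config version it acknowledged.
    """
    return sorted(
        name
        for name, version in proxy_versions.items()
        if version != control_plane_version
    )


acked = {"cart-7f9c": "v42", "checkout-5d2a": "v41", "payments-9b1e": "v42"}
print(stale_proxies("v42", acked))
```

A proxy that stays on the stale list well beyond normal propagation time is a stronger signal than a control plane that reports success.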

Measuring Success

Technical Metrics

Reliability

- Service-to-service error rates

- Retry and circuit breaker activation frequency

- Timeout and deadline compliance

- Failover success rates

Performance

- Latency overhead (mesh vs. direct communication)

- Sidecar resource utilisation

- Control plane latency for configuration updates
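Latency overhead is best compared at a tail percentile rather than the mean, since proxy effects often show up in the slowest requests. The sketch below uses a nearest-rank percentile over two sample sets; the function names are hypothetical and the samples would come from your own load tests.

```python
import math


def percentile(samples, pct):
    """Nearest-rank percentile of a non-empty list of samples."""
    ordered = sorted(samples)
    rank = max(1, math.ceil(pct / 100 * len(ordered)))
    return ordered[rank - 1]


def latency_overhead_ms(direct_ms, meshed_ms, pct=99):
    """Difference between meshed and direct latency at the given percentile."""
    return percentile(meshed_ms, pct) - percentile(direct_ms, pct)


direct = [1, 2, 3, 4, 5, 6, 7, 8, 9, 10]   # illustrative samples, in ms
meshed = [value + 1 for value in direct]   # same traffic with 1 ms added
print(latency_overhead_ms(direct, meshed))
```

Collect both sample sets under the same load profile; otherwise the difference reflects the workload rather than the mesh.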

Security

- mTLS coverage percentage

- Certificate rotation success rates

- Authorisation policy enforcement

- Security incident detection
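mTLS coverage can be computed directly from observed connections, which makes it a good first security metric to track during rollout. The sketch assumes each connection record carries a boolean indicating whether it used mTLS; the tuple shape and function name are hypothetical.

```python
def mtls_coverage_percent(connections):
    """Percentage of observed connections that used mTLS.

    `connections` is an iterable of (source, destination, is_mtls) tuples.
    """
    connections = list(connections)
    if not connections:
        return 0.0
    secured = sum(1 for _, _, is_mtls in connections if is_mtls)
    return 100.0 * secured / len(connections)


observed = [
    ("checkout", "payments", True),
    ("checkout", "cart", True),
    ("reporting", "payments", True),
    ("legacy-batch", "payments", False),
]
print(f"{mtls_coverage_percent(observed):.1f}% of connections use mTLS")
```

Listing the unencrypted source and destination pairs alongside the percentage shows exactly which services block a move to strict mTLS.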

Operational Metrics

Adoption

- Services onboarded to mesh

- Traffic percentage flowing through mesh

- Team satisfaction with mesh operations

Efficiency

- Time to onboard new services

- Time to implement traffic policies

- Incident resolution time for mesh-related issues

Conclusion

Service mesh architecture offers compelling capabilities for enterprises operating microservices at scale. The consistent handling of security, observability, and traffic management across hundreds of services addresses real complexity that alternatives struggle to match.

Yet service mesh is not a universal solution. The operational overhead, resource costs, and implementation complexity require serious consideration. Organisations without dedicated platform engineering teams, sufficient scale, or clear capability requirements may find simpler approaches more appropriate.

For enterprises where service mesh fits, success requires:

- Honest assessment of scale, requirements, and team capabilities

- Careful platform selection aligned with specific needs

- Phased implementation building operational confidence

- Investment in team training and operational procedures

- Continuous optimisation based on production experience

The service mesh landscape continues to evolve. Sidecar-less architectures, such as Istio's ambient mode and eBPF-based data planes, show promise for reducing overhead. Cloud providers continue to reshape their managed offerings, and open source projects mature.

The fundamentals, however, remain constant: service mesh succeeds when it solves real problems for organisations prepared to operate it. Start with the problem. Validate the fit. Implement methodically.

Sources

- Istio. (2025). Istio Documentation. Istio Project. https://istio.io/latest/docs/

- Linkerd. (2025). Linkerd Documentation. Buoyant. https://linkerd.io/2.15/overview/

- HashiCorp. (2025). Consul Connect Service Mesh. HashiCorp. https://www.consul.io/docs/connect

- CNCF. (2025). Service Mesh Interface Specification. Cloud Native Computing Foundation. https://smi-spec.io/

- Burns, B., Grant, B., Oppenheimer, D., Brewer, E., & Wilkes, J. (2016). Borg, Omega, and Kubernetes. ACM Queue. https://queue.acm.org/detail.cfm?id=2898444

- Envoy Proxy. (2025). Envoy Documentation. Envoy Project. https://www.envoyproxy.io/docs/envoy/latest/
