Cloud-Native Networking: Enterprise Service Mesh and Beyond

Cloud-native architectures fundamentally change networking requirements. Microservices create far more service-to-service connections than monolithic applications, because calls that once happened in-process now cross the network. Containerized workloads appear and disappear dynamically. Multi-cloud and hybrid deployments span network boundaries. Traditional enterprise networking approaches designed for stable, long-lived connections between known endpoints cannot adequately address these realities.

For enterprise CTOs, cloud-native networking represents both a technical evolution and a strategic capability. Organizations that master modern networking patterns can deploy distributed systems with confidence, implement zero-trust security effectively, and observe complex system behavior. Those relying on legacy networking approaches face mounting operational burden and security gaps.

The Cloud-Native Networking Challenge

Understanding the challenges cloud-native architectures create for networking clarifies why new approaches are necessary.

Dynamic Service Discovery

Traditional networking assumes relatively stable endpoints configured in advance. Load balancers route to known server addresses. Firewalls allow traffic between defined IP ranges. DNS provides stable name-to-address mapping.

Cloud-native environments violate these assumptions:

Ephemeral Containers: Container instances start, move between nodes, and terminate within seconds. IP addresses cannot be managed manually or configured in advance.

Dynamic Scaling: Service instance counts change continuously based on load. Static load balancer configurations cannot keep pace.

Multi-Tenancy: Multiple applications share infrastructure, with workloads moving across nodes. Network policies must accommodate this dynamism.

Service discovery mechanisms (Kubernetes DNS, Consul, etc.) provide dynamic endpoint resolution, but consuming services still need mechanisms to connect reliably.
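To make that gap concrete, here is a minimal Python sketch of what a consuming service (or its proxy) has to do with the endpoints a discovery system returns: keep a live set that changes as instances come and go, and spread requests across whatever is currently registered. The `EndpointSet` class and its methods are hypothetical illustrations, not the API of Kubernetes, Consul, or any other registry.

```python
import itertools
import threading
from typing import List


class EndpointSet:
    """Hypothetical client-side view of one service's current endpoints.

    A discovery integration (for example a watch on Kubernetes
    EndpointSlices or a Consul health query) would call update() whenever
    instances are added or removed.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()
        self._endpoints: List[str] = []
        self._counter = itertools.count()

    def update(self, endpoints: List[str]) -> None:
        # Replace the whole set at once; terminated pods simply disappear.
        with self._lock:
            self._endpoints = sorted(set(endpoints))

    def pick(self) -> str:
        # Round-robin across whatever is registered at this moment.
        with self._lock:
            if not self._endpoints:
                raise LookupError("no endpoints available")
            return self._endpoints[next(self._counter) % len(self._endpoints)]
```

After `update(["10.0.1.5:8080", "10.0.2.7:8080"])`, successive `pick()` calls alternate between the two addresses, and after the next `update` requests flow only to the new set. What the sketch leaves out (health checking, connection reuse, retrying when a just-removed instance is still cached) is the reliability work the rest of this section assigns to the network layer.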

East-West Traffic Dominance

Traditional enterprise networking focused on north-south traffic: clients connecting to services through perimeter controls. Firewalls, load balancers, and security appliances sat at network boundaries.

Microservices invert this pattern. A single user request may trigger dozens of inter-service calls. East-west traffic between services dramatically exceeds north-south traffic from clients.

This shift has implications:

Traffic Volume: Internal networking must handle far more connections than perimeter load balancers were designed for.

Security Model: Perimeter security is insufficient when most traffic flows within the perimeter.

Observability: Understanding system behavior requires visibility into east-west traffic patterns.

Distributed System Complexity

Microservices create distributed system challenges that networking must address:

Partial Failures: Any service may fail independently. Calling services need resilience patterns: timeouts, retries, circuit breakers.

Latency Management: Network latency between services accumulates. Efficient routing and connection management are essential.

Consistency Challenges: Distributed transactions span services. Network reliability affects data consistency.

These concerns previously lived in application code. Modern networking infrastructure can handle them consistently.
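A minimal sketch of one such pattern, a consecutive-failure circuit breaker, shows the kind of logic that moves out of application code. The class name, thresholds, and injectable clock are illustrative assumptions; meshes typically configure comparable behavior declaratively (for example Envoy outlier detection, exposed in Istio through a DestinationRule) rather than through a Python API.

```python
import time
from typing import Callable, Optional, TypeVar

T = TypeVar("T")


class CircuitOpenError(RuntimeError):
    """Raised when a call is rejected because the breaker is open."""


class CircuitBreaker:
    """Hypothetical consecutive-failure circuit breaker (not thread-safe).

    closed:    calls pass through and failures are counted
    open:      calls fail fast until reset_timeout seconds have passed
    half-open: the next call is a trial; success closes, failure re-opens
    """

    def __init__(self, failure_threshold: int = 5, reset_timeout: float = 30.0,
                 clock: Callable[[], float] = time.monotonic) -> None:
        self.failure_threshold = failure_threshold
        self.reset_timeout = reset_timeout
        self._clock = clock
        self._failures = 0
        self._opened_at: Optional[float] = None

    @property
    def state(self) -> str:
        if self._opened_at is None:
            return "closed"
        if self._clock() - self._opened_at >= self.reset_timeout:
            return "half-open"
        return "open"

    def call(self, fn: Callable[[], T]) -> T:
        if self.state == "open":
            raise CircuitOpenError("circuit open; failing fast")
        try:
            result = fn()
        except Exception:
            self._failures += 1
            # A failed half-open trial, or too many failures while closed, opens the circuit.
            if self._opened_at is not None or self._failures >= self.failure_threshold:
                self._opened_at = self._clock()
            raise
        # Any success closes the circuit and clears the failure count.
        self._failures = 0
        self._opened_at = None
        return result
```

The injectable `clock` lets the state transitions be exercised without sleeping; a production implementation would also need locking and a cap on concurrent half-open trials.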

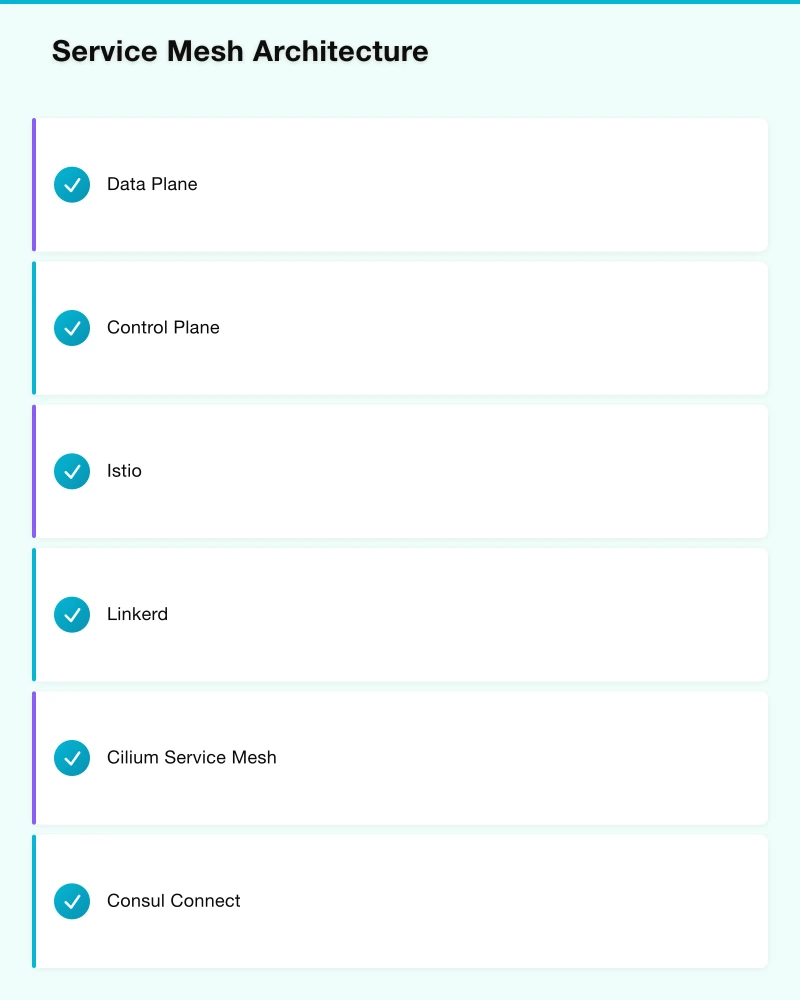

Service Mesh Architecture

Service mesh has emerged as the primary architectural pattern for cloud-native networking, providing consistent networking capabilities across diverse services.

Core Concept

In its classic form, a service mesh deploys a proxy instance alongside every service instance (the sidecar pattern). All inbound and outbound traffic passes through the proxy, which implements networking capabilities transparently:

- Traffic Management: Routing, load balancing, traffic splitting

- Security: Mutual TLS, authorization policies

- Observability: Metrics, tracing, logging

- Resilience: Timeouts, retries, circuit breakers

Applications communicate as if talking directly to other services. The mesh handles networking complexity.

Architectural Components

Data Plane: The proxies handling actual traffic. Envoy is the most widely used proxy at this layer, providing high-performance proxying with extensive capabilities.

Control Plane: Management components configuring the data plane. Accepts high-level policy, translates it into proxy configuration, and distributes that configuration to proxy instances.

Service Mesh Implementations

Istio: One of the most widely adopted service meshes, providing comprehensive capabilities. Istio’s control plane (istiod) manages Envoy sidecars. Its rich feature set comes at the cost of operational complexity.

Linkerd: Lightweight alternative emphasizing simplicity and performance. Uses a purpose-built proxy written in Rust (linkerd2-proxy) rather than general-purpose Envoy, keeping resource overhead lower.

Cilium Service Mesh: eBPF-based approach that handles much of the datapath in the Linux kernel and uses a shared per-node Envoy proxy for Layer 7 features instead of per-pod sidecars. Strong performance with reduced operational overhead.

Consul Connect: HashiCorp’s mesh built on Consul’s service discovery. Strong multi-datacenter capabilities for hybrid environments.

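AWS App Mesh (which AWS has scheduled for end of support), Google Cloud Service Mesh (formerly Traffic Director), and the Istio-based service mesh add-on for Azure Kubernetes Service (AKS): Cloud provider managed meshes integrating with platform services.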

Traffic Management Capabilities

Service mesh traffic management enables sophisticated deployment patterns:

Intelligent Routing: Route traffic based on headers, paths, weights, or custom criteria. Enable A/B testing by routing specific users to new versions.

Traffic Splitting: Gradually shift traffic between versions for canary deployments. Reduce blast radius of new releases.

Traffic Mirroring: Copy production traffic to test environments without affecting users. Validate new versions against real traffic patterns.

Rate Limiting: Protect services from overload by limiting request rates.

Retries and Timeouts: Configure retry policies and timeout boundaries consistently across services.
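The routing and splitting decisions above can be sketched as the choice a proxy makes for each request: the first matching route wins, and a weighted draw picks the backend within it. The `Route` and `WeightedBackend` types, the `x-canary` header, and the 90/10 weights are hypothetical examples, not Istio or Linkerd configuration; a real mesh expresses the same intent declaratively (for example in an Istio VirtualService or a Gateway API HTTPRoute) and evaluates it inside the proxy.

```python
import random
from dataclasses import dataclass
from typing import Mapping, Optional, Sequence, Tuple


@dataclass(frozen=True)
class WeightedBackend:
    name: str    # e.g. a version subset such as "reviews-v2" (illustrative)
    weight: int  # relative weight; weights need not sum to 100


@dataclass(frozen=True)
class Route:
    backends: Sequence[WeightedBackend]
    header_match: Optional[Tuple[str, str]] = None  # (header name, exact value)


def choose_backend(routes: Sequence[Route], headers: Mapping[str, str],
                   rng: random.Random) -> str:
    """Return the backend for one request; the first matching route wins."""
    lowered = {k.lower(): v for k, v in headers.items()}
    for route in routes:
        if route.header_match is not None:
            name, value = route.header_match
            if lowered.get(name.lower()) != value:
                continue  # header rule did not match; try the next route
        names = [b.name for b in route.backends]
        weights = [b.weight for b in route.backends]
        return rng.choices(names, weights=weights, k=1)[0]
    raise LookupError("no route matched")


# Hypothetical canary: requests with "x-canary: true" always reach v2,
# all other traffic is split 90/10 between v1 and v2.
ROUTES = [
    Route([WeightedBackend("reviews-v2", 1)], header_match=("x-canary", "true")),
    Route([WeightedBackend("reviews-v1", 90), WeightedBackend("reviews-v2", 10)]),
]
```

Passing an explicit `random.Random` keeps the split reproducible in tests. Per-request random splitting can move a single user between versions; when that matters, hashing a stable key such as a user ID is a common alternative.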

Security Capabilities

Service mesh provides foundational security capabilities:

Mutual TLS (mTLS): Automatic encryption and authentication between all services. Certificates managed automatically without application involvement.

Service Identity: Strong cryptographic identity for services based on certificates rather than network location.

Authorization Policies: Fine-grained access control specifying which services can communicate. Implement principle of least privilege for service communication.

Certificate Management: Automatic certificate provisioning and rotation. Short-lived certificates limit exposure if a key is compromised and eliminate the manual certificate management burden.
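Meshes such as Istio encode a workload’s identity in its certificate as a SPIFFE ID of the form `spiffe://<trust-domain>/ns/<namespace>/sa/<service-account>`. A minimal sketch of turning that URI (as found in the peer certificate’s URI SAN) into a structured identity that policies can reference follows; the `WorkloadIdentity` type and `parse_spiffe_id` helper are hypothetical, and the sketch assumes the TLS handshake has already verified the certificate.

```python
from dataclasses import dataclass
from urllib.parse import urlparse


@dataclass(frozen=True)
class WorkloadIdentity:
    trust_domain: str
    namespace: str
    service_account: str


def parse_spiffe_id(uri: str) -> WorkloadIdentity:
    """Parse an Istio-style SPIFFE ID: spiffe://<trust-domain>/ns/<ns>/sa/<sa>.

    Assumes the URI was taken from a peer certificate that the TLS handshake
    already verified; parsing on its own proves nothing about the caller.
    """
    parsed = urlparse(uri)
    if parsed.scheme != "spiffe" or not parsed.netloc:
        raise ValueError(f"not a SPIFFE ID: {uri!r}")
    if parsed.query or parsed.fragment:
        raise ValueError(f"SPIFFE IDs carry no query or fragment: {uri!r}")
    parts = parsed.path.strip("/").split("/")
    if len(parts) != 4 or parts[0] != "ns" or parts[2] != "sa" or not all(parts):
        raise ValueError(f"unexpected SPIFFE path layout: {parsed.path!r}")
    return WorkloadIdentity(parsed.netloc, parts[1], parts[3])
```

Policies can then refer to a namespace and service account rather than to IP addresses, which is what identity based on certificates instead of network location means in practice.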

Observability Capabilities

Service mesh generates rich telemetry:

Metrics: Request-level golden signals (latency, traffic, errors) for every service interaction, without application instrumentation. Saturation, the fourth golden signal, still comes from resource metrics.

Distributed Tracing: Proxies emit spans for each request and export them to Jaeger, Zipkin, and other tracing systems. Spans join into end-to-end traces only if applications forward trace context headers on their outbound calls.

Access Logging: Detailed logs of every request including timing, response codes, and metadata.
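As an illustration of how consistent request metrics can be derived from that telemetry, the following minimal sketch computes request rate, error rate, and a nearest-rank p99 latency from proxy access-log records. The `AccessLogRecord` fields are a simplified, hypothetical schema rather than Envoy’s actual access log format, and counting only 5xx responses as errors is an assumption. Saturation cannot be derived from request logs alone.

```python
import math
from dataclasses import dataclass
from typing import Dict, Optional, Sequence


@dataclass(frozen=True)
class AccessLogRecord:
    # Simplified, hypothetical schema; real proxy logs carry many more fields.
    source: str
    destination: str
    status: int
    duration_ms: float


def golden_signals(records: Sequence[AccessLogRecord],
                   window_s: float) -> Dict[str, Optional[float]]:
    """Traffic, error rate, and p99 latency for one window of records."""
    if not records:
        return {"rps": 0.0, "error_rate": 0.0, "p99_ms": None}
    errors = sum(1 for r in records if r.status >= 500)  # assumption: 5xx = error
    latencies = sorted(r.duration_ms for r in records)
    # Nearest-rank percentile: smallest value covering 99% of the samples.
    rank = math.ceil(0.99 * len(latencies))
    return {
        "rps": len(records) / window_s,
        "error_rate": errors / len(records),
        "p99_ms": latencies[rank - 1],
    }
```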

Zero Trust Network Architecture

Service mesh enables zero trust networking principles increasingly mandated for enterprise security.

Zero Trust Principles

Zero trust assumes no implicit trust based on network location. Every access request must be verified:

Verify Explicitly: Authenticate and authorize every request based on all available data.

Least Privilege Access: Limit access to minimum required for the specific task.

Assume Breach: Design assuming adversaries have network access. Minimize blast radius.

Service Mesh Zero Trust Implementation

Network Microsegmentation: Authorization policies restrict service-to-service communication to explicitly permitted paths. Attackers compromising one service cannot freely access others.

Encrypted Communications: mTLS ensures traffic cannot be read or tampered with in transit, even on compromised networks.

Service Identity Verification: Certificate-based identity prevents service impersonation. Services cannot claim to be other services without appropriate credentials.

Continuous Verification: Every request is authenticated and authorized, not just the initial connection.
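The microsegmentation and continuous-verification points combine into a simple evaluation rule: deny by default, allow only explicitly listed paths, and evaluate on every request. The sketch below models that rule; the `AllowRule` structure is a hypothetical simplification loosely inspired by ALLOW rules in Istio’s AuthorizationPolicy, not its actual schema or semantics, and the caller identity is assumed to come from an already verified mTLS peer certificate.

```python
from dataclasses import dataclass
from fnmatch import fnmatchcase
from typing import FrozenSet, Sequence


@dataclass(frozen=True)
class AllowRule:
    destination: str          # service receiving the request
    sources: FrozenSet[str]   # verified caller identities (e.g. SPIFFE IDs)
    methods: FrozenSet[str]   # permitted HTTP methods, upper-case
    path_glob: str = "*"      # shell-style path pattern


def is_allowed(rules: Sequence[AllowRule], source: str, destination: str,
               method: str, path: str) -> bool:
    """Default deny: a request passes only if some rule explicitly allows it.

    Evaluated per request, so a caller holding a valid certificate still
    reaches only the services, methods, and paths granted to its identity.
    """
    return any(
        rule.destination == destination
        and source in rule.sources
        and method.upper() in rule.methods
        and fnmatchcase(path, rule.path_glob)
        for rule in rules
    )


# Hypothetical policy: only the frontend may read reviews.
RULES = [
    AllowRule(
        destination="reviews",
        sources=frozenset({"spiffe://cluster.local/ns/shop/sa/frontend"}),
        methods=frozenset({"GET"}),
        path_glob="/reviews/*",
    ),
]
```

Because the rule set is empty by default, anything an attacker reaches from a compromised workload is bounded by what that workload’s identity was explicitly granted.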

Beyond Network Controls

Zero trust extends beyond networking:

User Identity Integration: Connecting service mesh authorization with user identity (OAuth, OIDC) enables user-aware service policies.

Context-Aware Access: Authorization decisions incorporating device posture, location, time, and behavior patterns.

API Security: Service mesh providing foundational controls complemented by API gateways for external-facing APIs.

Multi-Cloud and Hybrid Networking

Enterprise reality involves workloads spanning multiple clouds and on-premises environments.

Cross-Environment Challenges

Network Connectivity: Establishing secure, reliable connectivity between environments. VPN, dedicated connections, or overlay networks.

Service Discovery: Making services discoverable across environment boundaries. Federated service registries.

Consistent Policy: Applying uniform security and traffic policies across heterogeneous infrastructure.

Latency and Reliability: Managing degraded network characteristics between environments compared to within a single cloud.

Multi-Cluster Service Mesh

Modern service meshes support multi-cluster deployments:

Federated Mesh: Multiple clusters joined into a unified mesh with consistent configuration.

Cross-Cluster Service Discovery: Services in one cluster reaching services in other clusters transparently.

Traffic Management Across Clusters: Routing policies spanning cluster boundaries for geographic distribution or failover.

Unified Observability: Consolidated visibility across all clusters.
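Cross-cluster failover usually reduces to a priority decision: send traffic to healthy endpoints in the nearest locality and spill over to more distant ones only when local capacity runs out. The minimal sketch below captures that ordering; the `Endpoint` fields, the zone/region/elsewhere tiers, and the `min_healthy` threshold are illustrative assumptions, not the configuration model of any particular mesh (Envoy, for example, uses priority levels with percentage-based overprovisioning rather than a simple count).

```python
from dataclasses import dataclass
from typing import Dict, List, Sequence


@dataclass(frozen=True)
class Endpoint:
    address: str
    cluster: str
    region: str
    zone: str
    healthy: bool


def failover_tier(ep: Endpoint, region: str, zone: str) -> int:
    # 0 = same zone, 1 = same region, 2 = anywhere else (e.g. another cloud)
    if ep.region == region and ep.zone == zone:
        return 0
    if ep.region == region:
        return 1
    return 2


def select_endpoints(endpoints: Sequence[Endpoint], region: str, zone: str,
                     min_healthy: int = 1) -> List[Endpoint]:
    """Return the healthy endpoints of the closest tier that has enough of them."""
    tiers: Dict[int, List[Endpoint]] = {0: [], 1: [], 2: []}
    for ep in endpoints:
        if ep.healthy:
            tiers[failover_tier(ep, region, zone)].append(ep)
    for tier in (0, 1, 2):
        if len(tiers[tier]) >= min_healthy:
            return tiers[tier]
    # No single tier is large enough: fall back to every healthy endpoint.
    return [ep for tier in (0, 1, 2) for ep in tiers[tier]]
```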

Hybrid Architecture Patterns

Gateway-Based Connectivity: API gateways bridging mesh and non-mesh environments. Legacy systems accessible through gateway proxies.

Sidecar Injection for Non-Kubernetes Workloads: Mesh capabilities extended to VM-based workloads by running the mesh proxy on those hosts, outside Kubernetes.

Progressive Adoption: Migrating workloads to mesh incrementally while maintaining connectivity with legacy networking.

Kubernetes Networking Fundamentals

Service mesh builds on Kubernetes networking primitives, which are worth understanding.

Pod Networking

Kubernetes establishes a flat pod network in which every pod receives its own IP address, reachable from every other pod in the cluster. Pods communicate directly without NAT. Container Network Interface (CNI) plugins implement this networking.

CNI Options: Calico, Cilium, Flannel, AWS VPC CNI, and others provide different capabilities around performance, security, and policy.

Services and Endpoints

Kubernetes Services provide stable network endpoints for sets of pods:

ClusterIP: Internal-only virtual IP routing to backend pods.

NodePort: Exposes service on each node’s IP at a specific port.

LoadBalancer: Provisions cloud load balancer for external access.

Ingress and Gateway API

Ingress: Original Kubernetes API for external traffic routing. HTTP/HTTPS focused with limited capabilities.

Gateway API: Modern replacement providing richer traffic management. Service mesh implementations increasingly use Gateway API for unified ingress and mesh configuration.

Network Policies

Native Kubernetes Network Policies provide basic microsegmentation, enforced only when the cluster’s CNI plugin supports them:

Pod Selection: Policies apply to pods matching label selectors.

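Ingress/Egress Rules: Allow traffic based on source/destination (pod, namespace, or IP block selectors) and ports. Rules are allow-only and additive; once any policy selects a pod, traffic not explicitly allowed is denied.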

Namespace Isolation: Restricting cross-namespace communication.

Network policies provide a foundation that service mesh enhances with richer capabilities.
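Because NetworkPolicy rules are allow-only and additive, the effective behavior is easy to misread. A minimal Python model of ingress evaluation for one destination pod follows: a pod that no policy selects accepts all traffic, while a selected pod accepts only traffic that at least one selecting policy allows. The model is a deliberate simplification (all pods in one namespace, exact label matches only, no namespaceSelector, ipBlock, or named ports), and the `Policy` and `IngressRule` types are hypothetical rather than the Kubernetes API objects.

```python
from dataclasses import dataclass
from typing import FrozenSet, Mapping, Optional, Sequence

Labels = Mapping[str, str]


def matches(selector: Labels, labels: Labels) -> bool:
    # matchLabels-style check: every key/value in the selector must be present.
    # An empty selector matches every pod, as an empty podSelector does.
    return all(labels.get(key) == value for key, value in selector.items())


@dataclass(frozen=True)
class IngressRule:
    from_pods: Optional[Labels] = None   # None: any source pod
    ports: FrozenSet[int] = frozenset()  # empty: any port


@dataclass(frozen=True)
class Policy:
    pod_selector: Labels
    ingress: Sequence[IngressRule] = ()  # no rules: selected pods accept nothing


def ingress_allowed(policies: Sequence[Policy], dst: Labels, src: Labels,
                    port: int) -> bool:
    """Model of ingress evaluation for pods in a single namespace."""
    selecting = [p for p in policies if matches(p.pod_selector, dst)]
    if not selecting:
        return True  # no policy selects the pod, so it is not isolated
    # Allow-only and additive: any rule in any selecting policy can admit traffic.
    return any(
        (rule.from_pods is None or matches(rule.from_pods, src))
        and (not rule.ports or port in rule.ports)
        for policy in selecting
        for rule in policy.ingress
    )
```

Adding a second policy can only widen what a pod accepts, never narrow it; narrowing means editing or removing the policy that grants the access.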

eBPF and the Future of Cloud Networking

Extended Berkeley Packet Filter (eBPF) is transforming cloud-native networking, enabling capabilities that previously required kernel modules or userspace proxies.

eBPF Fundamentals

eBPF allows running sandboxed programs in the Linux kernel without kernel modifications. For networking, this enables:

Kernel-Level Processing: Handling packets in the kernel rather than userspace, avoiding the context switches and data copies of a userspace hop.

Programmable Datapath: Custom networking logic loaded at runtime, without recompiling the kernel or loading kernel modules.

Deep Observability: Visibility into kernel networking behavior that is difficult to obtain from userspace.

Cilium and eBPF Networking

Cilium pioneered eBPF-based Kubernetes networking, providing:

High-Performance CNI: Network connectivity with minimal overhead.

Network Policy Enforcement: Efficient policy processing in the kernel.

Service Mesh Without Sidecars: Mesh capabilities implemented with eBPF and a shared per-node Envoy proxy rather than per-pod sidecar proxies, reducing resource consumption and latency.

Advanced Observability: Hubble provides deep visibility into network flows.

Sidecar-Free Service Mesh

Traditional service meshes rely on sidecar proxies, which add resource overhead and latency. eBPF-based approaches process much of this traffic in the kernel:

Resource Efficiency: Eliminating per-pod sidecar containers reduces cluster resource consumption.

Lower Latency: In-kernel processing avoids passing each request through additional userspace proxies.

Simplified Operations: Fewer components to manage and troubleshoot.

The tradeoff: eBPF approaches may have fewer features than mature sidecar proxies, though capability gaps are narrowing.

Implementation Considerations

Successful enterprise service mesh adoption requires addressing several considerations.

Rollout Strategy

Progressive Adoption: Enabling mesh for specific namespaces or services before organization-wide deployment. Gaining experience incrementally.

Permissive to Strict: Starting with permissive policies allowing all traffic, then progressively tightening to least privilege.

Ambient Mesh Options: Istio’s ambient mesh and Cilium’s sidecar-free approach enable gradual adoption without modifying workloads.
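The permissive-to-strict progression is easiest to see as an admission decision made by the receiving proxy. In the hypothetical sketch below, `PERMISSIVE` accepts both plaintext and mTLS connections (so workloads not yet in the mesh keep working) while `STRICT` rejects plaintext. The mode names mirror Istio’s PeerAuthentication modes, but the functions are illustrations, not Istio code; `namespaces_ready_for_strict` assumes you already collect per-namespace plaintext connection counts from mesh telemetry.

```python
from enum import Enum
from typing import Dict, List, Optional


class MtlsMode(Enum):
    PERMISSIVE = "PERMISSIVE"  # accept plaintext and mTLS (migration phase)
    STRICT = "STRICT"          # accept mTLS only


def admit(mode: MtlsMode, peer_identity: Optional[str]) -> bool:
    """Decide whether to accept an inbound connection.

    peer_identity is the verified identity from the client certificate,
    or None when the client connected in plaintext.
    """
    if peer_identity is not None:
        return True  # authenticated peer; authorization is evaluated separately
    return mode is MtlsMode.PERMISSIVE


def namespaces_ready_for_strict(plaintext_conns: Dict[str, int]) -> List[str]:
    """Namespaces whose observed plaintext connection count has reached zero."""
    return sorted(ns for ns, count in plaintext_conns.items() if count == 0)
```

Tightening one namespace at a time, only after its plaintext traffic has disappeared, keeps the move to strict mode from breaking callers that are not yet in the mesh.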

Performance Optimization

Service mesh introduces latency that must be managed:

Protocol Selection: gRPC and HTTP/2 connection multiplexing reduces connection overhead compared to HTTP/1.1.

Sidecar Resource Allocation: Appropriately sizing sidecar containers prevents CPU throttling.

Intelligent Load Balancing: Locality-aware routing minimizes network hops.

Connection Pooling: Reusing connections reduces establishment overhead.
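Because each hop in a sidecar mesh traverses two proxies (the caller’s outbound sidecar and the callee’s inbound sidecar), proxy overhead grows with the depth of the call chain on the critical path, not only with request volume. The sketch below does that arithmetic; the per-proxy overhead is a placeholder to replace with your own measurements, not a benchmark of any mesh.

```python
def added_mesh_latency_ms(call_depth: int, per_proxy_ms: float,
                          proxies_per_hop: int = 2) -> float:
    """Extra latency a sequential chain of service calls pays for proxying.

    call_depth: service-to-service hops on the critical path (calls made
        in parallel share a level and do not add depth).
    per_proxy_ms: assumed overhead of one proxy traversal; measure your own.
    proxies_per_hop: 2 for a sidecar on each side of every hop; set this to
        whatever your data plane actually does.
    """
    if call_depth < 0 or per_proxy_ms < 0 or proxies_per_hop < 0:
        raise ValueError("inputs must be non-negative")
    return call_depth * proxies_per_hop * per_proxy_ms
```

With an assumed 0.5 ms per proxy, a five-hop chain adds 5 ms, which is why reducing call depth, reusing connections, and keeping traffic local all compound.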

Operational Considerations

Complexity Management: Service mesh adds operational complexity. Teams need training and experience.

Debugging Practices: Troubleshooting shifts from application-level to mesh-level. New tools and approaches required.

Upgrade Management: Mesh upgrades require careful planning to avoid disruption.

Multi-Tenancy: Shared mesh across teams requires governance and isolation.

Observability Integration

Service mesh observability must integrate with broader observability strategy.

Metrics Integration

Prometheus Integration: Service mesh metrics exported in Prometheus format for unified collection.

Dashboard Integration: Mesh metrics visualized alongside application metrics in Grafana or similar.

Alerting: Mesh metrics feeding into alerting systems for proactive problem detection.

Distributed Tracing

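Trace Propagation: Proxies generate spans automatically, but applications must forward trace context headers (such as W3C traceparent or Zipkin B3 headers) from inbound to outbound requests so that spans join into a single trace.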

Backend Integration: Traces exported to Jaeger, Zipkin, Tempo, or commercial APM platforms.

Application Correlation: Connecting mesh traces with application-level instrumentation.
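In practice, a mesh’s proxies can only join their spans into one trace if each service copies the incoming trace context onto the outgoing calls it makes, because the proxy cannot link an inbound request to the outbound requests the application issues while handling it. A minimal sketch of that forwarding step follows; the header list is an assumption based on W3C Trace Context, Zipkin B3, and the `x-request-id` header used by Envoy-based meshes, so check which headers your mesh and tracer actually use.

```python
from typing import Dict, Mapping

# Assumed set of context headers: W3C Trace Context, Zipkin B3, and the
# request ID commonly used by Envoy-based meshes for log correlation.
TRACE_HEADERS = (
    "traceparent",
    "tracestate",
    "x-request-id",
    "b3",
    "x-b3-traceid",
    "x-b3-spanid",
    "x-b3-parentspanid",
    "x-b3-sampled",
    "x-b3-flags",
)


def propagate_trace_context(inbound: Mapping[str, str]) -> Dict[str, str]:
    """Headers to copy from the inbound request onto every outbound call."""
    lowered = {k.lower(): v for k, v in inbound.items()}
    return {h: lowered[h] for h in TRACE_HEADERS if h in lowered}


def trace_id_from_traceparent(value: str) -> str:
    # W3C version 00 layout: version-traceid-parentid-flags.
    version, trace_id, parent_id, flags = value.split("-")
    if len(trace_id) != 32 or len(parent_id) != 16:
        raise ValueError(f"malformed traceparent: {value!r}")
    return trace_id
```

Only the trace context is forwarded; unrelated inbound headers such as User-Agent are deliberately dropped.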

Log Aggregation

Access Log Collection: Mesh access logs collected into centralized logging platforms.

Correlation: Correlating mesh logs with application logs through request IDs.
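A minimal sketch of that correlation step groups proxy access-log entries and application log lines by a shared request ID. The dictionary shapes and the `request_id` field name are hypothetical; in Envoy-based meshes the ID typically travels in the `x-request-id` header, and the application must log it for the join to work.

```python
from collections import defaultdict
from typing import Dict, Iterable, Mapping


def correlate_by_request_id(mesh_logs: Iterable[Mapping],
                            app_logs: Iterable[Mapping],
                            key: str = "request_id") -> Dict[str, dict]:
    """Group mesh and application log entries that share a request ID.

    Entries without the key are skipped. The result maps each request ID to
    {"mesh": [...], "app": [...]}, so a slow or failed request seen by the
    proxy can be followed into the application's own log lines.
    """
    grouped: Dict[str, dict] = defaultdict(lambda: {"mesh": [], "app": []})
    for source, entries in (("mesh", mesh_logs), ("app", app_logs)):
        for entry in entries:
            request_id = entry.get(key)
            if request_id is not None:
                grouped[request_id][source].append(entry)
    return dict(grouped)
```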

Governance and Policy

Enterprise mesh deployment requires governance structures.

Policy Management

Policy as Code: Mesh policies stored in version control, reviewed through pull requests.

GitOps Deployment: Policy changes deployed through GitOps workflows ensuring auditability.

Policy Testing: Validating policies before production deployment through testing frameworks.

Multi-Team Coordination

Namespace Delegation: Teams managing their own mesh policies within delegated boundaries.

Central Standards: Platform teams maintaining organization-wide standards while enabling team autonomy.

Policy Hierarchy: Organization, team, and application-level policies with appropriate inheritance.

Compliance

Audit Logging: Mesh providing comprehensive audit trails for compliance requirements.

Encryption Standards: mTLS helping meet encryption-in-transit requirements for regulated industries.

Access Control Evidence: Authorization policies demonstrating least privilege implementation.

Future Directions

Cloud-native networking continues evolving:

Ambient Mesh Maturation: Sidecar-free approaches becoming production-ready for broader adoption; Istio’s ambient mode reached general availability in Istio 1.24.

Gateway API Standardization: Unified configuration model across ingress, mesh, and multi-cluster scenarios.

eBPF Expansion: More networking capabilities moving to eBPF for performance and efficiency.

AI Operations: Machine learning for anomaly detection, auto-tuning, and intelligent routing decisions.

WebAssembly Extensions: Custom mesh functionality through portable WASM modules.

Strategic Recommendations

For enterprise CTOs planning cloud-native networking:

- Start with Requirements: Define what networking capabilities are actually needed before selecting technologies.

- Progressive Adoption: Begin with limited scope, gaining experience before broad deployment.

- Platform Strategy: Service mesh is a platform capability requiring investment in teams, tooling, and practices.

- Security Integration: Leverage mesh for zero trust security rather than treating it purely as traffic management.

- Observability Priority: Invest in observability tooling to realize mesh visibility benefits.

- Future-Proofing: Consider eBPF-based approaches for new deployments, given their efficiency advantages and the direction the ecosystem is moving.

Conclusion

Cloud-native networking through service mesh and related technologies provides capabilities essential for operating distributed systems at enterprise scale. The complexity is real, but so are the benefits: consistent security, comprehensive observability, and sophisticated traffic management across dynamic, distributed environments.

Organizations investing in cloud-native networking capabilities will operate their distributed systems with greater confidence, security, and visibility. Those attempting to apply legacy networking approaches to cloud-native architectures will face mounting challenges as system complexity grows.

For enterprise CTOs, cloud-native networking deserves strategic attention as a foundational capability enabling the broader cloud-native transformation.
