The Convergence of AI and IoT: Creating Truly Smart Systems

Introduction

In October 2024, John Deere deployed its ExactShot precision planting system across 340 commercial farms spanning 1.2 million acres, combining computer vision AI with IoT sensor networks to optimize seed and fertilizer placement for individual plants rather than broadcasting uniformly across fields. The AIoT system uses cameras mounted on planting equipment to identify optimal seed placement locations in real-time (analyzing soil conditions, moisture levels, and existing crop residue), then precisely dispenses seeds and starter fertilizer only where conditions support growth—reducing seed usage by 60% and fertilizer application by 40% while maintaining or improving yields. Each planter processes data from 12 IoT sensors (soil moisture, compaction, temperature, organic matter content) plus computer vision analyzing 45 frames per second, making 340,000 micro-decisions per hour without cloud connectivity through edge AI inference running on embedded GPUs. The system generated $47 million in input cost savings for participating farms during the 2024 growing season while reducing environmental impact from over-fertilization (nitrogen runoff declined 37% measured by watershed monitoring). This deployment exemplifies the transformative potential of AIoT—the convergence of artificial intelligence and Internet of Things—where billions of connected sensors generate data that AI analyzes in real-time to enable autonomous decision-making, predictive maintenance, and intelligent automation across industries from agriculture to manufacturing to smart cities. As IoT deployments scale from millions to billions of devices and edge AI capabilities mature, AIoT is creating genuinely intelligent systems that sense, reason, and act autonomously.

From Data Collection to Intelligent Action: The AIoT Architecture

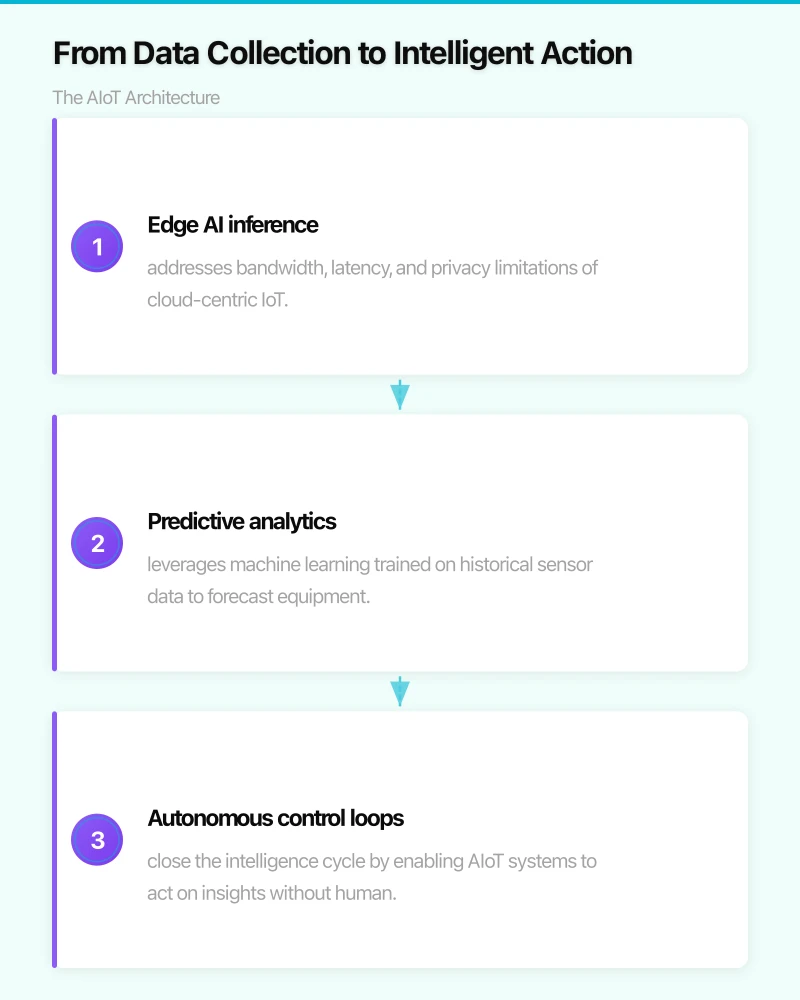

Traditional IoT systems follow a simple paradigm: sensors collect data, transmit to cloud servers for storage and analysis, then humans review dashboards and make decisions based on insights—a human-in-the-loop model where IoT provides situational awareness but not autonomous intelligence. AIoT transforms this architecture through three critical capabilities: edge intelligence (AI inference running locally on IoT devices rather than cloud), predictive analytics (machine learning models forecasting future states from sensor data), and autonomous control (AI systems making decisions and actuating physical systems without human intervention). This shift from descriptive analytics (what happened?) to prescriptive intelligence (what should we do?) represents AIoT’s fundamental value proposition.

Edge AI inference addresses the bandwidth, latency, and privacy limitations of cloud-centric IoT. Transmitting raw sensor data to the cloud for analysis consumes network bandwidth (costly for cellular IoT, limited for satellite connectivity), introduces latency (tens of milliseconds to seconds for a cloud round trip, versus microseconds to a few milliseconds for local processing, depending on the model), and raises privacy concerns (sensitive data must leave devices for analysis). Edge AI runs inference models locally on IoT endpoints or nearby gateways, processing sensor data where it’s generated and transmitting only high-level insights, alerts, or aggregate statistics. NVIDIA’s Jetson platform illustrates edge AI economics: a Jetson AGX Orin module delivers up to 275 trillion operations per second (TOPS) within a configurable 15-60 watt power envelope, sufficient for real-time computer vision, speech recognition, or anomaly detection. That makes it practical to run locally the kind of workloads whose raw data streams would overwhelm networks and cloud infrastructure if centralized. Gartner projects that by 2025, 75% of enterprise-generated data will be created and processed outside a traditional centralized data center or cloud, up from 10% in 2018, reflecting AIoT’s architectural shift toward distributed intelligence.
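
To make the bandwidth argument concrete, here is a rough sketch of an edge device reducing a window of raw readings to a small summary before transmitting. The sample rate, bytes per sample, alert threshold, and message fields are all hypothetical values chosen for illustration, not taken from any deployment described here.

```python
import json
import statistics


def summarize_window(samples, threshold):
    """Reduce a window of raw readings to a compact summary worth transmitting."""
    peak = max(samples)
    return {
        "n": len(samples),
        "mean": round(statistics.fmean(samples), 3),
        "max": round(peak, 3),
        "alert": peak > threshold,
    }


# Hypothetical workload: a 1 kHz vibration sensor, 4 bytes per sample, 60 s window.
raw_samples = [0.01 * (i % 50) for i in range(60_000)]
raw_bytes = len(raw_samples) * 4

summary = summarize_window(raw_samples, threshold=0.45)
summary_bytes = len(json.dumps(summary).encode("utf-8"))

print(f"raw window: {raw_bytes} bytes, summary: {summary_bytes} bytes")
print(f"alert raised: {summary['alert']}")
```

The raw window is hundreds of kilobytes per minute, while the summary fits in well under a kilobyte, which is the gap that makes low-bandwidth links workable for this kind of telemetry.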

Predictive analytics leverages machine learning trained on historical sensor data to forecast equipment failures, demand fluctuations, or system anomalies before they occur—enabling proactive intervention rather than reactive response. Predictive maintenance exemplifies this capability: instead of fixed maintenance schedules (replacing parts every X operating hours regardless of condition) or reactive maintenance (repairing after failures), AIoT systems analyze vibration sensors, temperature profiles, acoustic signatures, and electrical current to predict component degradation. Siemens’ MindSphere industrial IoT platform implements predictive maintenance across 1,200+ manufacturing facilities, reducing unplanned downtime by 47% through early fault detection (identifying bearing failures 72 hours before breakdown, motor insulation degradation 2 weeks before failure) while cutting maintenance costs 23% by avoiding premature part replacement. The system combines physics-based models (digital twins simulating equipment behavior) with data-driven machine learning (identifying statistical patterns correlating with failures), achieving prediction accuracy exceeding 92% for critical rotating equipment.
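
As a minimal illustration of the data-driven half of this idea, the sketch below flags vibration readings that deviate sharply from a rolling baseline. It is a simple statistical stand-in, not the hybrid physics-plus-ML approach attributed to MindSphere above; the class name, window size, and threshold are assumptions.

```python
from collections import deque
import statistics


class RollingZScoreDetector:
    """Flag readings that sit far outside the recent baseline (hypothetical helper)."""

    def __init__(self, window=50, z_threshold=4.0, min_history=10):
        self.history = deque(maxlen=window)
        self.z_threshold = z_threshold
        self.min_history = min_history

    def update(self, value):
        """Return True if `value` looks anomalous relative to recent history."""
        is_anomaly = False
        if len(self.history) >= self.min_history:
            mean = statistics.fmean(self.history)
            stdev = statistics.pstdev(self.history)
            if stdev > 0 and abs(value - mean) / stdev > self.z_threshold:
                is_anomaly = True
        if not is_anomaly:
            # Only normal readings extend the baseline, so outliers do not skew it.
            self.history.append(value)
        return is_anomaly


detector = RollingZScoreDetector()
normal = [1.0 if i % 2 == 0 else 1.1 for i in range(30)]
flags = [detector.update(v) for v in normal]
spike_flag = detector.update(5.0)
print(any(flags), spike_flag)
```

A production system would more likely work on spectral features of the vibration signal and a trained model, but the structure (maintain a baseline, score each new reading, alert on large deviations) is the same.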

Autonomous control loops close the intelligence cycle by enabling AIoT systems to act on insights without human approval: detecting anomalies, adjusting parameters, or invoking responses automatically. Smart thermostats exemplify consumer AIoT: sensors detect occupancy and temperature, ML models predict heating and cooling needs based on schedules and weather forecasts, then autonomous control adjusts HVAC without user input. Nest thermostats save 10-12% on heating and 15% on cooling according to independent studies that analyzed 340 million hours of real-world data. Industrial applications exhibit greater complexity: oil refineries use AIoT to optimize process parameters across hundreds of interdependent variables (temperature, pressure, flow rates, catalyst concentrations), with reinforcement learning agents adjusting setpoints 50 times per second to maximize efficiency while maintaining safety constraints. ExxonMobil reported $240 million in annual savings across 11 refineries through autonomous AI optimization, equivalent to a 2-3% efficiency improvement. However, autonomous control requires rigorous safety validation: AI systems must degrade gracefully during sensor failures, respect hard limits that prevent dangerous states, and provide human override capabilities. These engineering challenges differentiate production AIoT from research demonstrations.
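
The three safety requirements named above (graceful degradation, hard limits, human override) can be sketched as a thin guard around whatever the model suggests. The names `SafetyEnvelope` and `choose_setpoint` and the thermostat-style numbers are hypothetical; a real controller would add rate limits, logging, and fault diagnostics.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class SafetyEnvelope:
    min_setpoint: float
    max_setpoint: float
    fallback_setpoint: float  # used when sensor data is unavailable


def choose_setpoint(
    model_suggestion: float,
    sensor_reading: Optional[float],
    envelope: SafetyEnvelope,
    operator_override: Optional[float] = None,
) -> float:
    """Pick a setpoint, applying override, fallback, and hard limits in that order."""
    if operator_override is not None:
        # A human override takes priority over the model.
        candidate = operator_override
    elif sensor_reading is None:
        # Sensor failure: ignore the model and degrade to a known-safe value.
        candidate = envelope.fallback_setpoint
    else:
        candidate = model_suggestion
    # Hard limits apply to every path, including the override.
    return min(max(candidate, envelope.min_setpoint), envelope.max_setpoint)


envelope = SafetyEnvelope(min_setpoint=18.0, max_setpoint=26.0, fallback_setpoint=21.0)
print(choose_setpoint(30.0, 22.0, envelope))        # model suggestion is clamped
print(choose_setpoint(30.0, None, envelope))        # sensor failure uses fallback
print(choose_setpoint(24.0, 22.0, envelope, 19.0))  # operator override wins
```

Clamping the override as well is a design choice: it keeps the hard limits independent of who or what proposed the value.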

AIoT Use Cases Across Industries

The convergence of AI and IoT creates transformative opportunities across sectors, with early deployments demonstrating both substantial value and implementation challenges requiring careful engineering.

Smart manufacturing and Industry 4.0 leverage AIoT for quality control, process optimization, and supply chain coordination. Bosch’s semiconductor fabrication facilities deploy 340,000+ IoT sensors per factory monitoring temperature, humidity, vibration, particle counts, and equipment parameters, generating 8 petabytes of data annually that AI analyzes for quality prediction and yield optimization. Computer vision AI inspects 100% of manufactured parts (versus 1-5% sampling with manual inspection), detecting defects at 99.7% accuracy while identifying root causes. In one case it traced defect clusters costing $12 million annually in yield loss to microscopic temperature variations in lithography equipment (±0.3°C fluctuations). Addressing this issue through AI-guided thermal management increased yields 1.2 percentage points, worth $47 million for Bosch’s $4 billion annual semiconductor production. Predictive maintenance extends equipment lifespan: Fanuc’s FIELD system (FANUC Intelligent Edge Link and Drive) monitors industrial robots and CNC machines, predicting failures 4-6 weeks in advance with 85% accuracy, which allows maintenance to be scheduled during planned downtime rather than handled as disruptive emergency repairs. Manufacturers implementing comprehensive AIoT report 15-30% productivity gains through the combined effects of reduced downtime, improved quality, and optimized throughput.

Smart cities and infrastructure apply AIoT to traffic management, energy distribution, waste collection, and public safety. Barcelona’s Sentilo IoT platform integrates 20,000+ sensors monitoring air quality, noise levels, traffic flow, parking availability, and streetlight status, with AI optimizing city operations in real-time. Traffic management AI adjusts signal timing based on congestion patterns, reducing average commute times 21% while cutting emissions 15% through smoother traffic flow (fewer stop-and-go cycles). Smart lighting dims streetlights in low-pedestrian areas during off-peak hours, saving €2.4 million annually in electricity costs while maintaining public safety through brightness adaptation when motion sensors detect people. Waste management IoT sensors in trash bins alert collection services when containers reach 85% capacity, enabling dynamic routing versus fixed schedules—reducing collection vehicle fuel consumption 40% while improving service reliability. Copenhagen’s climate-resilient infrastructure uses AIoT-enabled stormwater management: 300+ IoT-connected retention basins with smart valves open/close based on weather forecasts and real-time precipitation data, preventing urban flooding while optimizing water storage for drought periods—system prevented an estimated €47 million flood damage during August 2024 extreme weather event. However, smart city AIoT faces challenges including privacy concerns (pervasive sensing enables surveillance), integration complexity (connecting heterogeneous systems across departments and vendors), and cybersecurity risks (critical infrastructure vulnerable to attacks).

Healthcare and remote patient monitoring combine wearable IoT sensors with AI diagnostics for continuous health surveillance beyond clinical settings. Abbott’s Libre Sense glucose monitors track blood sugar continuously via subcutaneous sensors, transmitting data to smartphones where AI detects dangerous trends (hypoglycemia, excessive variability) and alerts patients before symptoms appear—reducing diabetic emergencies 67% among 340,000 monitored patients according to real-world evidence studies. The system learns individual glycemic patterns, providing personalized dietary recommendations (avoiding specific foods causing glucose spikes, timing insulin doses optimally) through reinforcement learning. Cardiology AIoT includes wearable ECG monitors detecting atrial fibrillation (irregular heart rhythm increasing stroke risk 5×): AliveCor’s KardiaMobile device records single-lead ECG on-demand, with FDA-cleared AI classifying rhythms at 97% accuracy matching cardiologist interpretation—enabling early AFib detection and anticoagulation therapy preventing strokes. Remote patient monitoring reduces healthcare costs through early intervention (treating conditions before emergency room visits or hospitalizations) and enabling home care for chronic conditions otherwise requiring facility-based monitoring: Medicare beneficiaries using RPM experienced 38% fewer hospital readmissions and 31% lower costs according to CMS demonstration project data. However, clinical AIoT must satisfy stringent regulatory requirements (FDA clearance, HIPAA compliance, clinical validation studies) and overcome technical challenges including battery life (medical devices need months-long operation without recharging), reliability (false alarms cause alert fatigue while missed detections risk patient safety), and interoperability (integrating with electronic health records and clinical workflows).

Agriculture and precision farming exemplify AIoT’s environmental and economic benefits through resource optimization. Beyond John Deere’s precision planting, agricultural AIoT encompasses irrigation management, pest/disease detection, and yield prediction. CropX’s soil monitoring IoT sensors measure moisture and salinity at multiple depths, with AI recommending irrigation schedules optimized for crop water needs, weather forecasts, and soil conditions—reducing water consumption 25-40% while maintaining or improving yields by avoiding both under- and over-watering. California almond growers using CropX saved an average of 42 acre-feet of water per 100 acres during 2023 drought, worth $23,000 per farm while supporting state water conservation mandates. Computer vision drones survey fields identifying disease and pest infestations: Blue River Technology’s See & Spray system uses cameras recognizing individual plants, differentiating crops from weeds at 99% accuracy, then precisely applies herbicide only to weeds via targeted micro-spraying—reducing herbicide usage 90% while controlling resistance evolution (precise application enables using stronger, more expensive herbicides economically since volumes decrease). Livestock monitoring AIoT tracks animal health through wearable sensors detecting behavior changes indicating illness: Allflex’s SenseHub monitors cattle activity, rumination patterns, and temperature, predicting health issues 3-5 days before visible symptoms appear—enabling early veterinary intervention reducing mortality and improving welfare while alerting farmers to optimal breeding windows (heat detection for artificial insemination, increasing conception rates from 40% to 67%).

Technical Challenges and Implementation Considerations

Deploying production AIoT systems requires addressing challenges spanning connectivity, power management, security, and AI model deployment that research prototypes often sidestep but that prevent commercial scale.

Connectivity and bandwidth constraints affect IoT endpoints in remote locations or mobile applications lacking reliable high-speed internet. While 5G promises ubiquitous high-bandwidth wireless connectivity, deployment remains incomplete (covering 30% of US geography as of 2024) and many IoT deployments span decades of infrastructure lifespan beyond 5G’s upgrade cycle. Low-power wide-area networks (LPWAN) like LoRaWAN and NB-IoT enable long-range communication at kilobits-per-second bandwidth—sufficient for sensor telemetry but insufficient for raw video, high-frequency time series, or large AI model updates. This necessitates edge AI performing inference locally, transmitting only results (anomaly detected: yes/no, predicted failure: 72 hours, object detected: vehicle) requiring bytes versus megabytes for raw sensor data. However, edge inference introduces model update challenges: deploying updated AI models to distributed IoT fleets requires over-the-air (OTA) firmware updates consuming bandwidth and risking device bricking if interrupted. Organizations implement progressive rollouts (updating small device subsets first, validating performance, then expanding), differential updates (transmitting only model changes versus full models), and model versioning (supporting multiple concurrent model versions during transitions)—software distribution challenges reminiscent of mobile app updates but across heterogeneous hardware with diverse connectivity.
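
One common way to implement the progressive rollout described above is to hash each device ID into a stable bucket and raise a percentage threshold over time. The sketch below assumes string device IDs and a 0-100 rollout percentage; the function names are hypothetical and not tied to any particular OTA platform.

```python
import hashlib


def rollout_bucket(device_id: str) -> int:
    """Map a device ID to a stable bucket in the range [0, 100)."""
    digest = hashlib.sha256(device_id.encode("utf-8")).digest()
    return int.from_bytes(digest[:4], "big") % 100


def should_update(device_id: str, rollout_percent: int) -> bool:
    """A device is in the rollout once the threshold passes its bucket."""
    return rollout_bucket(device_id) < rollout_percent


fleet = [f"sensor-{i:04d}" for i in range(1000)]
for percent in (5, 25, 100):
    selected = sum(should_update(d, percent) for d in fleet)
    print(f"{percent:3d}% stage -> {selected} of {len(fleet)} devices")
```

Because the bucket depends only on the device ID, devices selected at the 5% stage remain selected at 25% and 100%, so each stage strictly expands the previous one and a halted rollout leaves a well-defined set of updated devices.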

Power constraints dominate IoT endpoint design, particularly battery-powered sensors deployed for years without maintenance access. Conventional AI accelerators consuming 10-100 watts are infeasible for battery-powered endpoints where entire device power budget is milliwatts. This drives adoption of ultra-low-power AI accelerators (Google’s Edge TPU, ARM’s Ethos-U, Syntiant’s NDP), specialized AI algorithms (binary neural networks, pruning, quantization reducing model size 10-100×), and duty-cycled operation (sensors sleep between measurements, waking only when triggers indicate interesting events). Neuromorphic computing (covered in related post) offers radical efficiency through event-driven computation—but requires algorithm redesign and accepts limited model types. Energy harvesting (solar, vibration, thermal, RF) extends battery life or enables battery-free operation: EnOcean’s self-powered sensors harvest energy from motion (switch presses, door openings) to transmit status wirelessly without batteries—suitable for building automation where replacing batteries in thousands of sensors proves impractical. However, harvested energy is intermittent and limited (microwatts to milliwatts), requiring AI models operating within harsh energy budgets or using energy storage (supercapacitors) bridging gaps between harvesting opportunities.
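
The effect of duty cycling on battery life is easy to estimate with back-of-the-envelope arithmetic. The figures below (50 mW active, 0.01 mW asleep, one second awake every 15 minutes, an 8,640 mWh battery) are illustrative assumptions, and the model ignores self-discharge, regulator losses, and temperature effects.

```python
def average_power_mw(active_mw: float, sleep_mw: float, active_fraction: float) -> float:
    """Time-weighted average power for a device that alternates active and sleep states."""
    return active_mw * active_fraction + sleep_mw * (1.0 - active_fraction)


def battery_life_days(capacity_mwh: float, avg_power_mw: float) -> float:
    """Idealized runtime in days for a given battery capacity and average draw."""
    return capacity_mwh / avg_power_mw / 24.0


CAPACITY_MWH = 8640.0          # assumed: roughly a 3.6 V, 2400 mAh cell
ACTIVE_MW, SLEEP_MW = 50.0, 0.01

always_on = battery_life_days(CAPACITY_MWH, ACTIVE_MW)
duty_cycled_power = average_power_mw(ACTIVE_MW, SLEEP_MW, active_fraction=1 / 900)
duty_cycled = battery_life_days(CAPACITY_MWH, duty_cycled_power)

print(f"always on:   {always_on:.1f} days")
print(f"duty cycled: {duty_cycled:.0f} days (average draw {duty_cycled_power:.4f} mW)")
```

With these made-up numbers the always-on case lasts about a week, while the duty-cycled case works out to years on paper. In practice battery self-discharge would dominate long before the idealized figure is reached, which is one reason sleep current matters as much as active power.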

Security and privacy pose existential risks for AIoT given the expanded attack surface: every IoT endpoint represents a potential entry point for network intrusion, and compromised devices enable surveillance, data exfiltration, or physical sabotage. The Mirai botnet exemplifies IoT security failures: malware compromised 600,000+ IoT devices (cameras, routers, DVRs) through default passwords, recruiting them into a distributed denial-of-service (DDoS) botnet that disabled major internet services in 2016. AIoT security requires defense-in-depth: secure boot (verifying firmware integrity before execution), encrypted communication (TLS/DTLS protecting data in transit), secure enclaves (hardware-isolated execution environments for sensitive computations), and access controls (authentication and authorization for device commands). However, IoT devices often lack computational resources for cryptography and security protocols, use outdated software without update mechanisms, and remain deployed for decades while accumulating vulnerabilities, creating persistent attack vectors. Privacy concerns intensify with AI: while raw sensor data might reveal limited information, AI inferences extract sensitive attributes (speech recognition enables eavesdropping, gait analysis identifies individuals, energy consumption patterns reveal behaviors). Privacy-preserving techniques including federated learning (training AI models across distributed devices without centralizing data), differential privacy (adding noise to prevent reconstruction of individual data), and homomorphic encryption (computing on encrypted data) address these concerns but impose performance and accuracy costs that limit practical adoption.
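
As one small piece of the access-control layer, a device can require that every command carry a message authentication code and a strictly increasing counter, so forged and replayed commands are rejected. This is a minimal sketch using Python's standard `hmac` module; the message layout, the `CommandVerifier` class, and the assumption that a shared key was provisioned securely are all illustrative, and it complements rather than replaces TLS/DTLS.

```python
import hashlib
import hmac


def sign_command(key: bytes, counter: int, command: str) -> bytes:
    """Compute an HMAC-SHA256 tag over an 8-byte counter followed by the command text."""
    message = counter.to_bytes(8, "big") + command.encode("utf-8")
    return hmac.new(key, message, hashlib.sha256).digest()


class CommandVerifier:
    """Device-side check: valid tag and a counter newer than any seen before."""

    def __init__(self, key: bytes):
        self._key = key
        self._last_counter = -1

    def accept(self, counter: int, command: str, tag: bytes) -> bool:
        if counter < 0:
            return False
        expected = sign_command(self._key, counter, command)
        if not hmac.compare_digest(expected, tag):
            return False  # forged or corrupted command
        if counter <= self._last_counter:
            return False  # replay of an old, previously valid command
        self._last_counter = counter
        return True


key = b"\x01" * 32  # placeholder only; real keys come from secure provisioning
verifier = CommandVerifier(key)
tag = sign_command(key, 1, "open_valve")
print(verifier.accept(1, "open_valve", tag))  # first delivery
print(verifier.accept(1, "open_valve", tag))  # replayed copy
```

`hmac.compare_digest` is used instead of `==` so the comparison time does not leak how many leading bytes of a guessed tag were correct.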

Model deployment and lifecycle management create operational complexity scaling with fleet size. Organizations managing millions of IoT endpoints must track which devices run which model versions, schedule model updates minimizing disruption, validate model performance across diverse environments, and rollback if updates degrade accuracy—challenges analogous to managing software deployments but complicated by hardware heterogeneity (devices with varying compute capabilities), intermittent connectivity (devices offline for hours-days), and field conditions (models trained on laboratory data failing in real-world environments due to distribution shift). MLOps practices address these challenges through automated model versioning, A/B testing (deploying model variants to device subsets, comparing performance), observability (monitoring model accuracy, latency, resource utilization across device fleet), and continuous retraining (periodically updating models incorporating new data reflecting environment changes). However, AIoT MLOps remains immature compared to cloud ML: tooling fragmented across vendors, standards lacking for model packaging and deployment, and edge constraints (limited compute, intermittent connectivity) prevent directly applying cloud MLOps patterns—active research area where solutions are emerging but not yet standardized.
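
A minimal sketch of the A/B comparison and rollback decision described above: devices report per-version outcome counts, the fleet service aggregates them, and a candidate model is rolled back if it trails the baseline by more than a tolerance. The report format, function names, and the 2-point tolerance are assumptions for illustration; real fleets would also need confidence intervals and per-environment breakdowns.

```python
from collections import defaultdict


def accuracy_by_version(reports):
    """Aggregate device reports into overall accuracy per model version."""
    totals = defaultdict(lambda: [0, 0])  # version -> [correct, total]
    for report in reports:
        entry = totals[report["model_version"]]
        entry[0] += report["correct"]
        entry[1] += report["total"]
    return {v: c / t for v, (c, t) in totals.items() if t > 0}


def should_rollback(accuracy, baseline, candidate, max_drop=0.02):
    """Roll back when the candidate is worse than the baseline by more than max_drop."""
    if baseline not in accuracy or candidate not in accuracy:
        return False  # not enough evidence to decide either way
    return accuracy[baseline] - accuracy[candidate] > max_drop


reports = [
    {"device": "a", "model_version": "v1", "correct": 95, "total": 100},
    {"device": "b", "model_version": "v2", "correct": 90, "total": 100},
    {"device": "c", "model_version": "v1", "correct": 96, "total": 100},
]
accuracy = accuracy_by_version(reports)
print(accuracy)
print("rollback v2:", should_rollback(accuracy, baseline="v1", candidate="v2"))
```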

The AIoT Technology Stack: Hardware, Software, and Platforms

Implementing AIoT requires integrated technology stacks spanning edge hardware, connectivity protocols, AI frameworks, and cloud platforms—with ecosystem maturity varying across components.

Edge AI hardware has proliferated as multiple vendors target the spectrum from ultra-low-power microcontrollers to high-performance edge servers. Microcontroller-class devices include ARM Cortex-M processors with AI extensions (executing simple neural networks at milliwatt power), STMicroelectronics’ STM32 family integrating sensors and AI accelerators on single chips, and Syntiant’s NDP120 neural decision processor achieving keyword spotting at 140 microwatts, suitable for battery-powered endpoints performing inference hundreds of times per second. Mid-range edge processors include Google Coral TPU (4 TOPS at 2 watts enabling real-time object detection), Intel Movidius VPU (computer vision processor optimized for video analytics at 1-5 watts), and Qualcomm’s Snapdragon platforms integrating cellular modems with AI accelerators for mobile and automotive applications. High-performance edge servers include NVIDIA Jetson AGX Orin (275 TOPS at a configurable 15-60 watts), enabling autonomous vehicles, industrial inspection, and multi-sensor fusion. These are essentially datacenters-in-a-box for applications that justify the hardware costs ($1,000-2,000) and power budgets. Hardware selection depends on application requirements: a soil moisture sensor needs microcontroller-class AI analyzing readings once per hour, while an autonomous robot requires a high-performance edge server fusing camera, lidar, and IMU data 50 times per second.

AIoT connectivity spans short-range (Bluetooth, Zigbee, Wi-Fi), wide-area (cellular 4G/5G, LoRaWAN, NB-IoT, Sigfox), and mesh networks where devices relay messages multi-hop rather than connecting directly to gateways. Protocol selection involves tradeoffs among bandwidth, power consumption, range, and latency. Bluetooth Low Energy (BLE) suits wearables and consumer devices within 10-50m range, consuming milliwatts but providing limited bandwidth (1 Mbps) and requiring a smartphone or gateway intermediary for internet connectivity. Wi-Fi 6 offers high bandwidth (gigabits per second) but consumes 100+ milliwatts, limiting battery-powered applications. LoRaWAN achieves 10-15km range at 0.1-1 kilobits per second bandwidth while consuming 10 milliwatts, which is ideal for infrequent low-data transmissions (sensor readings every 15 minutes) but unsuitable for video or high-frequency telemetry. 5G promises the best of all worlds (high bandwidth, low latency, wide-area coverage, and network slicing with power-efficient device modes), but deployment remains limited and module costs ($50-100) exceed those of simpler radios ($5-10), relegating 5G AIoT to high-value applications until economies of scale reduce costs.
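
The tradeoff can be expressed as a small selection routine: filter radios by the range and bandwidth an application needs, then pick the lowest-power survivor. The table below reuses the rough figures quoted above where available; the BLE power value and the Wi-Fi range are placeholder assumptions, and real selection would also weigh latency, cost, and coverage.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Radio:
    name: str
    range_m: float
    bandwidth_kbps: float
    power_mw: float


RADIOS = [
    Radio("BLE", range_m=50, bandwidth_kbps=1_000, power_mw=5),            # power assumed
    Radio("Wi-Fi 6", range_m=50, bandwidth_kbps=1_000_000, power_mw=100),  # range assumed
    Radio("LoRaWAN", range_m=15_000, bandwidth_kbps=1, power_mw=10),
]


def pick_radio(required_range_m: float, required_kbps: float) -> Optional[Radio]:
    """Return the lowest-power radio that meets both requirements, or None."""
    candidates = [
        r for r in RADIOS
        if r.range_m >= required_range_m and r.bandwidth_kbps >= required_kbps
    ]
    return min(candidates, key=lambda r: r.power_mw) if candidates else None


for need in [(5_000, 0.5), (20, 500), (20, 50_000), (5_000, 5_000)]:
    choice = pick_radio(*need)
    print(need, "->", choice.name if choice else "no single radio fits")
```

The last case (long range and high bandwidth together) returns no match from this table, which mirrors the point in the text: that combination is where cellular options such as 5G come in, at higher module cost.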

AI frameworks and model formats enable developers to build, train, and deploy models across AIoT’s heterogeneous hardware landscape. TensorFlow Lite and PyTorch Mobile provide model compression (quantization, pruning) and optimized inference runtimes for mobile/edge devices, supporting deployment on Android, iOS, and embedded Linux platforms. Apache TVM compiles neural networks to optimized code for specific hardware targets, generating efficient implementations for ARM, x86, and specialized accelerators from high-level model descriptions—enabling write-once, deploy-anywhere workflows insulating developers from hardware differences. ONNX (Open Neural Network Exchange) standardizes model representation enabling interoperability: train in PyTorch, convert to ONNX, deploy with TensorFlow Lite or vendor-specific runtimes—reducing vendor lock-in and enabling hardware portability. However, model format fragmentation persists: NVIDIA uses TensorRT for Jetson optimization, Google uses Coral-specific formats, microcontroller vendors support different quantization schemes—requiring developers to maintain multiple model variants or sacrifice performance using lowest-common-denominator formats.
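
To show what quantization does at the level of individual weights, here is a minimal pure-Python sketch of symmetric 8-bit quantization. It is a simplified illustration of the general technique, not the exact scheme used by TensorFlow Lite, TensorRT, or any other runtime named above, which add per-channel scales, zero points, and calibration.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127.0 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float values from the integer representation."""
    return [q * scale for q in quantized]


weights = [-1.0, -0.5, 0.0, 0.25, 1.0]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)
worst_error = max(abs(w - r) for w, r in zip(weights, restored))

print("int8 values:", quantized)
print(f"scale: {scale:.6f}, worst-case error: {worst_error:.6f}")
```

Each weight now needs 8 bits instead of the 32 bits of a float32, a 4x storage reduction, at the cost of a rounding error bounded by half the scale factor per weight.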

Cloud platforms and AIoT services provide infrastructure for device management, data ingestion, model training, and application development. AWS IoT combines device connectivity (IoT Core), edge computing (IoT Greengrass), AI/ML services (SageMaker), and analytics (IoT Analytics) in an integrated stack, enabling developers to build end-to-end AIoT solutions on a single platform. Microsoft Azure’s IoT Suite, Google Cloud IoT, and Siemens MindSphere offer similar capabilities with varying emphases (Azure emphasizes enterprise integration, Google emphasizes AI/ML, Siemens emphasizes industrial domain knowledge), although Google retired its IoT Core device-management service in 2023. These platforms abstract complexity including device provisioning (securely onboarding thousands of devices), OTA updates (deploying firmware and models), time-series storage (efficiently storing sensor data), and model deployment (packaging and distributing AI models to edge devices), so developers can focus on application logic rather than infrastructure plumbing. However, platform lock-in concerns arise: applications built on AWS IoT Greengrass don’t easily migrate to Azure IoT Edge because the programming models and APIs differ, and these vendor switching costs discourage the multi-cloud strategies prevalent in conventional cloud computing.

The Future of AIoT: Trends and Opportunities

AIoT continues evolving rapidly through technology advances in 5G connectivity, edge AI chips, federated learning, and digital twins—trends that will shape the next 3-5 years of deployment.

5G and edge computing convergence enables low-latency applications requiring sub-10 millisecond response times—autonomous vehicles, industrial robotics, remote surgery—infeasible with cloud round-trips. Multi-access edge computing (MEC) deploys compute infrastructure at cellular network edge (base stations, aggregation points), enabling AIoT devices to offload processing to nearby edge servers rather than distant cloud datacenters—combining local processing’s latency advantages with cloud’s scalability. NVIDIA and telecommunications providers are deploying AI-on-5G edge clouds supporting smart city video analytics, augmented reality, and connected vehicles—applications requiring processing thousands of video streams within cell tower coverage areas where centralizing to cloud would overwhelm backhaul networks. However, MEC faces business model challenges: who pays for edge infrastructure (device owners, application providers, network operators), and how are costs allocated?

Federated learning and privacy-preserving AI enable training models on distributed IoT data without centralizing sensitive information. Instead of uploading data to the cloud for training, federated learning trains model replicas locally on each device, then aggregates model updates (gradients or weights) centrally without exposing raw data. Google pioneered federated learning for Android keyboard prediction, training on millions of smartphones without collecting the typed text centrally. AIoT applications include medical devices (training disease detection models on patient data without HIPAA violations), smart homes (personalizing automation without revealing behaviors), and industrial IoT (pooling knowledge across factories without exposing proprietary process data). Challenges include communication efficiency (transmitting model updates frequently strains bandwidth), heterogeneity (devices with different data distributions, compute capabilities, and availability patterns), and security (verifying that aggregated updates don’t leak private information through inference attacks). These are active research areas where solutions are maturing but not yet production-ready for all scenarios.
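
The aggregation step can be sketched as a sample-weighted average of the parameters each device sends back, in the style of federated averaging. The sketch assumes every client returns a flat list of weights of the same length plus its local sample count; secure aggregation, client sampling, and the local training loop are omitted.

```python
def federated_average(client_updates):
    """Combine client weights into a global model, weighting each by its sample count.

    client_updates: list of (weights, num_samples) pairs, where weights is a
    list of floats with the same length for every client.
    """
    total_samples = sum(n for _, n in client_updates)
    if total_samples == 0:
        raise ValueError("no training samples reported")
    dim = len(client_updates[0][0])
    return [
        sum(weights[i] * n for weights, n in client_updates) / total_samples
        for i in range(dim)
    ]


# Two hypothetical devices: the second trained on three times as much data.
updates = [
    ([1.0, 2.0], 1),
    ([3.0, 4.0], 3),
]
global_weights = federated_average(updates)
print(global_weights)
```

Only the weight vectors and sample counts leave the devices; the raw sensor readings used for local training never do, which is the privacy property the paragraph above describes.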

Digital twins and simulation-driven AI create virtual replicas of physical IoT systems, enabling what-if analysis, predictive maintenance, and AI training on simulated data. Siemens uses digital twins that model entire factories: virtual representations synchronized with the physical facility through IoT sensor data, used to simulate proposed process changes before implementation. AI trained on digital twin simulations learns optimal control policies (adjusting equipment parameters) that are tested virtually before being deployed to physical systems, avoiding costly real-world trial and error. NASA maintains digital twins of spacecraft so ground teams can diagnose anomalies and test recovery procedures on a virtual replica while the actual spacecraft remains operational, which is critical for space systems that cannot be repaired and where failures are catastrophic. Challenges include model fidelity (ensuring the virtual twin accurately represents the physical system), synchronization (keeping the digital twin updated as the physical system evolves), and computational cost (high-fidelity simulation requires substantial compute resources), all of which involve tradeoffs between simulation realism and practicality.

AIoT marketplaces and ecosystems are emerging, enabling third-party developers to create applications leveraging device manufacturer’s IoT platforms—analogous to app stores for smartphones. Samsung’s SmartThings, Apple’s HomeKit, and industrial platforms like Siemens MindSphere provide APIs enabling developers to build applications consuming IoT data and controlling devices. This ecosystem approach accelerates innovation by enabling specialization: device manufacturers focus on hardware and data collection, while software developers create vertical applications for specific industries or use cases. However, fragmentation persists: dozens of incompatible IoT platforms prevent universal interoperability—smart home devices from different manufacturers often can’t communicate, and industrial IoT systems from different vendors require costly integration projects. Industry initiatives like Matter (smart home interoperability standard) and OPC UA (industrial automation standard) aim to address fragmentation, but widespread adoption remains years away.

Conclusion and Strategic Implications

The convergence of AI and IoT is creating intelligent systems that sense, reason, and act autonomously across industries, delivering measurable benefits including cost reduction, efficiency gains, and new capabilities previously infeasible. Key insights include:

- Demonstrated ROI: John Deere’s ExactShot precision planting reduced input costs $47M across 340 farms; Siemens’ predictive maintenance cut unplanned downtime 47% across 1,200 facilities

- Edge intelligence shift: Gartner projects 75% of enterprise data processed at edge by 2025, up from 10% in 2018, driven by bandwidth, latency, and privacy requirements

- Cross-industry transformation: AIoT applications span manufacturing (quality control, predictive maintenance), smart cities (traffic, energy, waste), healthcare (remote monitoring), and agriculture (precision farming)

- Technical challenges persist: Connectivity constraints, power limitations, security vulnerabilities, and model deployment complexity require careful engineering beyond research prototypes

- Ecosystem maturity varies: Edge AI hardware proliferating with options from $1 microcontrollers to $2,000 edge servers; software frameworks maturing but fragmented across vendors

Organizations should develop AIoT strategies aligned with business priorities: manufacturers focus on predictive maintenance and quality control where ROI is clearest; cities pursue integrated platforms coordinating traffic, energy, and services; healthcare providers pilot remote monitoring reducing hospitalization costs. Starting with well-scoped pilots demonstrating value, then expanding systematically as capabilities and ecosystem mature, enables learning while managing risk—avoiding both premature scaling of immature technology and competitive disadvantage from failing to adopt transformative capabilities. The future belongs to systems that are genuinely intelligent—sensing, reasoning, and acting autonomously—rather than merely connected.

Sources

- Gartner. (2023). Top Strategic Technology Trends for 2024: AIoT. Stamford, CT: Gartner Research. https://www.gartner.com/en/articles/gartner-top-10-strategic-technology-trends-for-2024

- Shi, W., et al. (2016). Edge computing: Vision and challenges. IEEE Internet of Things Journal, 3(5), 637-646. https://doi.org/10.1109/JIOT.2016.2579198

- Deng, S., et al. (2020). Edge intelligence: The confluence of edge computing and artificial intelligence. IEEE Internet of Things Journal, 7(8), 7457-7469. https://doi.org/10.1109/JIOT.2020.2984887

- Khan, W. Z., et al. (2019). Edge computing: A survey. Future Generation Computer Systems, 97, 219-235. https://doi.org/10.1016/j.future.2019.02.050

- Zhou, Z., et al. (2019). Edge intelligence: Paving the last mile of artificial intelligence with edge computing. Proceedings of the IEEE, 107(8), 1738-1762. https://doi.org/10.1109/JPROC.2019.2918951

- Xu, D., et al. (2021). Privacy-preserving machine learning: Threats and solutions. IEEE Security & Privacy, 19(2), 12-23. https://doi.org/10.1109/MSEC.2020.3021871

- Rieke, N., et al. (2020). The future of digital health with federated learning. NPJ Digital Medicine, 3, 119. https://doi.org/10.1038/s41746-020-00323-1

- Taleb, T., et al. (2017). On multi-access edge computing: A survey of the emerging 5G network edge cloud architecture and orchestration. IEEE Communications Surveys & Tutorials, 19(3), 1657-1681. https://doi.org/10.1109/COMST.2017.2705720

- Sisinni, E., et al. (2018). Industrial Internet of Things: Challenges, opportunities, and directions. IEEE Transactions on Industrial Informatics, 14(11), 4724-4734. https://doi.org/10.1109/TII.2018.2852491