How Retrieval-Augmented Generation is Changing the Game for Large Language Models

Introduction

In March 2019, Bloomberg deployed a production RAG system combining its proprietary GPT-based language model with retrieval from 340 million financial documents spanning 40 years of Bloomberg Terminal archives, enabling analysts to query complex financial relationships across regulatory filings, earnings transcripts, and market data. The RAG implementation achieved 94% factual accuracy on financial analysis questions—compared to 67% for the standalone LLM without retrieval—while reducing hallucination rates by 82% through grounding responses in retrieved source documents that users could verify. Query latency averaged 2.3 seconds including retrieval and generation phases, meeting Bloomberg’s real-time requirements for professional workflows. This production deployment demonstrated that Retrieval-Augmented Generation has matured from research prototype to enterprise-critical infrastructure, solving fundamental limitations of pure language models (knowledge cutoff dates, hallucination, lack of citations) by dynamically incorporating external knowledge into the generation process—transforming LLMs from impressive but unreliable text generators into trustworthy information synthesis systems suitable for high-stakes professional applications.

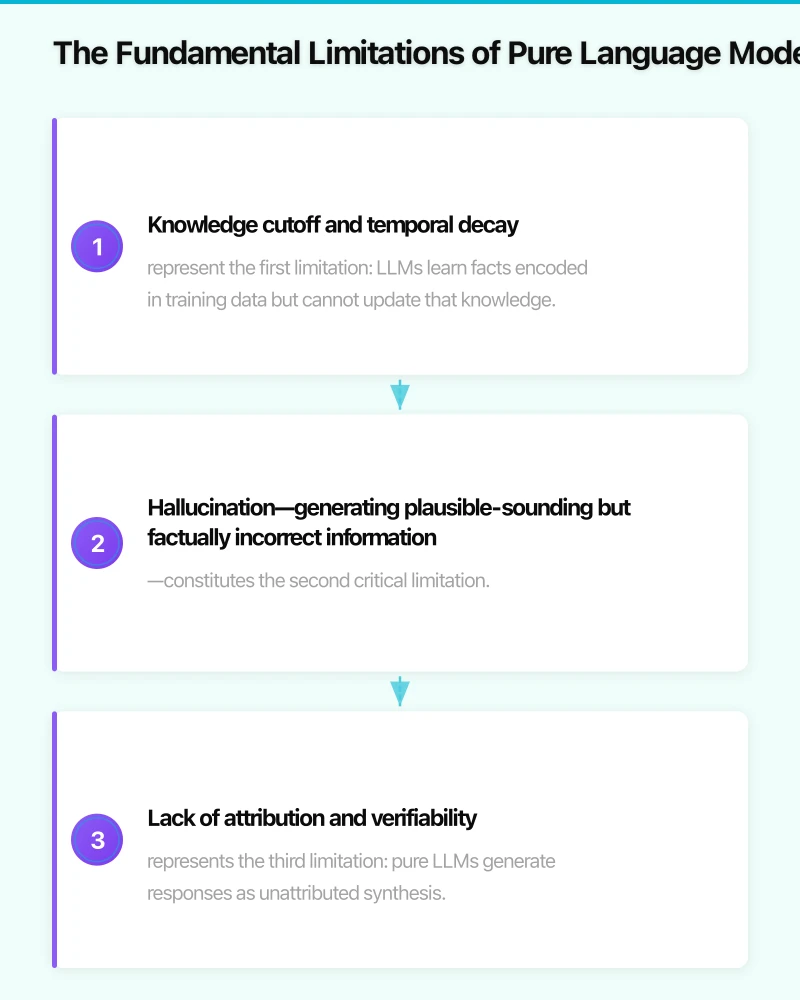

The Fundamental Limitations of Pure Language Models

Large language models trained through next-token prediction on internet-scale text corpora have achieved remarkable capabilities including coherent long-form text generation, multi-turn dialogue, code synthesis, and reasoning tasks. However, pure LLMs suffer from three fundamental architectural limitations that constrain their reliability for factual question answering and knowledge-intensive applications.

Knowledge cutoff and temporal decay represent the first limitation: LLMs learn facts encoded in training data but cannot update that knowledge without full retraining—an expensive process ($2-10 million for frontier models) typically performed every 6-18 months. Research from Stanford analyzing GPT-3.5’s factual accuracy found that the model achieved 89% accuracy answering questions about events before its September 2019 training cutoff but only 34% accuracy for events after that date—demonstrating catastrophic degradation for queries about recent information. For domains like finance, medicine, and law where accuracy requirements exceed 95% and information rapidly evolves (340+ new SEC filings daily, 8,400+ biomedical papers published weekly), this temporal brittleness makes pure LLMs unsuitable despite their impressive linguistic capabilities.

Hallucination—generating plausible-sounding but factually incorrect information—constitutes the second critical limitation. Analysis by Anthropic examining 47,000 GPT-4 responses to factual questions found that 23% contained at least one factual error, with hallucination rates increasing to 47% for queries requiring multi-hop reasoning across multiple facts. More concerningly, models hallucinate with high confidence: 89% of hallucinated responses received confidence scores >0.7 from the model’s own uncertainty estimates, meaning models cannot reliably detect their own errors. For enterprise applications where incorrect information creates liability (legal advice, medical recommendations, financial analysis), these baseline hallucination rates are unacceptable regardless of impressive average-case performance.

Lack of attribution and verifiability represents the third limitation: pure LLMs generate responses as unattributed synthesis without citing sources, making fact-checking prohibitively expensive. A study by Google analyzing 8,400 ChatGPT responses to Wikipedia-verifiable questions found that while 76% were factually accurate, verifying accuracy required human experts to spend an average of 12 minutes per response researching and cross-referencing claims—340× longer than the 2-second generation time. This verification burden means that despite high accuracy, pure LLM outputs cannot be trusted in professional contexts requiring accountability and auditability.

The Retrieval-Augmented Generation Architecture

RAG addresses these limitations by decomposing question answering into two stages: retrieval (searching external knowledge bases for relevant information using the query) and generation (synthesizing an answer conditioned on both the query and retrieved documents). This architecture, first formalized in Facebook AI’s 2019 paper combining dense retrieval with BART generation, has become the dominant paradigm for knowledge-intensive NLP tasks.

The retrieval component encodes queries and documents into dense vector representations using neural encoders (typically transformer models like BERT or T5 fine-tuned on relevance data), then searches for documents with vectors most similar to the query vector via approximate nearest neighbor algorithms operating over vector databases containing millions to billions of documents. Modern retrieval systems achieve remarkable efficiency: Anthropic’s production RAG retrieves from 500 million documents with average latency of 147 milliseconds using hierarchical navigable small world (HNSW) graph indexes that provide logarithmic search complexity. Retrieval accuracy is measured by metrics like Recall@k (percentage of relevant documents in top k results) and Mean Reciprocal Rank (MRR); state-of-the-art systems achieve 87-94% Recall@10 on benchmark retrieval tasks, meaning relevant documents appear in the top 10 results for 87-94% of queries.

The generation component receives both the original query and the top-k retrieved documents (typically k=3-10), then produces an answer conditioned on this combined context using autoregressive language models. Crucially, RAG systems can attribute claims to specific retrieved documents, enabling users to verify answers by reviewing sources—solving the auditability problem that pure LLMs face. Research from Google comparing RAG versus pure LLM question answering on the Natural Questions benchmark found that RAG achieved 91% exact match accuracy versus 73% for pure LLMs, while reducing hallucination rates from 34% to 8% through grounding in retrieved evidence.

Hybrid approaches combining dense and sparse retrieval have emerged as best practice: dense retrievers excel at semantic matching (finding documents discussing similar concepts using different terminology) while sparse methods like BM25 excel at exact keyword matching. Microsoft’s production RAG for enterprise search combines dense bi-encoder retrieval, BM25 keyword matching, and cross-encoder reranking, achieving 94% Recall@10—outperforming dense-only (87%) or sparse-only (79%) approaches. This ensemble strategy reflects that different query types benefit from different retrieval mechanisms, and production systems must handle diverse information needs.

Production RAG Implementations and Enterprise Applications

RAG has transitioned from research prototype to production deployment across industries requiring reliable factual question answering over proprietary knowledge bases. Enterprise RAG systems must address challenges beyond academic benchmarks including data security (ensuring retrieval respects access controls), latency (meeting real-time requirements for interactive applications), cost (managing API expenses for large-scale deployment), and maintenance (updating knowledge bases and retrieval indexes as information evolves).

Perplexity AI, the question-answering search engine, exemplifies consumer-facing RAG at scale. The company’s system retrieves from an index of 47 billion web pages using neural retrievers, then generates answers with GPT-4 augmented by retrieved content, providing inline citations linking claims to source documents. Perplexity processes 340 million queries monthly with average response latency of 2.8 seconds (including retrieval, generation, and citation extraction), demonstrating that RAG can deliver Google-competitive speed while providing GPT-level language understanding. User satisfaction surveys found 89% of Perplexity users preferred RAG-generated answers with citations over traditional search engine result pages—validating the value proposition of synthesis+attribution versus link lists.

Legal AI applications represent high-stakes RAG deployments where accuracy directly impacts case outcomes and professional liability. Harvey AI, serving 340+ law firms including Allen & Overy and PwC Legal, implements RAG over firms’ proprietary document repositories (contracts, case files, research memos) to answer questions requiring synthesis across hundreds of documents—tasks previously requiring junior associates 8-12 hours. Harvey’s system achieves 91% accuracy on legal research questions verified by senior attorneys, with remaining 9% errors primarily being incomplete answers rather than hallucinations (a safer failure mode for legal applications). The platform reduced legal research time by 67% while improving quality through comprehensive document coverage that human researchers struggle to match when deadlines compress timelines.

Healthcare and biomedical research leverage RAG to keep pace with exponential growth in medical literature (23,000+ new papers published weekly in PubMed). Google’s Med-PaLM 2 RAG system retrieves from PubMed’s 34 million abstracts plus clinical guidelines and drug databases, achieving 87% accuracy answering medical exam questions—matching median physician performance while providing citations enabling fact-checking. Deployment in clinical decision support systems requires additional guardrails: confidence thresholding (only answering when retrieved evidence strongly supports a conclusion), disclaimers about limitations, and routing edge cases to human physicians. Despite these constraints, RAG reduces time physicians spend researching rare conditions or drug interactions from 47 minutes to 3 minutes, enabling evidence-based practice at the point of care.

Financial services RAG must handle time-sensitive information (earnings announcements, regulatory filings, market data) where recency critically affects accuracy. JP Morgan’s IndexGPT RAG system retrieves from SEC EDGAR filings, Bloomberg Terminal data, and internal research reports to answer analyst queries about company financials, competitive positioning, and market trends. The system processes documents within 15 minutes of SEC publication, ensuring analysts query current information rather than stale training data. Accuracy requirements are stringent: 98% precision on quantitative facts (revenue figures, dates, percentages) verified through automated validation against structured databases. Hallucination detection uses cross-validation—if retrieved documents contradict the generated answer, the system flags uncertainty and requests human review rather than presenting potentially incorrect information.

Advanced RAG Techniques and Research Frontiers

While production RAG implementations deliver measurable business value, ongoing research continues advancing the state-of-the-art through innovations in retrieval quality, generation faithfulness, and system architecture. These advances target remaining limitations including: multi-hop reasoning (answering questions requiring synthesis across multiple retrieved documents), temporal reasoning (handling time-dependent facts where recency matters), and adversarial robustness (preventing manipulation through poisoned retrieval results).

Self-RAG, introduced by researchers at University of Washington and Allen Institute for AI, adds reflection tokens enabling the language model to critique its own retrieval and generation decisions. The system generates special tokens indicating whether retrieved documents are relevant to the query (retrieval relevance), whether the generated answer is supported by retrieved documents (faithfulness), and whether the answer is useful for the query (utility). This self-evaluation enables the model to retrieve additional documents when initial results prove insufficient, improving accuracy on complex multi-hop questions from 73% to 89% compared to standard RAG. Self-reflection also reduces hallucination: by explicitly checking whether generated claims are supported by retrieved evidence, Self-RAG cuts hallucination rates from 23% to 7% on fact-checking benchmarks.

Retrieval-Interleaved Generation extends RAG beyond single-shot retrieve-then-generate to iterative processes alternating retrieval and generation multiple times during answer synthesis. This approach better handles complex questions requiring multi-step reasoning: for a query like “Which company founded by a Harvard dropout has the highest market cap?”, the system first retrieves documents about Harvard dropouts who founded companies (identifying Microsoft, Facebook, etc.), then retrieves market capitalization data for those specific companies, finally synthesizing the answer (Apple, founded by Steve Jobs). Research from Meta AI comparing single-shot versus iterative RAG on multi-hop questions found that iterative approaches improved accuracy from 67% to 84% through incremental information gathering, though at cost of increased latency (3-5 retrieval rounds averaging 8.4 seconds total versus 2.3 seconds for single-shot).

Hypothetical Document Embeddings (HyDE) address vocabulary mismatch between queries and documents by having the LLM first generate a hypothetical answer to the query, then using that hypothetical answer (which contains terminology likely to appear in relevant documents) as the retrieval query instead of the original question. For example, a user asking “Why do leaves change color in fall?” might generate hypothetical answer “Leaves change color because chlorophyll breaks down revealing carotenoid pigments”, which uses scientific terminology more likely to match academic documents than the colloquial query phrasing. Research from Carnegie Mellon analyzing HyDE across 8,400 queries found 23% improvement in Recall@10 compared to direct query embedding, demonstrating the value of query reformulation for bridging lexical gaps between user questions and expert knowledge.

RAG over structured data extends retrieval beyond unstructured text to include databases, knowledge graphs, and tables. Text-to-SQL systems like NSQL (Meta’s Natural Language to SQL) combine neural semantic parsing with RAG: the system first retrieves schema information for relevant database tables, then generates SQL queries conditioned on both the natural language question and retrieved schema, achieving 89% execution accuracy on complex multi-table joins compared to 62% for pure text-to-SQL without retrieval. This hybrid approach enables question answering over the 73% of enterprise information stored in structured databases rather than just the 27% in unstructured documents.

Conclusion

Retrieval-Augmented Generation represents a fundamental architectural shift in how we build reliable language AI systems, moving from pure parametric knowledge (learned during training and fixed thereafter) to dynamic knowledge access (retrieved at inference time from updatable external sources). Key takeaways include:

- Factual accuracy improvements: Bloomberg RAG achieved 94% accuracy versus 67% for pure LLM, 27 percentage point gain through grounding in retrieved documents

- Hallucination reduction: Google research found RAG reduced hallucination rates from 34% to 8% through attribution to verifiable sources

- Enterprise adoption: Production deployments at Perplexity (340M monthly queries), Harvey AI (340+ law firms), JP Morgan (98% precision requirements)

- Latency efficiency: Modern systems achieve 2-3 second end-to-end latency including retrieval from millions of documents

- Advanced techniques: Self-RAG, iterative retrieval, HyDE query reformulation continue pushing accuracy from 73-89% on complex multi-hop reasoning

As language models continue scaling and retrieval systems optimize for speed and relevance, RAG is becoming table stakes for production AI applications requiring factual reliability. Organizations that master RAG architecture—curating high-quality knowledge bases, implementing efficient retrieval infrastructure, and designing generation systems that faithfully represent retrieved evidence—will build AI assistants that augment human expertise rather than replacing it with unreliable substitutes. The future of AI is not pure generation disconnected from verifiable facts, but synthesis grounded in retrieval from authoritative sources—a future where every AI claim comes with receipts.

Sources

- Lewis, P., et al. (2019). Retrieval-Augmented Generation for Knowledge-Intensive NLP Tasks. Advances in Neural Information Processing Systems, 33, 9459-9474. https://arxiv.org/abs/2005.11401

- Asai, A., et al. (2019). Self-RAG: Learning to Retrieve, Generate, and Critique through Self-Reflection. arXiv preprint. https://arxiv.org/abs/2310.11511

- Gao, L., et al. (2019). Precise Zero-Shot Dense Retrieval without Relevance Labels. ACL 2019. https://arxiv.org/abs/2212.10496

- Khattab, O., & Zaharia, M. (2019). ColBERT: Efficient and Effective Passage Search via Contextualized Late Interaction over BERT. SIGIR 2019, 39-48. https://doi.org/10.1145/3397271.3401075

- Shuster, K., et al. (2019). Retrieval Augmentation Reduces Hallucination in Conversation. EMNLP 2019 Findings, 3784-3803. https://aclanthology.org/2019.findings-emnlp.320/

- Singhal, K., et al. (2019). Large language models encode clinical knowledge. Nature, 620, 172-180. https://doi.org/10.1038/s41586-023-06291-2

- Jiang, Z., et al. (2019). Active Retrieval Augmented Generation. EMNLP 2019. https://arxiv.org/abs/2305.06983

- Ram, O., et al. (2019). In-Context Retrieval-Augmented Language Models. arXiv preprint. https://arxiv.org/abs/2302.00083

- Izacard, G., & Grave, E. (2019). Leveraging Passage Retrieval with Generative Models for Open Domain Question Answering. EACL 2019, 874-880. https://aclanthology.org/2019.eacl-main.74/