Enterprise Software Architecture Review Framework: A CTO's Guide to Technical Due Diligence

Introduction

Every technology leader eventually faces the architecture review challenge. Perhaps you’ve inherited a system through acquisition. Maybe you’re evaluating vendor solutions. Or you’re assessing whether your own systems can support the next phase of growth. The question is the same: what’s the real state of this architecture, and what risks lurk beneath the surface?

Architecture reviews conducted superficially miss critical issues. Reviews conducted without structure devolve into opinion battles. Reviews focused narrowly on code quality miss systemic problems in operations, security, or scalability.

This framework provides a structured approach to comprehensive architecture review. It draws on a methodology developed through dozens of technical due diligence assessments and post-acquisition architecture evaluations, and it turns subjective impressions into actionable intelligence.

The Architecture Review Framework

Framework Overview

Effective architecture review examines seven interconnected dimensions:

- Scalability and Performance: Can the system handle growth?

- Security Posture: Is the system defensible?

- Operational Maturity: Can the system be maintained reliably?

- Code Quality and Maintainability: Can the system evolve efficiently?

- Technical Debt Assessment: What deferred costs exist?

- Architecture Fitness: Does the architecture serve business needs?

- Team and Knowledge: Can the organisation sustain the system?

Each dimension requires specific investigation techniques, evidence collection, and evaluation criteria. The framework provides both the questions to ask and the methods to validate answers.

Dimension 1: Scalability and Performance

Investigation Focus

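Scalability determines whether the system can grow with business demands. This encompasses both horizontal scaling (adding more instances or nodes) and vertical scaling (adding resources to existing ones), as well as the system's ability to absorb growing data volume and functional complexity.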

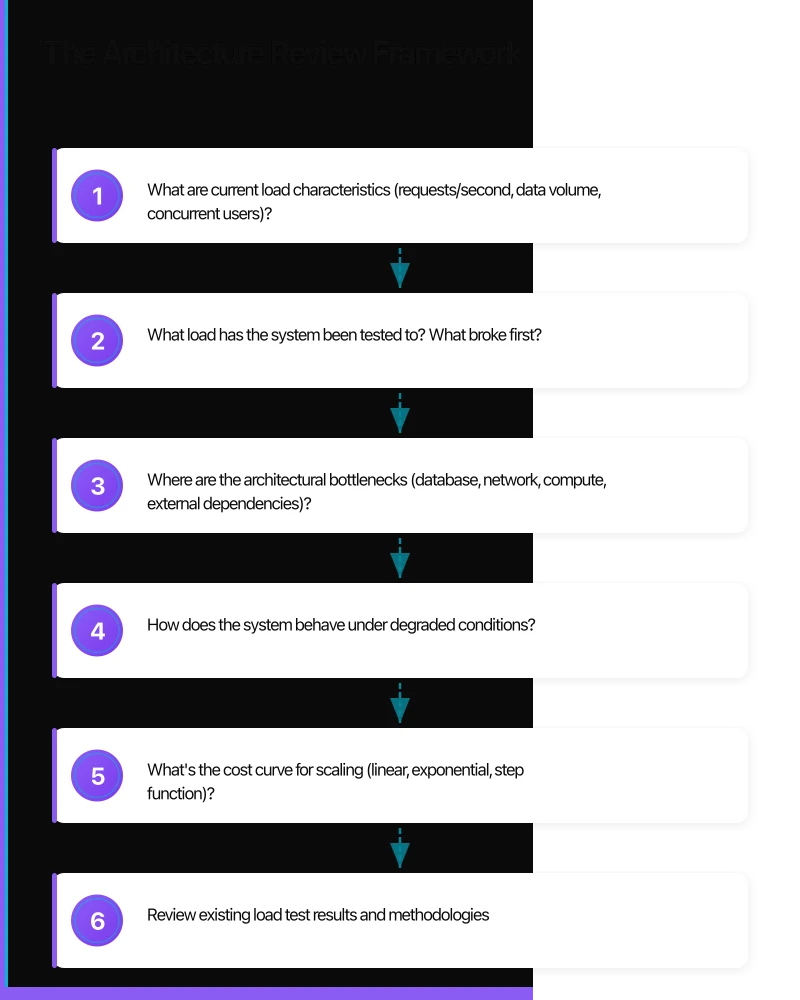

Key Questions

- What are current load characteristics (requests/second, data volume, concurrent users)?

- What load has the system been tested to? What broke first?

- Where are the architectural bottlenecks (database, network, compute, external dependencies)?

- How does the system behave under degraded conditions?

- What’s the cost curve for scaling (linear, exponential, step function)?
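One way to put a number on the cost-curve question is to fit a power law to observed load and cost figures. The sketch below is illustrative only: the function names, the sample observations, and the 0.15 tolerance are assumptions rather than part of the framework. A log-log slope also cannot detect step-function costs, which still need a look at the raw series.

```python
import math


def scaling_exponent(points):
    """Least-squares slope of log(cost) against log(load).

    points: iterable of (load, cost) pairs, all values greater than zero.
    A slope near 1 suggests roughly linear cost; well above 1 suggests
    cost growing faster than load.
    """
    points = list(points)
    if len(points) < 2:
        raise ValueError("need at least two observations")
    xs = [math.log(load) for load, _ in points]
    ys = [math.log(cost) for _, cost in points]
    mean_x = sum(xs) / len(xs)
    mean_y = sum(ys) / len(ys)
    sxx = sum((x - mean_x) ** 2 for x in xs)
    if sxx == 0:
        raise ValueError("load values must differ")
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


def describe_cost_curve(points, tolerance=0.15):
    """Label the curve using an assumed tolerance band around a slope of 1."""
    exponent = scaling_exponent(points)
    if exponent < 1 - tolerance:
        return "sublinear"
    if exponent <= 1 + tolerance:
        return "roughly linear"
    return "superlinear"


# Hypothetical observations: (peak requests/second, monthly infrastructure cost)
observations = [(100, 1_000), (200, 2_100), (400, 4_300), (800, 9_000)]
print(describe_cost_curve(observations))
```

A slope well above 1 is a prompt to ask which component drives the extra cost, not a verdict in itself.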

Evidence Collection Methods

Load Testing Analysis

- Review existing load test results and methodologies

- Identify maximum tested throughput and failure points

- Assess load test environment fidelity to production

Architecture Topology Mapping

- Document all system components and their scaling characteristics

- Identify stateful components that resist horizontal scaling

- Map data flows and potential bottleneck points

Production Metrics Analysis

- Examine historical resource utilisation (CPU, memory, I/O, network)

- Identify patterns suggesting capacity constraints

- Review incident history related to capacity

Database Scalability Assessment

- Evaluate database architecture (single instance, replicas, sharding)

- Review query performance and indexing strategies

- Assess data growth projections against storage architecture
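To make the growth-projection check concrete, a reviewer can estimate how many months of storage runway remain. This is a minimal sketch that assumes steady compound monthly growth; the function name and the figures in the usage line are hypothetical.

```python
import math


def months_until_full(current_gb, capacity_gb, monthly_growth_rate):
    """Months until current_gb * (1 + rate) ** months reaches capacity_gb.

    Assumes steady compound growth, which real systems rarely follow exactly.
    """
    if current_gb <= 0 or capacity_gb <= 0:
        raise ValueError("sizes must be positive")
    if current_gb >= capacity_gb:
        return 0
    if monthly_growth_rate <= 0:
        return math.inf  # no growth means capacity is never reached
    months = math.log(capacity_gb / current_gb) / math.log(1 + monthly_growth_rate)
    return math.ceil(months)


# Hypothetical figures: 500 GB today, 2 TB provisioned, 10% growth per month
print(months_until_full(500, 2000, 0.10))
```

Comparing the result with the lead time needed to re-architect storage (for example, introducing sharding) shows whether the projection is a planning item or an urgent finding.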

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Proven scaling to 10x current load, automated scaling, linear cost curve |
| Adequate | Tested to 3x current load, manual scaling procedures, known bottlenecks with remediation plans |
| Concerning | No load testing, single points of failure, exponential cost scaling |
| Critical | Current load causing issues, no scaling path, fundamental architecture constraints |

Dimension 2: Security Posture

Investigation Focus

Security assessment examines both the defensive architecture and the organisation’s security practices. Technical controls mean little without operational discipline.

Key Questions

- What’s the authentication and authorisation architecture?

- How is sensitive data protected (at rest, in transit, in use)?

- What’s the vulnerability management program maturity?

- How would an attacker move laterally after initial compromise?

- What security incidents have occurred, and what was learned?

Evidence Collection Methods

Architecture Security Review

- Map network segmentation and trust boundaries

- Review authentication mechanisms and identity federation

- Assess encryption implementation (protocols, key management)

- Evaluate API security controls

Vulnerability Assessment

- Review recent penetration test and vulnerability scan results

- Assess remediation timelines for identified vulnerabilities

- Examine patch management processes and currency
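Remediation timelines are easier to judge once they are summarised by severity. The sketch below assumes findings can be exported with a severity, an opened date, and an optional closed date. The `Finding` type and the `SLA_DAYS` targets are hypothetical and should be replaced with the organisation's own policy.

```python
from dataclasses import dataclass
from datetime import date
from statistics import median
from typing import Optional

# Hypothetical remediation targets in days, not an industry standard
SLA_DAYS = {"critical": 7, "high": 30, "medium": 90}


@dataclass
class Finding:
    severity: str
    opened: date
    closed: Optional[date] = None  # None means still open


def remediation_summary(findings, as_of):
    """Median days to fix and count of open findings past target, per severity."""
    summary = {}
    for severity, sla in SLA_DAYS.items():
        group = [f for f in findings if f.severity == severity]
        days_to_fix = [(f.closed - f.opened).days for f in group if f.closed]
        overdue = [
            f for f in group
            if f.closed is None and (as_of - f.opened).days > sla
        ]
        summary[severity] = {
            "median_days_to_fix": median(days_to_fix) if days_to_fix else None,
            "open_past_sla": len(overdue),
        }
    return summary
```

A low median alongside a large number of open findings past target usually means easy issues are fixed quickly while hard ones linger.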

Access Control Audit

- Review privileged access management

- Assess service account practices

- Evaluate secrets management implementation

Security Operations Review

- Examine security monitoring and alerting capabilities

- Review incident response procedures and history

- Assess security awareness and training programs

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Defence in depth, regular pen testing, rapid remediation, mature security operations |
| Adequate | Basic controls in place, periodic testing, reasonable remediation timelines |
| Concerning | Significant control gaps, infrequent testing, slow remediation |
| Critical | Fundamental security flaws, no testing program, active vulnerabilities |

Dimension 3: Operational Maturity

Investigation Focus

Operational maturity determines whether the system can be reliably maintained, monitored, and recovered. This is often where acquired systems reveal their true state.

Key Questions

- What’s the monitoring and observability coverage?

- What are deployment frequency and failure rates?

- How long does incident recovery take?

- What documentation exists for operational procedures?

- What’s the on-call burden and escalation effectiveness?

Evidence Collection Methods

Deployment Pipeline Assessment

- Review CI/CD architecture and automation coverage

- Examine deployment frequency, lead time, and rollback capability

- Assess environment parity (dev, staging, production)
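Deployment frequency and failure rate can usually be derived from pipeline history. The sketch below assumes each deployment record carries a date and a flag for whether it needed a rollback or hotfix. The `Deployment` type and that definition of failure are assumptions for illustration.

```python
from dataclasses import dataclass
from datetime import date


@dataclass
class Deployment:
    day: date
    failed: bool  # assumed definition: required a rollback or hotfix


def deployment_stats(deployments):
    """Deployments per week and share of failed deployments over the observed span."""
    if not deployments:
        raise ValueError("no deployments to analyse")
    first = min(d.day for d in deployments)
    last = max(d.day for d in deployments)
    weeks = ((last - first).days + 1) / 7
    failures = sum(1 for d in deployments if d.failed)
    return {
        "per_week": len(deployments) / weeks,
        "change_failure_rate": failures / len(deployments),
    }
```

Short observation windows make both numbers noisy, so several months of history give a more reliable picture.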

Observability Evaluation

- Inventory monitoring coverage (infrastructure, application, business metrics)

- Review alerting configuration and noise levels

- Assess distributed tracing and log aggregation capabilities

Incident Management Review

- Analyse incident history (frequency, severity, duration)

- Review post-incident analysis quality and follow-through

- Examine on-call rotations and escalation procedures
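Incident duration is simple to summarise once detection and resolution timestamps are available. This sketch assumes incidents are supplied as (detected, resolved) pairs. The function name is hypothetical, and the median is reported next to the mean because a single long outage can dominate the average.

```python
from datetime import datetime, timedelta
from statistics import median


def recovery_stats(incidents):
    """incidents: list of (detected_at, resolved_at) datetime pairs."""
    durations = [resolved - detected for detected, resolved in incidents]
    if not durations:
        return None
    return {
        "mean": sum(durations, timedelta()) / len(durations),  # MTTR
        "median": median(durations),
        "longest": max(durations),
    }


# Hypothetical incident history
history = [
    (datetime(2024, 3, 1, 10, 0), datetime(2024, 3, 1, 10, 30)),
    (datetime(2024, 3, 5, 2, 0), datetime(2024, 3, 5, 3, 30)),
    (datetime(2024, 3, 9, 14, 0), datetime(2024, 3, 9, 17, 0)),
]
print(recovery_stats(history))
```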

Disaster Recovery Assessment

- Review backup strategies and tested recovery procedures

- Assess recovery point objectives (RPO) and recovery time objectives (RTO)

- Examine business continuity planning
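The RPO and RTO checks above reduce to two comparisons. The sketch below treats worst-case data loss as roughly one backup interval and uses the duration of the last tested restore as evidence for recovery time. Both simplifications, and the function name, are assumptions for illustration.

```python
from datetime import timedelta


def dr_gaps(backup_interval, last_restore_test, rpo, rto):
    """Return a list of plain-language gaps between stated objectives and evidence.

    last_restore_test: duration of the most recent tested restore, or None
    if no restore has ever been tested.
    """
    gaps = []
    if backup_interval > rpo:
        gaps.append(
            f"backups every {backup_interval} cannot meet an RPO of {rpo}"
        )
    if last_restore_test is None:
        gaps.append("no tested restore, so the RTO is unverified")
    elif last_restore_test > rto:
        gaps.append(
            f"last tested restore took {last_restore_test}, above the RTO of {rto}"
        )
    return gaps


# Hypothetical case: nightly backups against a 4-hour RPO
print(dr_gaps(timedelta(hours=24), timedelta(hours=6),
              rpo=timedelta(hours=4), rto=timedelta(hours=8)))
```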

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Full observability, automated deployments, MTTR under 1 hour, documented procedures |
| Adequate | Good monitoring coverage, semi-automated deployments, reasonable MTTR |
| Concerning | Monitoring gaps, manual deployments, extended outages, sparse documentation |
| Critical | Blind spots in production, deployments fail frequently, no recovery procedures |

Dimension 4: Code Quality and Maintainability

Investigation Focus

Code quality affects velocity of change, defect rates, and long-term maintainability. This dimension examines both current state and trajectory.

Key Questions

- What’s the test coverage and test quality?

- How well-structured is the codebase (modularity, coupling, cohesion)?

- What coding standards exist and are they enforced?

- How difficult is it to onboard new developers?

- What’s the defect rate and defect resolution time?

Evidence Collection Methods

Static Analysis Review

- Run code quality tools (SonarQube, CodeClimate, language-specific linters)

- Assess code complexity metrics (cyclomatic complexity, cognitive complexity)

- Review duplication and code smell indicators

Test Suite Evaluation

- Measure test coverage (line, branch, mutation testing where available)

- Assess test quality (not just quantity)

- Review test execution time and flakiness
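Flakiness can be estimated from CI history without rerunning anything. The sketch below uses one possible working definition, which is an assumption: a test is flaky if it both passed and failed on the same commit. The tuple layout of `runs` is likewise hypothetical.

```python
from collections import defaultdict


def flaky_tests(runs):
    """runs: iterable of (commit_sha, test_name, passed) tuples from CI history.

    Returns the sorted names of tests with mixed outcomes on a single commit.
    """
    outcomes = defaultdict(set)
    for commit, test, passed in runs:
        outcomes[(commit, test)].add(bool(passed))
    return sorted({test for (_, test), seen in outcomes.items() if len(seen) == 2})
```

A test that fails on one commit and passes on another is not counted, because the code change itself may explain the difference.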

Architecture Coherence Assessment

- Evaluate module boundaries and dependency structure

- Identify architectural drift from intended design

- Assess consistency across codebase
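Circular dependencies between modules are a common, checkable sign of eroded boundaries. The sketch below runs a depth-first search over a module dependency map and returns the first cycle it finds. The map format and the module names are hypothetical, and extracting the map from a real codebase is left to language-specific tooling.

```python
def find_cycle(deps):
    """deps: dict mapping each module to the modules it depends on.

    Returns one dependency cycle as a list (first module repeated at the end),
    or None if the graph is acyclic. Uses recursion, so very deep graphs may
    need an iterative variant.
    """
    unvisited, in_progress, done = 0, 1, 2
    state = {}
    path = []

    def visit(module):
        state[module] = in_progress
        path.append(module)
        for target in deps.get(module, ()):
            target_state = state.get(target, unvisited)
            if target_state == in_progress:
                # target is already on the current path: cycle found
                return path[path.index(target):] + [target]
            if target_state == unvisited:
                cycle = visit(target)
                if cycle:
                    return cycle
        path.pop()
        state[module] = done
        return None

    for module in deps:
        if state.get(module, unvisited) == unvisited:
            cycle = visit(module)
            if cycle:
                return cycle
    return None


# Hypothetical module map
modules = {
    "billing": ["orders"],
    "orders": ["customers"],
    "customers": ["billing"],
    "reports": ["orders"],
}
print(find_cycle(modules))
```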

Developer Experience Assessment

- Review onboarding documentation and processes

- Assess local development environment setup complexity

- Interview developers on productivity impediments

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | >80% meaningful coverage, low complexity, consistent standards, easy onboarding |
| Adequate | >60% coverage, manageable complexity, documented standards |
| Concerning | <40% coverage, high complexity, inconsistent patterns |
| Critical | Minimal testing, incomprehensible code, no standards, hostile to change |

Dimension 5: Technical Debt Assessment

Investigation Focus

Technical debt represents deferred costs that will eventually require payment. Understanding debt magnitude, interest rate, and repayment options is crucial for planning.

Key Questions

- What known technical debt exists and why was it incurred?

- What’s the carrying cost of current debt (velocity impact, incident frequency)?

- What debt is strategic (acceptable trade-offs) versus accidental (poor decisions)?

- What debt remediation is in progress or planned?

- What’s the debt trajectory (increasing, stable, decreasing)?

Evidence Collection Methods

Debt Inventory Development

- Catalogue known technical debt items with team input

- Estimate remediation effort for each item

- Assess business impact and carrying cost

Velocity Analysis

- Compare current velocity to historical or industry benchmarks

- Identify velocity trends over time

- Correlate velocity changes with debt accumulation

Incident Correlation

- Map incidents to underlying technical debt

- Calculate incident costs attributable to debt

- Identify debt items causing recurring incidents
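Once remediation effort and carrying cost have been estimated, debt items can be ranked by how quickly a fix pays for itself. The sketch below is one possible heuristic, not a prescribed method. The `DebtItem` fields, the engineer-day units, and the payback calculation are assumptions.

```python
from dataclasses import dataclass


@dataclass
class DebtItem:
    name: str
    remediation_days: float           # estimated engineer-days to fix
    carrying_days_per_quarter: float  # engineer-days lost to workarounds and incidents

    @property
    def payback_quarters(self):
        """Quarters until the fix has repaid its own cost."""
        if self.carrying_days_per_quarter <= 0:
            return float("inf")
        return self.remediation_days / self.carrying_days_per_quarter


def prioritise(items):
    """Shortest payback first."""
    return sorted(items, key=lambda item: item.payback_quarters)
```

Items with an infinite payback are candidates for the "strategic debt" category: known, accepted, and not worth fixing on cost grounds alone.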

Dependency Analysis

- Review third-party dependency currency

- Identify end-of-life or unsupported dependencies

- Assess upgrade path complexity
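Dependency currency can be triaged by comparing each dependency's end-of-life date with the review date. This sketch assumes those dates have already been collected from vendor or project announcements. The function name, the 180-day warning window, and the dependency names in the usage example are hypothetical.

```python
from datetime import date, timedelta


def classify_dependencies(eol_dates, today, warning_window=timedelta(days=180)):
    """eol_dates: dict of dependency name -> end-of-life date."""
    report = {"unsupported": [], "expiring_soon": [], "supported": []}
    for name, eol in sorted(eol_dates.items()):
        if eol <= today:
            report["unsupported"].append(name)
        elif eol - today <= warning_window:
            report["expiring_soon"].append(name)
        else:
            report["supported"].append(name)
    return report


# Placeholder names and dates, not real products
inventory = {
    "framework-x 2.x": date(2023, 6, 30),
    "runtime-y 11": date(2024, 9, 30),
    "database-z 15": date(2027, 11, 1),
}
print(classify_dependencies(inventory, today=date(2024, 6, 1)))
```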

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Debt inventory maintained, carrying cost understood, active remediation, decreasing trajectory |
| Adequate | Major debt items known, some remediation, stable trajectory |
| Concerning | Significant unknown debt, high carrying cost, increasing trajectory |
| Critical | Overwhelming debt, velocity approaching zero, debt compounds faster than remediation |

Dimension 6: Architecture Fitness

Investigation Focus

Architecture fitness examines whether the technical architecture serves current and future business needs. A well-engineered system that doesn’t support business evolution has limited value.

Key Questions

- Does the architecture support current business processes efficiently?

- Can the architecture accommodate planned business evolution?

- Are there architectural constraints limiting business options?

- How well does the architecture handle cross-cutting concerns?

- Is the architecture appropriately complex for the problem domain?

Evidence Collection Methods

Business Alignment Assessment

- Map business capabilities to technical components

- Identify business processes constrained by architecture

- Assess technical blockers for planned initiatives

Evolutionary Capability Evaluation

- Review architecture’s adaptability to change

- Assess integration capabilities for acquisitions or partnerships

- Evaluate multi-tenancy, internationalisation, and other expansion requirements

Cross-Cutting Concerns Review

- Evaluate how security, logging, and monitoring are implemented

- Assess configuration management and feature flagging

- Review error handling and resilience patterns

Complexity Assessment

- Compare architecture complexity to problem complexity

- Identify over-engineering or under-engineering

- Evaluate technology choices for appropriateness

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Architecture enables business agility, supports planned evolution, appropriate complexity |
| Adequate | Architecture supports current needs, reasonable evolution path exists |
| Concerning | Architecture constrains business options, significant rework needed for evolution |
| Critical | Architecture actively impedes business, fundamental redesign required |

Dimension 7: Team and Knowledge

Investigation Focus

Technology is sustained by people. Assessing team capability, knowledge distribution, and organisational health predicts long-term sustainability.

Key Questions

- What’s the team’s capability relative to the technology stack?

- How is knowledge distributed (key person dependencies)?

- What’s the team’s capacity for learning and adaptation?

- How healthy is the development culture (collaboration, quality focus, ownership)?

- What’s the retention situation and risk?

Evidence Collection Methods

Capability Assessment

- Inventory team skills against technology requirements

- Identify capability gaps requiring hiring or training

- Assess learning velocity and technical curiosity

Knowledge Distribution Mapping

- Identify key person dependencies for critical systems

- Review documentation completeness for knowledge transfer

- Assess bus factor for critical components
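A rough bus factor for a component can be estimated from version-control history. The sketch below counts the smallest number of authors who together account for more than half of the commits touching a component. Commit counts are only a proxy for knowledge, and the 50% threshold and function name are assumptions.

```python
from collections import Counter


def bus_factor(commit_authors, threshold=0.5):
    """commit_authors: one author name per commit touching the component.

    Returns the smallest number of top contributors whose combined share of
    commits exceeds the threshold.
    """
    counts = Counter(commit_authors)
    total = sum(counts.values())
    if total == 0:
        return 0
    covered = 0
    for rank, (_, commits) in enumerate(counts.most_common(), start=1):
        covered += commits
        if covered / total > threshold:
            return rank
    return len(counts)
```

A result of 1 for a critical component is worth confirming in interviews, since the history may hide pairing, reviews, or knowledge held by people who no longer commit.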

Culture Evaluation

- Conduct confidential team interviews

- Review collaboration patterns and communication health

- Assess psychological safety and quality culture

Retention Risk Assessment

- Review tenure distribution and recent turnover

- Assess compensation competitiveness

- Identify flight risk for critical personnel

Evaluation Criteria

| Rating | Criteria |
|---|---|
| Strong | Deep capability, distributed knowledge, healthy culture, stable team |
| Adequate | Sufficient capability, reasonable knowledge sharing, functional culture |
| Concerning | Capability gaps, key person dependencies, cultural issues |
| Critical | Inadequate capability, single points of knowledge failure, toxic culture, imminent departures |

Conducting the Review

Preparation Phase

Scope Definition

Define review boundaries clearly:

- Which systems are in scope?

- What dimensions are priorities?

- What decisions will the review inform?

- Who are the stakeholders for findings?

Information Request

Request materials before beginning:

- Architecture documentation and diagrams

- Recent incident reports and post-mortems

- Test coverage and quality reports

- Deployment and release documentation

- Access to code repositories

- Monitoring dashboards and historical metrics

Interview Scheduling

Plan interviews across roles:

- Technical leadership (architecture decisions, strategic context)

- Senior engineers (implementation details, technical debt)

- Operations team (production reality, incident patterns)

- Product team (business alignment, roadmap constraints)

Execution Phase

Document Review (Days 1-2)

- Analyse provided documentation

- Identify gaps and inconsistencies

- Develop targeted questions for interviews

Technical Analysis (Days 3-5)

- Run automated analysis tools

- Examine code and configuration

- Review monitoring and metrics

- Analyse deployment pipelines

Interview Execution (Days 4-7)

- Conduct structured interviews

- Probe for consistency with documentation

- Listen for what’s unsaid

Synthesis (Days 8-10)

- Correlate findings across dimensions

- Identify patterns and themes

- Develop risk ratings and recommendations

Reporting Phase

Executive Summary

Provide high-level findings for leadership:

- Overall architecture health rating

- Critical risks requiring immediate attention

- Strategic recommendations

- Investment requirements
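The four-level scale used in the evaluation tables can be rolled up into an overall health rating. The framework does not prescribe an aggregation rule, so the sketch below assumes a conservative one: the overall rating equals the weakest dimension. The `Rating` enum and function names are hypothetical.

```python
from enum import IntEnum


class Rating(IntEnum):
    CRITICAL = 1
    CONCERNING = 2
    ADEQUATE = 3
    STRONG = 4


def overall_rating(ratings):
    """ratings: dict of dimension name -> Rating. Assumed rule: weakest link wins."""
    if not ratings:
        raise ValueError("no dimensions rated")
    return min(ratings.values())


def critical_dimensions(ratings):
    """Dimensions that belong in the 'immediate attention' list."""
    return sorted(name for name, r in ratings.items() if r == Rating.CRITICAL)


# Hypothetical review outcome
review = {
    "Scalability and Performance": Rating.ADEQUATE,
    "Security Posture": Rating.CONCERNING,
    "Operational Maturity": Rating.STRONG,
}
print(overall_rating(review).name)
```

A weakest-link rule is deliberately pessimistic. A weighted scheme may suit reviews where some dimensions were scoped as lower priority.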

Detailed Findings

Document specifics for technical teams:

- Dimension-by-dimension assessment

- Evidence supporting each finding

- Specific remediation recommendations

- Prioritised action items

Risk Register

Catalogue identified risks:

- Risk description and potential impact

- Likelihood assessment

- Mitigation recommendations

- Monitoring indicators
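A risk register with the four fields above fits a small data structure. The sketch below adds a likelihood-times-impact score for ordering. The 1 to 5 scales, the scoring rule, and the `Risk` type are common conventions assumed here for illustration, not requirements of the framework.

```python
from dataclasses import dataclass, field


@dataclass
class Risk:
    description: str
    likelihood: int  # assumed scale: 1 (rare) to 5 (almost certain)
    impact: int      # assumed scale: 1 (minor) to 5 (severe)
    mitigation: str
    indicators: list = field(default_factory=list)  # signals to monitor

    def __post_init__(self):
        for name in ("likelihood", "impact"):
            value = getattr(self, name)
            if not 1 <= value <= 5:
                raise ValueError(f"{name} must be between 1 and 5")

    @property
    def score(self):
        return self.likelihood * self.impact


def top_risks(register, limit=5):
    """Highest-scoring risks first; ties keep their original order."""
    return sorted(register, key=lambda risk: risk.score, reverse=True)[:limit]
```

Scores are an ordering aid only. Two risks with the same score can warrant very different responses, so the description and mitigation fields carry the real content.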

Common Patterns and Pitfalls

Patterns Indicating Strong Architecture

- Consistent approaches across the codebase

- Clear module boundaries with minimal coupling

- Comprehensive test coverage with fast feedback

- Automated deployment with high success rates

- Distributed knowledge with good documentation

- Active technical debt management

- Security integrated throughout development

Patterns Indicating Architectural Risk

- Inconsistent patterns suggesting multiple visions

- Monolithic structure resistant to change

- Test suite that developers don’t trust

- Manual deployments with frequent failures

- Key person dependencies for critical systems

- Growing technical debt backlog, with “tech debt sprints” that never happen

- Security as an afterthought

Review Pitfalls to Avoid

Superficial Analysis: Don’t accept documentation at face value. Validate claims with evidence.

Technology Bias: Don’t judge architecture by technology choices alone. Appropriate boring technology often beats inappropriate modern technology.

Recency Bias: Don’t over-weight recent incidents or successes. Look for patterns over time.

Scope Creep: Don’t expand review scope mid-assessment. Note additional concerns for future review.

Confirmation Bias: Don’t let initial impressions colour evidence gathering. Seek disconfirming evidence.

Post-Review Actions

Immediate Actions (Week 1)

- Brief stakeholders on critical findings

- Address any security vulnerabilities requiring immediate action

- Establish monitoring for identified risks

Short-Term Actions (Months 1-3)

- Develop remediation roadmap for concerning findings

- Begin addressing highest-priority technical debt

- Implement improved processes where gaps exist

Long-Term Actions (Months 3-12)

- Execute strategic architecture improvements

- Build team capabilities where gaps exist

- Establish ongoing architecture governance

Conclusion

Architecture review is not a one-time event but a capability that improves with practice. This framework provides structure, but effective review requires judgment honed through experience.

The most valuable architecture reviews balance thoroughness with pragmatism. Not every concern requires remediation. Not every risk materialises. The art lies in identifying what matters most for the decisions at hand.

Whether evaluating an acquisition target, assessing inherited systems, or auditing your own architecture, systematic review transforms uncertainty into actionable intelligence. The investment in rigorous assessment pays dividends in avoided surprises and informed decisions.

Architecture debt, like financial debt, compounds over time. Regular assessment ensures you understand your position before the interest overwhelms the principal.

