Mitigating Potential Harms of Custom LLM Models: A Comprehensive Approach

Introduction

Custom LLM development requires careful attention to potential harms. This guide outlines a comprehensive mitigation approach.

Understanding Potential Harms

Direct Harms

- Generating harmful content

- Providing dangerous instructions

- Spreading misinformation

- Privacy violations

Indirect Harms

- Bias amplification

- Job displacement

- Manipulation and deception

- Dependency issues

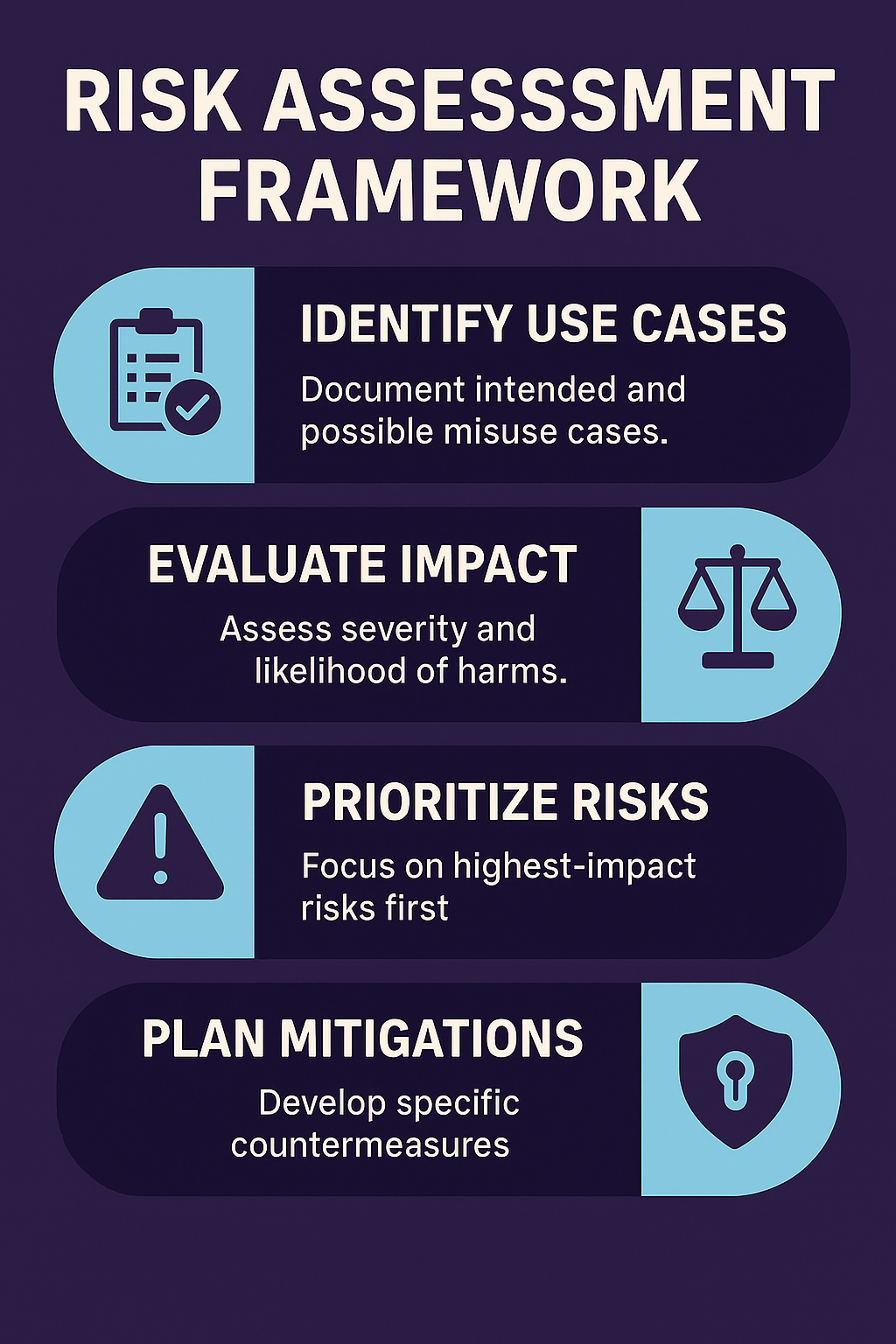

Risk Assessment Framework

Identify Use Cases

Document intended and possible misuse cases.

Evaluate Impact

Assess severity and likelihood of harms.

Prioritize Risks

Focus on highest-impact risks first.

Plan Mitigations

Develop specific countermeasures.

Technical Mitigations

Training Data Curation

- Filter harmful content

- Balance datasets

- Document data sources

Output Filtering

- Safety classifiers

- Content moderation

- Response constraints

Monitoring

- Usage logging

- Anomaly detection

- Feedback loops

Process Mitigations

Red Teaming

Deliberately test for vulnerabilities.

Human Oversight

Appropriate human involvement in decisions.

Incident Response

Plans for addressing problems.

Continuous Improvement

Learn from issues and update.

Governance Framework

Policies

Clear guidelines for development and use.

Accountability

Defined responsibilities.

Documentation

Thorough records of decisions.

External Review

Independent assessment of systems.

Best Practices

- Build safety into development from the start

- Test extensively before deployment

- Monitor continuously in production

- Respond quickly to issues

- Be transparent about limitations

Conclusion

Responsible LLM development requires proactive identification and mitigation of potential harms throughout the lifecycle.

Learn more about AI safety practices.