AI Model Governance: Enterprise MLOps for Responsible AI at Scale

Enterprise AI adoption has reached an inflection point where governance can no longer be an afterthought. Organizations operating dozens or hundreds of machine learning models face compounding risks: models drifting from intended behavior, biased outcomes affecting customers, regulatory non-compliance, and operational failures affecting business-critical processes.

For enterprise CTOs, AI model governance has become a strategic imperative. The organizations succeeding with AI at scale are those investing in governance frameworks that enable responsible deployment while maintaining innovation velocity. Those treating governance as bureaucratic overhead face mounting risks that will eventually materialize as regulatory action, reputational damage, or operational failures.

The Governance Imperative

Several converging forces make AI model governance urgent in 2025.

Regulatory Pressure

The regulatory landscape for AI has intensified significantly:

EU AI Act: The world’s first comprehensive AI regulation, entering application phases through 2025-2027, establishes requirements for high-risk AI systems including conformity assessments, risk management systems, and human oversight.

Australian AI Governance: The voluntary AI Ethics Framework is evolving toward mandatory requirements, with the Australian government consulting on regulatory options for high-risk AI applications.

Sector-Specific Regulations: Financial services, healthcare, and other regulated industries face AI-specific requirements from existing regulators. APRA’s guidance on AI and machine learning in banking, for instance, extends prudential requirements to AI systems.

US Federal AI Policy: Although Executive Order 14110 on AI was rescinded in January 2025, federal procurement requirements and agency guidelines still impose AI governance expectations on vendors serving government markets.

Organizations operating globally must navigate varying requirements across jurisdictions, necessitating governance frameworks flexible enough to accommodate diverse compliance obligations.

Operational Reality

Beyond regulation, operational experience reveals governance necessities:

Model Degradation: Production models degrade as data distributions shift. Without monitoring, models make increasingly poor predictions while appearing to function normally.

Incident Response: When models fail, organizations need audit trails, rollback capabilities, and clear accountability. Ad-hoc model management makes incident response chaotic.

Scaling Challenges: Practices sufficient for a few experimental models collapse under hundreds of production models. Governance frameworks enable scale.

Knowledge Management: Data scientists leave, teams reorganize, priorities shift. Institutional knowledge about model intent, training data, and deployment context must survive personnel changes.

Reputational Risk

High-profile AI failures demonstrate reputational stakes:

- Biased hiring algorithms discriminating against protected groups

- Credit scoring models perpetuating historical discrimination

- Recommendation systems amplifying harmful content

- Customer service chatbots providing dangerous advice

Each incident erodes public trust and invites regulatory scrutiny. Proactive governance reduces failure probability and demonstrates responsible practice.

Governance Framework Components

Effective AI governance integrates multiple components addressing different aspects of the model lifecycle.

Model Inventory and Classification

Organizations cannot govern what they cannot see. Model inventory establishes visibility:

Model Registry: Centralized catalog of all models in development, staging, and production. Metadata including model purpose, owner, deployment location, and status.

Classification Scheme: Risk-based classification determining governance rigor. High-risk models (affecting credit decisions, health outcomes, employment) warrant stricter oversight than low-risk models (product recommendations, internal analytics).

Lineage Tracking: Connections from models to training data, feature engineering pipelines, and parent models. Understanding provenance enables impact assessment and compliance demonstration.

Classification schemes should align with regulatory definitions where applicable. The EU AI Act’s risk categories provide a useful starting framework:

- Unacceptable Risk: Prohibited applications (social scoring, real-time remote biometric identification in public spaces for law enforcement, subject to narrow exceptions)

- High Risk: Stringent requirements (employment, credit, education, law enforcement)

- Limited Risk: Transparency requirements (chatbots, emotion recognition)

- Minimal Risk: No specific requirements (spam filters, game AI)
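A risk-based classification scheme like the one above can be encoded as a simple lookup so that every inventoried model carries a tier. The sketch below is illustrative only: `RiskTier`, `DOMAIN_TIERS`, and `classify` are hypothetical names, and the domain-to-tier assignments simply mirror the examples in the list rather than a legal reading of the EU AI Act.

```python
from enum import Enum


class RiskTier(Enum):
    UNACCEPTABLE = "unacceptable"
    HIGH = "high"
    LIMITED = "limited"
    MINIMAL = "minimal"


# Hypothetical mapping that mirrors the examples in the list above.
# A real scheme would be maintained with legal and compliance input.
DOMAIN_TIERS = {
    "social_scoring": RiskTier.UNACCEPTABLE,
    "employment": RiskTier.HIGH,
    "credit": RiskTier.HIGH,
    "education": RiskTier.HIGH,
    "law_enforcement": RiskTier.HIGH,
    "chatbot": RiskTier.LIMITED,
    "spam_filter": RiskTier.MINIMAL,
    "game_ai": RiskTier.MINIMAL,
}


def classify(domain: str) -> RiskTier:
    """Return the tier for a domain.

    Unknown domains default to HIGH so that unclassified models are
    reviewed rather than waved through (an assumed policy choice).
    """
    return DOMAIN_TIERS.get(domain, RiskTier.HIGH)
```

Defaulting unknown domains to the high tier is a conservative design choice; an organization could equally require explicit classification before a model is registered at all.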

Development Standards

Governance begins in model development:

Data Governance Integration: Training data must meet quality, provenance, and consent requirements. Governance frameworks connect to enterprise data governance, ensuring models use only appropriate data.

Bias Assessment: Systematic evaluation for demographic and other biases during development. Testing across protected attributes, intersectional analysis, and documentation of assessment methodology.

Documentation Requirements: Model cards or similar documentation capturing model purpose, intended use, limitations, training data characteristics, performance metrics, and fairness assessments.

Peer Review: Mandatory review of high-risk models before deployment. Review criteria addressing technical quality, business appropriateness, and ethical considerations.
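To make the bias assessment step more concrete, the following sketch computes two commonly used group fairness measures from binary predictions: the difference and the ratio of selection rates across groups. The choice of metrics, the function names, and the sample data are assumptions for illustration; the appropriate metrics and thresholds depend on the use case and applicable law.

```python
from collections import defaultdict


def selection_rates(predictions, groups):
    """Fraction of positive (1) predictions for each group."""
    totals = defaultdict(int)
    positives = defaultdict(int)
    for pred, group in zip(predictions, groups):
        totals[group] += 1
        positives[group] += int(pred == 1)
    return {g: positives[g] / totals[g] for g in totals}


def demographic_parity_difference(predictions, groups):
    """Gap between the highest and lowest group selection rates."""
    rates = selection_rates(predictions, groups)
    return max(rates.values()) - min(rates.values())


def disparate_impact_ratio(predictions, groups):
    """Lowest group selection rate divided by the highest."""
    rates = selection_rates(predictions, groups)
    highest = max(rates.values())
    return min(rates.values()) / highest if highest > 0 else 1.0


# Made-up example: group "a" is selected 3 times out of 4, group "b" once.
preds = [1, 1, 1, 0, 1, 0, 0, 0]
grps = ["a", "a", "a", "a", "b", "b", "b", "b"]
gap = demographic_parity_difference(preds, grps)
ratio = disparate_impact_ratio(preds, grps)
```

The same functions can be run once per protected attribute, or on combined attribute values for intersectional analysis, with the results recorded in the model documentation.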

Deployment Governance

Moving models to production requires controlled processes:

Approval Workflows: Stage-gate processes appropriate to model risk classification. High-risk models require senior approval and potentially ethics committee review. Lower-risk models follow streamlined processes.

Environment Standards: Production environments meeting security, reliability, and monitoring requirements. Preventing deployment of models to inadequate infrastructure.

Canary Deployments: Gradual rollout enabling early detection of production issues before full deployment.

Rollback Capability: Ability to quickly revert to previous model versions when problems emerge.
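The stage-gate idea behind approval workflows can be sketched as a check that compares collected sign-offs with those required for a model's risk tier. The tier names, role names, and the `DeploymentRequest` class are hypothetical; a real workflow would usually live in a CI/CD or ticketing system.

```python
from dataclasses import dataclass, field

# Hypothetical policy: sign-offs required for each risk tier.
REQUIRED_APPROVALS = {
    "high": {"model_owner", "risk_management", "ethics_committee"},
    "low": {"model_owner"},
}


@dataclass
class DeploymentRequest:
    model_name: str
    version: str
    risk_tier: str
    approvals: set = field(default_factory=set)

    def missing_approvals(self) -> set:
        """Roles that still need to sign off for this risk tier."""
        return REQUIRED_APPROVALS[self.risk_tier] - self.approvals

    def can_deploy(self) -> bool:
        return not self.missing_approvals()


request = DeploymentRequest("credit-scoring", "2.1.0", "high", {"model_owner"})
blocked_on = request.missing_approvals()
```

A deployment pipeline could call `can_deploy()` as a gate and surface `missing_approvals()` in the failure message, so teams know exactly which approval is outstanding.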

Production Monitoring

Deployed models require ongoing surveillance:

Performance Monitoring: Tracking model accuracy, precision, recall, and business-relevant metrics against baselines. Detecting degradation before it significantly impacts outcomes.

Drift Detection: Monitoring input data distributions for drift from training distributions. Feature drift often precedes model performance degradation.

Fairness Monitoring: Continuous assessment of outcomes across demographic groups. Detecting emerging bias even in initially fair models.

Explainability: Generating explanations for individual predictions, particularly for high-stakes decisions. Supporting auditability and customer communication.
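For drift detection, one widely used statistic is the population stability index (PSI), which compares the binned distribution of a feature in production with its distribution in the training data. The sketch below is a minimal pure-Python version under stated assumptions: equal-width bins derived from the baseline sample, non-empty inputs, and a small `eps` floor to avoid taking the logarithm of zero. It is one option among several (statistical tests such as Kolmogorov-Smirnov are another), not a method prescribed by this article.

```python
import math


def population_stability_index(expected, actual, bins=10, eps=1e-6):
    """PSI between a baseline sample and a production sample of one numeric feature."""
    lo, hi = min(expected), max(expected)
    width = (hi - lo) / bins or 1.0  # fall back to 1.0 for a constant baseline

    def bucket_fractions(values):
        counts = [0] * bins
        for v in values:
            idx = int((v - lo) / width)
            idx = min(max(idx, 0), bins - 1)  # clamp values outside the baseline range
            counts[idx] += 1
        # Floor each fraction at eps so empty buckets do not break the log.
        return [max(c / len(values), eps) for c in counts]

    e = bucket_fractions(expected)
    a = bucket_fractions(actual)
    return sum((ai - ei) * math.log(ai / ei) for ei, ai in zip(e, a))


baseline = [i / 100 for i in range(100)]
shifted = [v + 0.5 for v in baseline]
psi_same = population_stability_index(baseline, baseline)
psi_shifted = population_stability_index(baseline, shifted)
```

A common rule of thumb treats PSI values above roughly 0.2 to 0.25 as significant drift, but that cut-off is a convention and should be tuned per feature and per model.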

Incident Management

When problems occur, governance enables effective response:

Alert Thresholds: Clear definitions of conditions triggering investigation and escalation.

Investigation Procedures: Documented processes for root cause analysis including data, code, and configuration review.

Communication Protocols: Templates and chains of communication for internal stakeholders, regulators, and affected parties.

Remediation Tracking: Ensuring identified issues are resolved and preventive measures implemented.
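Alert thresholds are easiest to audit when they are written down as data rather than buried in dashboards. The following sketch assumes a metric where lower values are worse (such as accuracy); the `AlertRule` class, its field names, and the example numbers are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class AlertRule:
    metric: str
    warn_below: float      # open an investigation below this value
    escalate_below: float  # escalate to the model owner below this value


def evaluate(rule: AlertRule, value: float) -> str:
    """Map an observed metric value to an action."""
    if value < rule.escalate_below:
        return "escalate"
    if value < rule.warn_below:
        return "investigate"
    return "ok"


# Illustrative thresholds only; real values come from the model's baseline.
accuracy_rule = AlertRule(metric="accuracy", warn_below=0.90, escalate_below=0.80)
```

Keeping rules in version control alongside the model gives incident reviewers a record of which thresholds were in force when an alert did or did not fire.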

Model Retirement

End-of-life governance often receives insufficient attention:

Deprecation Policies: Clear criteria for model retirement and timelines for dependent systems to migrate.

Archive Requirements: Preserving model artifacts, documentation, and decision logs for compliance and historical analysis.

Data Retention: Managing training data and inference logs according to retention policies and regulatory requirements.

MLOps Infrastructure for Governance

Governance requires enabling infrastructure. MLOps platforms provide technical foundations:

Model Registries

Central repositories storing model artifacts with metadata:

- Model versioning with immutable artifacts

- Metadata including training configuration, metrics, and lineage

- Access controls restricting model deployment to authorized users

- Integration with CI/CD pipelines for automated deployment

MLflow Model Registry, Weights & Biases, and cloud-native options (AWS SageMaker Model Registry, Azure ML Model Registry, Vertex AI Model Registry) provide these capabilities.
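The registry capabilities listed above (immutable versions, attached metadata, deployment access control) can be illustrated with a small in-memory sketch. This is not the API of MLflow or any other product named here; `ModelRegistry`, `ModelVersion`, and their methods are hypothetical and exist only to show the shape of the idea.

```python
import hashlib
from dataclasses import dataclass
from types import MappingProxyType


@dataclass(frozen=True)
class ModelVersion:
    """One immutable registry entry."""
    name: str
    version: int
    artifact_sha256: str
    metadata: MappingProxyType  # read-only view of config, metrics, lineage


class ModelRegistry:
    def __init__(self, authorized_deployers):
        self._versions = {}  # model name -> list of ModelVersion
        self._authorized = set(authorized_deployers)

    def register(self, name: str, artifact: bytes, metadata: dict) -> ModelVersion:
        """Append a new version; existing versions are never overwritten."""
        history = self._versions.setdefault(name, [])
        entry = ModelVersion(
            name=name,
            version=len(history) + 1,
            artifact_sha256=hashlib.sha256(artifact).hexdigest(),
            metadata=MappingProxyType(dict(metadata)),
        )
        history.append(entry)
        return entry

    def get(self, name: str, version: int) -> ModelVersion:
        history = self._versions.get(name, [])
        if not 1 <= version <= len(history):
            raise KeyError(f"{name} v{version} is not registered")
        return history[version - 1]

    def can_deploy(self, user: str, name: str, version: int) -> bool:
        """Only authorized users may deploy, and only registered versions."""
        self.get(name, version)  # raises KeyError for unknown versions
        return user in self._authorized
```

Storing a content hash with each version lets a deployment pipeline verify that the artifact it is about to ship is the one that was reviewed.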

Feature Stores

Centralized feature management supporting governance:

- Feature definitions ensuring consistent use across models

- Feature versioning enabling reproducibility

- Feature lineage tracking provenance to source data

- Access controls enforcing data governance policies

Feast, Tecton, and cloud-native feature stores address these requirements.

Experiment Tracking

Systematic capture of model development:

- Hyperparameter logging for reproducibility

- Metric tracking for comparison

- Artifact storage linking code, data, and model versions

- Collaboration features for team workflows
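One way to link code, data, and configuration for reproducibility is to derive a deterministic fingerprint for each training run. The sketch below is an assumption about how that might look; `run_fingerprint` and its parameters are hypothetical, and experiment tracking tools typically assign their own run identifiers.

```python
import hashlib
import json


def run_fingerprint(params: dict, code_version: str, data_sha256: str) -> str:
    """Hash of everything that should determine the trained model.

    Keys are sorted so that the same hyperparameters always produce
    the same fingerprint regardless of dictionary ordering.
    """
    payload = json.dumps(
        {"params": params, "code": code_version, "data": data_sha256},
        sort_keys=True,
    )
    return hashlib.sha256(payload.encode("utf-8")).hexdigest()


# Placeholder values for illustration.
fp_a = run_fingerprint({"lr": 0.01, "depth": 6}, "abc123", "d" * 64)
fp_b = run_fingerprint({"depth": 6, "lr": 0.01}, "abc123", "d" * 64)
fp_c = run_fingerprint({"lr": 0.02, "depth": 6}, "abc123", "d" * 64)
```

Two runs with the same fingerprint should be reproducible from the same inputs, which makes unexplained differences in their metrics a useful signal during review.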

Monitoring Platforms

Production observability infrastructure:

- Real-time metric collection and visualization

- Anomaly detection for automated alerting

- Dashboards for operational visibility

- Integration with incident management systems

Purpose-built ML monitoring (Arize, Fiddler, WhyLabs) complements general observability platforms.

Orchestration Systems

Workflow automation enabling consistent processes:

- Training pipelines ensuring reproducible model creation

- Deployment pipelines enforcing approval gates

- Retraining automation triggered by drift detection

- Audit logging for compliance demonstration

Kubeflow, Apache Airflow, and cloud-native orchestration services provide workflow capabilities.
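The combination of drift-triggered retraining and audit logging can be sketched independently of any particular orchestrator. The functions below are hypothetical and are not part of Kubeflow or Airflow; in practice the same logic would sit inside a pipeline task, and the log would go to durable, append-only storage rather than a Python list.

```python
import json
from datetime import datetime, timezone


def audit_event(log: list, actor: str, action: str, **details) -> dict:
    """Append a timestamped JSON record describing a pipeline action."""
    entry = {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "actor": actor,
        "action": action,
        "details": details,
    }
    log.append(json.dumps(entry, sort_keys=True))
    return entry


def retrain_if_drifted(drift_score, threshold, retrain_fn, log):
    """Call retrain_fn only when drift exceeds the threshold, logging each decision."""
    if drift_score <= threshold:
        audit_event(log, "pipeline", "retrain_skipped",
                    drift_score=drift_score, threshold=threshold)
        return None
    audit_event(log, "pipeline", "retrain_triggered",
                drift_score=drift_score, threshold=threshold)
    model = retrain_fn()
    audit_event(log, "pipeline", "retrain_completed")
    return model
```

Logging the decision not to retrain is deliberate: for compliance purposes, evidence that a check ran and passed is often as important as evidence of an intervention.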

Organizational Structure

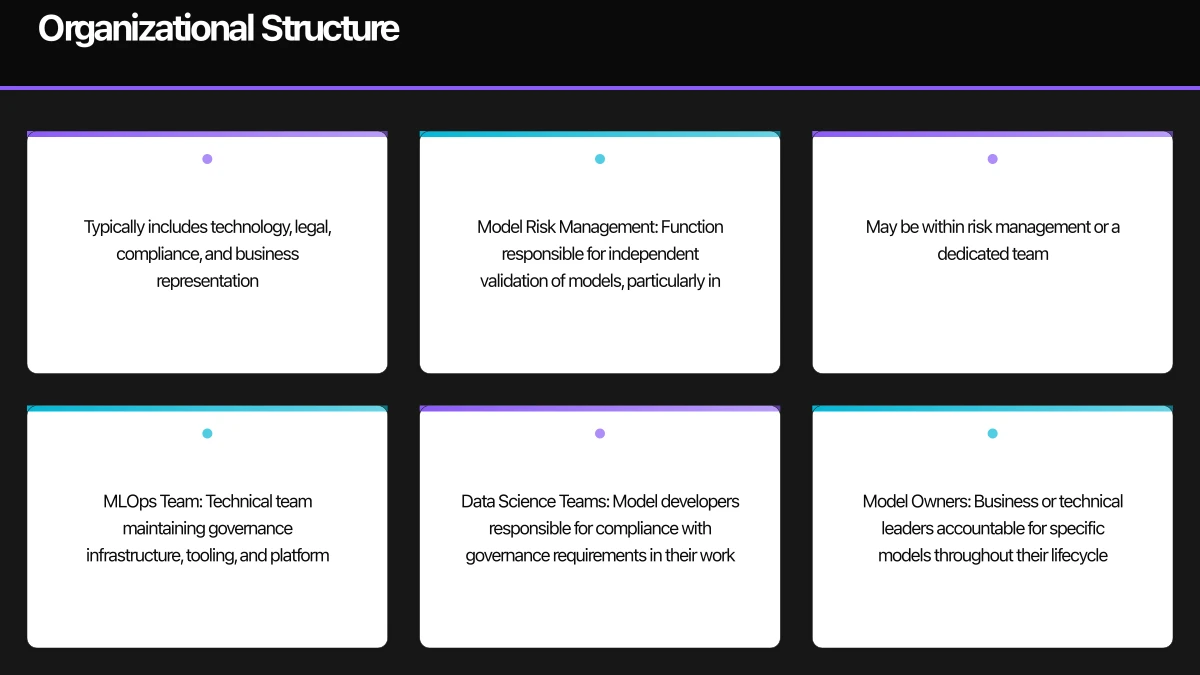

Governance requires clear organizational accountability:

Roles and Responsibilities

AI Ethics Committee: Cross-functional body reviewing high-risk AI applications, setting policy, and advising on ethical considerations. Typically includes technology, legal, compliance, and business representation.

Model Risk Management: Function responsible for independent validation of models, particularly in regulated industries. May be within risk management or a dedicated team.

MLOps Team: Technical team maintaining governance infrastructure, tooling, and platform capabilities.

Data Science Teams: Model developers responsible for compliance with governance requirements in their work.

Model Owners: Business or technical leaders accountable for specific models throughout their lifecycle.

RACI Clarification

Clear responsibility assignment prevents gaps:

| Activity | Data Scientist | Model Owner | MLOps | Risk Mgmt | Ethics Committee |
|---|---|---|---|---|---|
| Model Development | R | A | C | I | I |
| Bias Assessment | R | A | C | C | C |
| Deployment Approval | I | R | C | A (high-risk) | A (high-risk) |
| Production Monitoring | C | A | R | I | I |
| Incident Response | R | A | R | C | C |

(R = Responsible, A = Accountable, C = Consulted, I = Informed)
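A RACI matrix kept as data can be checked automatically for gaps. The sketch below copies the letters from the table above (dropping the "high-risk" qualifier for simplicity) and flags any activity that lacks a Responsible or an Accountable role; the variable and function names are hypothetical.

```python
ROLES = ["Data Scientist", "Model Owner", "MLOps", "Risk Mgmt", "Ethics Committee"]

# Letters copied from the table above; the "(high-risk)" qualifier on
# Deployment Approval is dropped for simplicity.
RACI = {
    "Model Development":     ["R", "A", "C", "I", "I"],
    "Bias Assessment":       ["R", "A", "C", "C", "C"],
    "Deployment Approval":   ["I", "R", "C", "A", "A"],
    "Production Monitoring": ["C", "A", "R", "I", "I"],
    "Incident Response":     ["R", "A", "R", "C", "C"],
}


def roles_with(activity: str, letter: str) -> list:
    """Roles holding the given RACI letter for an activity."""
    return [role for role, code in zip(ROLES, RACI[activity]) if code == letter]


def find_gaps(matrix: dict) -> list:
    """Activities lacking a Responsible or an Accountable role."""
    return [a for a, codes in matrix.items() if "R" not in codes or "A" not in codes]
```

Running `find_gaps` whenever the matrix changes is a cheap way to catch an activity that has been left without an owner after a reorganization.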

Skills and Capabilities

Effective governance requires capabilities often underdeveloped in organizations:

Regulatory Expertise: Understanding evolving AI regulations across relevant jurisdictions.

Ethics Training: Equipping data scientists to identify and address ethical considerations in their work.

MLOps Engineering: Building and operating governance infrastructure.

Risk Assessment: Evaluating model risks in business and regulatory context.

Implementation Approach

Establishing AI governance requires phased implementation:

Phase 1: Foundation

Inventory and Assessment: Catalog existing models, assess risk levels, identify immediate gaps.

Policy Development: Establish governance policies appropriate to organizational context and risk profile.

Quick Wins: Implement basic monitoring for production models, establish documentation requirements for new development.

Phase 2: Infrastructure

Platform Selection: Choose and implement MLOps platforms supporting governance requirements.

Process Integration: Embed governance into development and deployment workflows.

Training and Enablement: Build organizational capability through training and documentation.

Phase 3: Maturation

Advanced Capabilities: Implement sophisticated monitoring, automated drift detection, and comprehensive lineage.

Continuous Improvement: Refine processes based on operational experience and evolving requirements.

External Validation: Third-party assessment of governance maturity.

Balancing Governance and Velocity

A common concern: won’t governance slow innovation? The relationship is more nuanced:

Governance Enables Speed

Mature governance actually accelerates AI delivery:

- Reusable Components: Governed feature stores and model templates reduce redundant development.

- Faster Approvals: Clear criteria and automated checks make approvals faster and more consistent than ad-hoc review.

- Reduced Rework: Catching issues early prevents costly production failures and emergency remediation.

- Stakeholder Confidence: Demonstrated governance builds trust enabling larger investments and expanded scope.

Risk-Proportionate Governance

Governance rigor should match risk:

- Experimentation: Minimal governance for sandboxed exploration that cannot impact production.

- Internal Analytics: Moderate governance for models informing but not automating decisions.

- Production Automation: Rigorous governance for models making autonomous decisions affecting customers or operations.

Automation Over Bureaucracy

Governance should automate wherever possible:

- Automated bias testing in CI/CD pipelines

- Automated documentation generation from code and metadata

- Automated drift detection and alerting

- Automated compliance reporting

Manual review can then focus on decisions that genuinely require judgment rather than on mechanical checks.
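As an example of automated documentation generation, the sketch below renders a plain-text model card from registry metadata and reports which required fields are missing. The field list follows the documentation requirements described earlier in this article; the function names and output layout are assumptions, not a standard model card format.

```python
# Fields taken from the documentation requirements described in this article.
REQUIRED_FIELDS = [
    "purpose",
    "intended_use",
    "limitations",
    "training_data",
    "performance_metrics",
    "fairness_assessment",
]


def missing_fields(metadata: dict) -> list:
    """Required fields that are absent or empty."""
    return [f for f in REQUIRED_FIELDS if not metadata.get(f)]


def render_model_card(name: str, metadata: dict) -> str:
    """Render a plain-text card, marking missing fields explicitly."""
    lines = [f"Model card: {name}"]
    for f in REQUIRED_FIELDS:
        label = f.replace("_", " ").capitalize()
        lines.append(f"{label}: {metadata.get(f) or 'MISSING'}")
    return "\n".join(lines)


# Made-up metadata for illustration.
card = render_model_card(
    "churn-predictor",
    {"purpose": "Predict customer churn", "limitations": "Retail customers only"},
)
```

A CI step could fail the build when `missing_fields` returns anything for a high-risk model, which turns a documentation policy into a mechanical check.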

Measuring Governance Effectiveness

Governance programs require metrics demonstrating value:

Compliance Metrics

- Documentation Completeness: Percentage of production models with complete documentation

- Policy Adherence: Models deployed following required approval processes

- Audit Readiness: Ability to produce required evidence within defined timeframes
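The first two compliance metrics reduce to simple percentages over the model inventory. The record layout below (`stage`, `docs_complete`, `approved`) is a hypothetical schema chosen for illustration.

```python
def documentation_completeness(models: list) -> float:
    """Percentage of production models with complete documentation."""
    production = [m for m in models if m["stage"] == "production"]
    if not production:
        return 100.0
    complete = sum(1 for m in production if m["docs_complete"])
    return 100.0 * complete / len(production)


def policy_adherence(deployments: list) -> float:
    """Percentage of deployments that followed the required approval process."""
    if not deployments:
        return 100.0
    approved = sum(1 for d in deployments if d["approved"])
    return 100.0 * approved / len(deployments)


# Made-up inventory: three of four production models are fully documented.
inventory = [
    {"stage": "production", "docs_complete": True},
    {"stage": "production", "docs_complete": True},
    {"stage": "production", "docs_complete": False},
    {"stage": "production", "docs_complete": True},
    {"stage": "staging", "docs_complete": False},
]
```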

Operational Metrics

- Time to Detection: Duration from problem occurrence to alert

- Time to Resolution: Duration from alert to remediation

- Model Freshness: Age of production models versus retraining schedules

- Drift Frequency: How often models require intervention
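Time to detection and time to resolution can be computed directly from incident records, provided each record captures when the problem occurred, when it was alerted on, and when it was resolved. The field names and sample incidents below are made up for illustration.

```python
from datetime import datetime
from statistics import mean


def mean_hours(incidents: list, start_key: str, end_key: str) -> float:
    """Average elapsed hours between two timestamps across incidents."""
    durations = [
        (i[end_key] - i[start_key]).total_seconds() / 3600 for i in incidents
    ]
    return mean(durations) if durations else 0.0


# Two made-up incidents.
incidents = [
    {
        "occurred": datetime(2025, 1, 6, 9, 0),
        "alerted": datetime(2025, 1, 6, 11, 0),
        "resolved": datetime(2025, 1, 6, 17, 0),
    },
    {
        "occurred": datetime(2025, 2, 3, 8, 0),
        "alerted": datetime(2025, 2, 3, 12, 0),
        "resolved": datetime(2025, 2, 4, 12, 0),
    },
]

time_to_detection = mean_hours(incidents, "occurred", "alerted")
time_to_resolution = mean_hours(incidents, "alerted", "resolved")
```

In practice the occurrence time is often only known after root cause analysis, so time to detection is usually backfilled once the investigation closes.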

Business Metrics

- Incident Frequency: Production AI incidents over time

- Regulatory Findings: Audit findings related to AI governance

- Velocity Impact: Time from model development to production deployment

Common Challenges

Legacy Models

Organizations often have models deployed before governance was established. Retroactive documentation, assessment, and monitoring present resource challenges. Prioritize by risk and criticality.

Data Scientist Resistance

Governance perceived as bureaucracy faces adoption challenges. Emphasize enabling aspects, provide good tooling, and ensure governance adds value rather than just overhead.

Evolving Regulations

Regulatory uncertainty makes comprehensive compliance difficult. Build adaptable frameworks rather than point solutions to specific requirements.

Tool Fragmentation

Many tools address pieces of the governance puzzle. Integrating them, and maintaining the range of skills each one demands, creates operational burden. Platform consolidation and a clear architecture reduce complexity.

Future Considerations

Several trends will shape AI governance evolution:

Automated Governance: AI assisting AI governance through automated bias detection, documentation generation, and compliance assessment.

Regulatory Harmonization: Global AI governance frameworks may eventually converge, simplifying multinational compliance.

Third-Party Validation: Independent AI audit and certification services emerging to provide external governance assurance.

Foundation Model Governance: Specific governance challenges for organizations building on large language models and other foundation models, including supply chain governance for model components.

Strategic Recommendations

For enterprise CTOs establishing AI governance:

- Start Now: Governance debt compounds. Beginning governance practice before regulatory deadlines creates advantage.

- Risk-Stratify: Focus governance investment on highest-risk applications while maintaining lightweight processes for lower-risk use cases.

- Invest in Infrastructure: Governance without enabling platforms becomes manual burden. MLOps infrastructure pays dividends.

- Embed in Culture: Governance succeeds when data scientists see it as enabling rather than constraining their work.

- Measure and Improve: Governance programs require metrics demonstrating value and identifying improvement opportunities.

Conclusion

AI model governance has transitioned from best practice to business imperative. Regulatory requirements, operational risks, and stakeholder expectations demand systematic governance of AI systems. Organizations investing in governance frameworks now will be better positioned for regulatory compliance, operational excellence, and responsible AI leadership.

The organizations that master AI governance will deploy AI more confidently, at greater scale, with reduced risk. Those that defer governance will face mounting technical debt, regulatory exposure, and potential incidents that undermine AI initiatives entirely.

References and Further Reading

- European Commission. (2024). “Artificial Intelligence Act.” Official Journal of the European Union.

- NIST. (2024). “AI Risk Management Framework.” National Institute of Standards and Technology.

- Australian Government. (2024). “AI Ethics Framework.” Department of Industry, Science and Resources.

- Google. (2025). “Responsible AI Practices.” Google AI.

- Microsoft. (2025). “Responsible AI Standard.” Microsoft.

- Gartner. (2025). “Market Guide for AI Governance Platforms.” Gartner Research.

- Mitchell, M., et al. (2019). “Model Cards for Model Reporting.” Proceedings of the Conference on Fairness, Accountability, and Transparency (FAT* ’19).